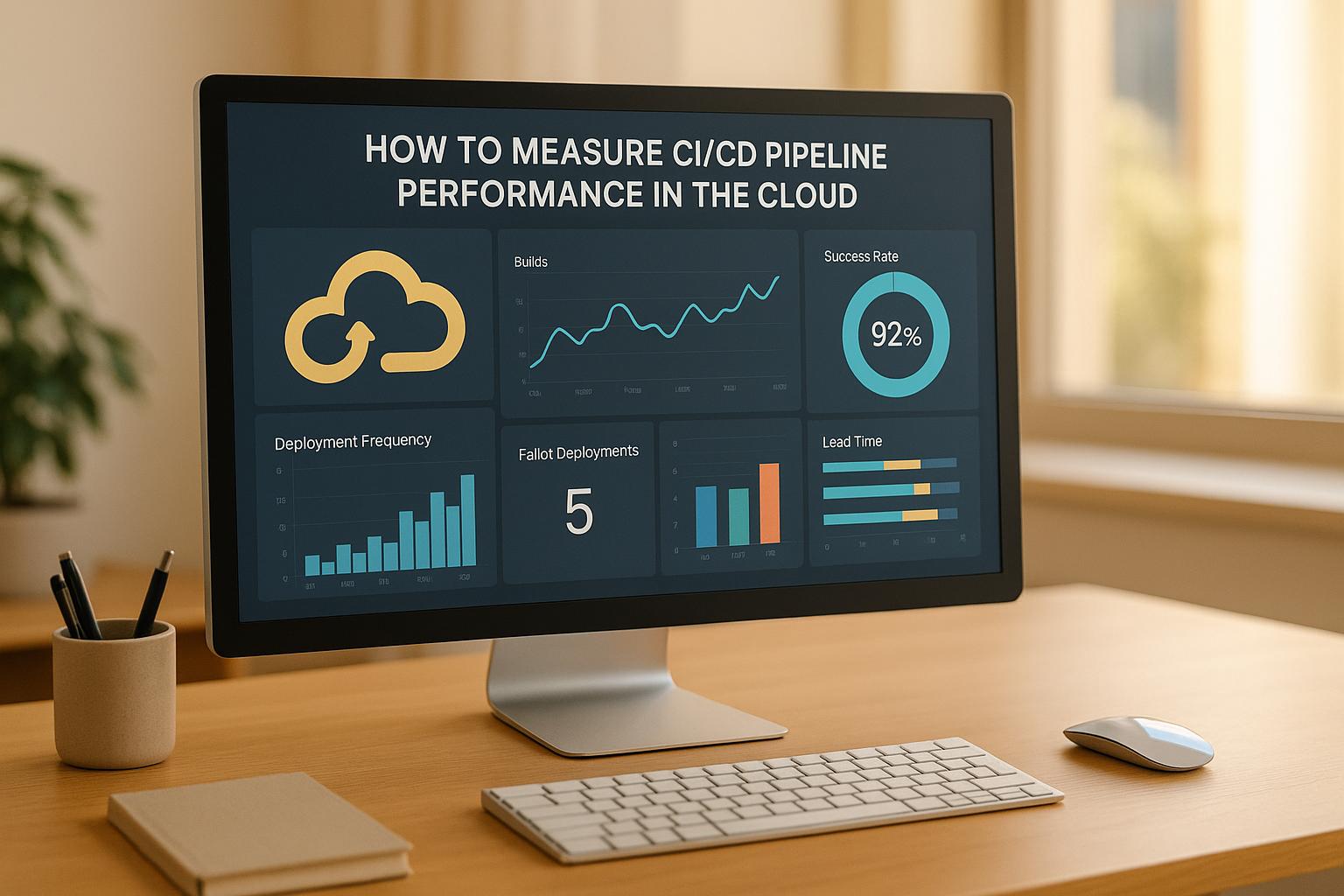

Measuring CI/CD pipeline performance in the cloud boils down to tracking critical metrics, improving workflows, and managing costs effectively. Here's a quick summary of what you need to know:

- Key Metrics: Focus on build time, deployment frequency, lead time for changes, success/failure rates, and mean time to recovery (MTTR). For cloud-specific insights, track CPU/memory usage, network throughput, storage I/O, auto-scaling events, and regional performance differences.

- Tools: Popular options include Prometheus + Grafana (flexible, Kubernetes-friendly), AWS CloudWatch (AWS-native), Azure Monitor (for Azure setups), Datadog (multi-cloud), and New Relic (deployment impact tracking).

- Cost Management: Optimise resource usage (CPU/memory), reduce storage/network costs, and avoid idle resources to save money.

- Automation: Use infrastructure-as-code tools, automated alerts, and dashboards to streamline monitoring and ensure consistency.


Key Metrics for CI/CD Pipeline Performance

Tracking the right metrics is crucial for improving your CI/CD pipeline's performance, especially in cloud environments. These metrics provide insight into operational efficiency and cost management, enabling teams to make informed decisions about their deployment processes. Below, we break down the key metrics into two categories: core performance indicators and cloud-specific considerations.

Core CI/CD Performance Metrics

To gauge the efficiency of your pipeline, start with these essential metrics:

Build Time: This measures how long it takes to compile, test, and package your code. In cloud environments, build times can fluctuate based on resource allocation and workload distribution. Monitoring average and peak build times can help uncover performance bottlenecks.

Deployment Frequency: This tracks how often successful releases are deployed to production. High-performing teams often deploy multiple times a day, while less mature processes may only allow for weekly or even monthly deployments. This metric reflects your delivery speed.

Lead Time for Changes: This measures the time from a code commit to its deployment in production. It covers the entire process, including development, testing, approval, and deployment. Shorter lead times indicate efficient workflows and faster feedback cycles.

Success and Failure Rates: These metrics show the percentage of successful builds and deployments, as well as common failure reasons, such as test errors or configuration issues.

Mean Time to Recovery (MTTR): This measures how quickly your team can resolve issues after a failed deployment or system problem. In cloud environments, automated rollback features can significantly reduce recovery times when configured correctly.
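As an illustration, the core metrics above can be derived from a simple log of deployment records. The sketch below is a minimal example, not a definitive implementation: the `Deployment` record shape and the `summarise` function are hypothetical names, and it assumes you can export commit, deployment, and recovery timestamps from your pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    """One production deployment attempt (hypothetical record shape)."""
    commit_time: datetime                      # when the change was committed
    deploy_time: datetime                      # when the deployment finished
    succeeded: bool
    restored_time: Optional[datetime] = None   # set only for failed deployments


def summarise(deployments: List[Deployment], period_days: int) -> dict:
    """Compute deployment frequency, success rate, lead time and MTTR."""
    successes = [d for d in deployments if d.succeeded]
    failures = [d for d in deployments if not d.succeeded and d.restored_time]

    lead_times = [d.deploy_time - d.commit_time for d in successes]
    recoveries = [d.restored_time - d.deploy_time for d in failures]

    def mean(deltas):
        # Average a list of timedeltas; None when there is nothing to average.
        return sum(deltas, timedelta()) / len(deltas) if deltas else None

    return {
        "deployments_per_day": len(successes) / period_days,
        "success_rate_pct": 100 * len(successes) / len(deployments) if deployments else None,
        "mean_lead_time": mean(lead_times),
        "mttr": mean(recoveries),
    }
```

Here lead time is measured only for successful deployments, and MTTR only for failures with a recorded restore time. Both are simplifying assumptions you may want to adjust.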

Cloud-Specific Metrics to Track

Cloud environments present unique challenges and opportunities, requiring additional metrics to ensure optimal performance and cost management:

CPU Utilisation: This tracks processor usage during builds and deployments. Under-provisioning can slow performance, while over-provisioning wastes money. Since most cloud providers charge based on compute time, optimising CPU usage can lead to cost savings.

Memory Usage Patterns: Understanding how much memory your processes consume helps you select appropriate instance sizes. Memory bottlenecks can slow or fail builds, while over-allocating memory increases costs unnecessarily.

Network Throughput: This measures the rate of data transfer during builds and deployments, especially when moving large artifacts across cloud regions or downloading external dependencies. Consistently high transfer volumes may indicate opportunities to optimise storage or implement caching.

Storage I/O Metrics: These track read and write speeds for accessing source code, build artifacts, or test data. Cloud storage performance varies depending on the type and configuration, so identifying bottlenecks here can improve efficiency.

Auto-Scaling Events: This monitors how often your infrastructure scales up or down to meet demand. Frequent scaling events might suggest the need for better capacity planning or adjustments in instance types.

Regional Performance Variations: For teams deploying across multiple cloud regions, comparing build and deployment times across locations can highlight regional issues or opportunities for improvement.

Metrics Comparison Table

| Metric | Definition | Primary Use Case | Cloud Relevance |
|---|---|---|---|
| Build Time | Time from code commit to completed build | Identifying bottlenecks | High - impacts compute costs |
| Deployment Frequency | Successful deployments per time period | Measuring delivery speed | Medium - affects resource planning |
| Lead Time for Changes | Time from commit to production deployment | Overall process efficiency | High - includes provisioning time |
| Success Rate | Successful pipeline executions (%) | Quality and reliability assessment | High - failed builds waste resources |
| MTTR | Time to restore service after failure | Incident response effectiveness | High - downtime costs in cloud |
| CPU Utilisation | Processor use during execution | Resource optimisation | Very High - directly affects costs |
| Memory Usage | RAM consumption during execution | Instance sizing decisions | Very High - impacts instance choice |
| Network Throughput | Data transfer rate during execution | Connectivity optimisation | Medium - affects transfer costs |
| Storage I/O | Read/write operations per second | Storage performance tuning | Medium - impacts build speed |
| Auto-scaling Events | Frequency of infrastructure scaling | Capacity planning | High - indicates cost efficiency |

These metrics not only measure performance but also guide decisions to reduce cloud expenses and improve workflows. The metrics you focus on should match your team's goals. For instance, teams aiming for speed might prioritise deployment frequency and lead time, while cost-conscious teams may focus on resource usage. Striking the right balance between operational and financial metrics ensures both efficiency and cost-effectiveness.

Tools and Techniques for Measuring CI/CD Metrics

Selecting the right tools and automation techniques is essential for accurately tracking CI/CD metrics in cloud environments. With a variety of monitoring solutions available, it's important to align your choice with your infrastructure and budget. A well-thought-out strategy can provide actionable insights without adding unnecessary complexity.

Monitoring Tools for CI/CD Pipelines

Prometheus and Grafana are popular open-source tools for monitoring CI/CD pipelines. Prometheus collects time-series data from pipeline components, while Grafana handles visualisation. This combination is especially effective in Kubernetes environments, where Prometheus can automatically discover and monitor pipeline pods. This setup offers flexibility and avoids per-metric fees, since you pay only for the infrastructure it runs on, but it requires technical expertise to implement and maintain.

AWS CloudWatch is a natural fit for AWS-based CI/CD pipelines, automatically gathering metrics from services like CodeBuild, CodeDeploy, and Lambda. It provides built-in dashboards for standard pipeline metrics and supports custom metrics via API. Its usage-based pricing is economical for smaller deployments but can become costly with a large volume of custom metrics. While CloudWatch integrates seamlessly with AWS, it may not be the best choice for multi-cloud or hybrid setups.

Azure Monitor is tailored for Azure DevOps pipelines and services within Microsoft Azure. It includes tools like Application Insights for performance monitoring and Log Analytics for querying logs. Pricing is based on data ingestion and retention needs. Azure Monitor stands out for its ability to correlate infrastructure metrics with application performance, offering a detailed view of how pipelines affect end-user experiences.

Datadog is a commercial solution that supports multiple cloud providers and on-premises infrastructure. It comes with pre-built integrations for tools like Jenkins, GitLab CI, and GitHub Actions, enabling correlation of metrics across the entire tech stack. Datadog uses a per-host pricing model, and while it includes advanced features like anomaly detection and automated alerts, costs can escalate for larger deployments.

New Relic focuses on application performance monitoring while also providing infrastructure insights. It automatically tracks how deployments impact key metrics such as response times and error rates. With its deployment tracking feature, teams can link code changes to performance variations, making it easier to pinpoint problematic releases. Pricing is based on data ingestion, with potential additional costs for extended data retention.

Once you've chosen your tools, automation can further enhance your monitoring efforts.

Automation for Continuous Monitoring

Automation shifts monitoring from a reactive chore to a proactive, scalable process. By using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation, you can define monitoring configurations alongside your pipeline infrastructure. This ensures consistency across environments and simplifies replication for new projects or teams.

Automated alerting is another key strategy. Notifications can be triggered when metrics exceed predefined thresholds, enabling teams to address issues quickly. It's important to fine-tune these thresholds to avoid alert fatigue while ensuring genuine problems are flagged. For instance, you might set build time alerts to trigger when durations exceed 150% of historical averages, accounting for natural variations without relying on fixed limits.

Integrating monitoring tools directly into your CI/CD pipeline can involve adding agents to build containers, setting up webhook notifications, or using pipeline plugins that automatically send metrics to your chosen platform. To avoid slowing down your builds, aim to keep monitoring overhead below 5% of total build time.

Automated dashboards are another powerful tool. Platforms like Grafana allow you to maintain dashboards using version-controlled configuration files. This ensures updates to dashboards follow the same review and approval processes as code changes, keeping everything consistent across teams.

Tool Comparison Table

| Tool | Pricing Model | Best For | Cloud Support | Setup Complexity | Key Strengths |
|---|---|---|---|---|---|
| Prometheus + Grafana | Infrastructure cost-based | Kubernetes environments | Multi-cloud | High | Flexible, cost-efficient monitoring |
| AWS CloudWatch | Usage-based (per custom metric) | AWS pipelines | AWS | Low | Seamless AWS integration |
| Azure Monitor | Usage-based (data ingestion and storage) | Azure DevOps pipelines | Primarily Azure | Low | Correlation of application and infrastructure data |
| Datadog | Per-host usage-based | Multi-cloud environments | All major clouds | Medium | Advanced alerting and cross-stack visibility |
| New Relic | Usage-based (data ingestion) | Application performance focus | All major clouds | Medium | Deployment impact tracking |

The best tool for your needs will depend on your existing infrastructure and your team's expertise. Teams heavily invested in a specific cloud provider often benefit from using that provider's monitoring tools. Meanwhile, organisations running complex, multi-cloud environments might prefer platform-agnostic options. Start by focusing on a core set of metrics and expand gradually - this will help your team build confidence with the tools without overwhelming processes. Combining the right tools with automation ensures effective and scalable CI/CD pipeline monitoring.


Step-by-Step Guide to Performance Tracking Setup

Creating an efficient performance tracking system for your CI/CD pipelines involves setting clear goals, integrating the right tools, and committing to continuous improvement.

Setting Performance Targets

Before diving into monitoring tools, it’s important to establish baseline measurements and define performance targets. Begin by running your pipeline for about two weeks, collecting data on build times, deployment frequency, and failure rates.
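A baseline like this can be summarised with a few lines of standard-library Python. The sketch below assumes you have exported build durations (in minutes) and pass/fail outcomes for the collection period. The function name and the choice of mean, median, and 95th percentile are illustrative assumptions rather than a prescribed method.

```python
import statistics
from typing import Dict, List


def baseline(build_minutes: List[float], outcomes: List[bool], days: int = 14) -> Dict[str, float]:
    """Summarise a baseline collection period.

    build_minutes: duration of each build in minutes (at least two values)
    outcomes: True for each successful pipeline run, False for each failure
    """
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(build_minutes, n=20)[-1]
    return {
        "mean_build_min": statistics.mean(build_minutes),
        "median_build_min": statistics.median(build_minutes),
        "p95_build_min": p95,
        "runs_per_day": len(outcomes) / days,
        "failure_rate_pct": 100 * outcomes.count(False) / len(outcomes),
    }
```

Recording the 95th percentile alongside the average is useful because a handful of very slow builds can hide behind a healthy-looking mean.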

When setting these targets, align them with your business goals. For instance, a financial services company might prioritise a 99.5% success rate with 45-minute builds, while a social media platform could focus on achieving 20+ deployments per day with builds under 10 minutes.

Consider your team’s experience level when deciding on targets. If you’re working with a newer team, start with achievable goals, like cutting build times by 15% over three months. More seasoned teams can aim higher, such as reducing build times to under five minutes or exceeding industry deployment benchmarks.

Using SMART goals (Specific, Measurable, Achievable, Relevant, Time-bound) can make a big difference. For example, instead of saying, “improve build speed,” set a target like, “reduce average build time from 12 minutes to 8 minutes within six weeks.” This approach ensures clarity and accountability.

Document these targets in a shared space where everyone on the team can access them. Include the reasoning behind each target and how it ties to broader business objectives. This documentation will not only help during performance reviews but also serve as a guide for new team members.

Once your goals are in place, the next step is integrating tools to automate metric collection.

Integrating Monitoring Tools and Automating Data Collection

To track performance effectively, you’ll need to integrate monitoring tools into your CI/CD pipeline. While the steps may vary depending on the platform, the general principles remain consistent.

For AWS-based pipelines, enable CloudWatch in your CodeBuild and CodeDeploy configurations. Add the CloudWatch agent to your build environments, update your buildspec.yml files with metric publishing commands, and ensure the necessary permissions are in place.
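As a rough sketch of what a metric publishing step might look like, the Python below builds a payload for CloudWatch's `PutMetricData` operation and sends it with boto3. The namespace `Custom/CICD`, the metric names, and the `Project` dimension are assumptions chosen for illustration. The build role would also need the `cloudwatch:PutMetricData` permission.

```python
from typing import Any, Dict, List


def build_metric_data(project: str, duration_seconds: float, succeeded: bool) -> List[Dict[str, Any]]:
    """Build the MetricData list for CloudWatch's PutMetricData call."""
    # Hypothetical dimension: one time series per project.
    dimensions = [{"Name": "Project", "Value": project}]
    return [
        {"MetricName": "BuildDuration", "Dimensions": dimensions,
         "Value": duration_seconds, "Unit": "Seconds"},
        {"MetricName": "BuildSuccess", "Dimensions": dimensions,
         "Value": 1.0 if succeeded else 0.0, "Unit": "Count"},
    ]


def publish(metric_data: List[Dict[str, Any]], namespace: str = "Custom/CICD") -> None:
    """Send the metrics to CloudWatch; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the payload builder works without AWS access
    boto3.client("cloudwatch").put_metric_data(Namespace=namespace, MetricData=metric_data)
```

A script along these lines could be invoked from a command in the `post_build` phase of a buildspec. Keeping the payload builder separate from the network call makes it easy to unit-test without AWS access.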

If you’re working in Kubernetes environments, deploy Prometheus using Helm charts. Configure it to scrape metrics from your CI/CD pods and use the Prometheus Operator for declarative management of monitoring settings. ServiceMonitor resources can automatically discover and monitor new pipeline components.
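For Prometheus to scrape anything, your pipeline components need to expose metrics in its text exposition format. The sketch below renders a single gauge in that format using plain Python. The metric name `ci_build_duration_seconds` and its labels are hypothetical, and in practice you would more likely use an official client library than hand-render the text.

```python
from typing import Dict


def render_gauge(name: str, help_text: str, value: float, labels: Dict[str, str]) -> str:
    """Render one gauge sample in the Prometheus text exposition format.

    Note: label values are not escaped here, so this sketch assumes they
    contain no quotes, backslashes, or newlines.
    """
    label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
    return (
        f"# HELP {name} {help_text}\n"
        f"# TYPE {name} gauge\n"
        f"{name}{{{label_str}}} {value}\n"
    )


if __name__ == "__main__":
    print(render_gauge(
        "ci_build_duration_seconds",          # hypothetical metric name
        "Duration of the last build",
        312.5,
        {"pipeline": "api", "branch": "main"},
    ))
```

Text like this can be served from a `/metrics` endpoint for Prometheus to scrape. Short-lived build jobs often push it to a Pushgateway instead.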

For teams managing multi-cloud setups, platform-agnostic tools like Datadog can be a great choice. Install Datadog agents across your infrastructure and use their API to send custom metrics directly from your pipeline scripts. This ensures consistent monitoring across environments.

Automating data collection is key to reducing manual work and ensuring reliable metrics. Write scripts that gather and format data at critical pipeline stages. For example, you could create a post-build script that calculates test coverage and sends the results to your monitoring tool. Keep these scripts lightweight - aim for runtimes under 30 seconds.

To make monitoring actionable, create real-time dashboards. Use infrastructure-as-code techniques to define and version-control your dashboard configurations. This ensures consistency across environments and allows easy rollbacks if needed.
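One way to keep dashboards under version control is to generate their JSON from a small script and commit the output. The sketch below produces a minimal Grafana-style dashboard definition. The panel titles and PromQL queries are hypothetical, and a real dashboard would normally carry more fields (data source, layout, and so on) than shown here.

```python
import json
from typing import Dict


def build_dashboard(title: str, panels: Dict[str, str]) -> dict:
    """Build a minimal dashboard definition from {panel title: PromQL query}."""
    return {
        "title": title,
        "panels": [
            {"type": "timeseries", "title": panel_title, "targets": [{"expr": query}]}
            for panel_title, query in panels.items()
        ],
    }


def render(dashboard: dict) -> str:
    """Serialise deterministically so version-control diffs stay small."""
    return json.dumps(dashboard, indent=2, sort_keys=True) + "\n"


if __name__ == "__main__":
    dashboard = build_dashboard("CI/CD overview", {
        # Hypothetical metric names used for illustration only.
        "Average build duration": "avg(ci_build_duration_seconds)",
        "Failed builds (24h)": "sum(increase(ci_build_failures_total[24h]))",
    })
    print(render(dashboard))
```

Sorting keys and fixing the indentation means that regenerating an unchanged dashboard produces an identical file, so reviews only show real changes.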

Best Practices for Continuous Optimisation

Once your tracking system is up and running, focus on ongoing optimisation by leveraging dashboards and analysing trends.

Use dashboards with role-specific views to keep things clear and focused. Limit each dashboard to 6–8 key metrics to avoid overwhelming users.

Trend analysis is invaluable for spotting performance patterns that might be missed during daily monitoring. Set up reports comparing current metrics to historical data, like weekly or monthly trends. For example, a gradual increase in build times could indicate technical debt or scaling issues. Weekly reviews can help teams address these problems before they escalate.

Encourage cross-team collaboration by hosting monthly performance review meetings. These sessions should bring together developers, operations teams, and business stakeholders to discuss metrics, identify bottlenecks, and agree on improvement priorities. Document these discussions and assign specific tasks to ensure follow-through.

Set up threshold-based alerts to catch performance issues early. For instance, you could configure alerts to trigger if build times exceed 125% of the rolling 7-day average. This approach accounts for normal fluctuations while flagging genuine problems.
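The rolling-average rule described above can be expressed in a few lines. This is a minimal sketch that assumes build durations are stored per calendar day. The `should_alert` name and the dictionary layout are illustrative, and most monitoring platforms can express the same rule natively.

```python
from datetime import date, timedelta
from typing import Dict, List


def should_alert(history: Dict[date, List[float]], today: date,
                 latest_minutes: float, ratio: float = 1.25,
                 window_days: int = 7) -> bool:
    """Return True if the latest build exceeds ratio x the rolling average.

    history maps each day to the build durations (minutes) recorded that day.
    The window covers the window_days days before today, excluding today.
    """
    window = [
        minutes
        for offset in range(1, window_days + 1)
        for minutes in history.get(today - timedelta(days=offset), [])
    ]
    if not window:
        return False  # no baseline yet, so do not alert
    rolling_average = sum(window) / len(window)
    return latest_minutes > ratio * rolling_average
```

With a 10-minute rolling average, a 12-minute build would pass and a 13-minute build would trigger the alert, because the threshold sits at 12.5 minutes.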

Incorporate performance testing into your regular CI/CD processes. Add benchmarks to your test suites and fail builds that don’t meet performance standards. This helps maintain quality and prevents issues from reaching production.
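A performance gate of this kind might look like the following sketch, which times a function several times and turns the result into an exit code that a CI job can act on. The function names and the choice to compare the fastest run against the budget are assumptions. Dedicated benchmarking tools will give more robust measurements.

```python
import time
from typing import Callable, Dict


def check_benchmark(func: Callable[[], object], budget_seconds: float, repeats: int = 5) -> bool:
    """Return True if the fastest of several runs stays within the budget."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    # The minimum is less sensitive to noisy neighbours on shared build agents.
    return min(timings) <= budget_seconds


def gate_exit_code(results: Dict[str, bool]) -> int:
    """Map benchmark results to a process exit code: non-zero fails the build."""
    failed = [name for name, ok in results.items() if not ok]
    for name in failed:
        print(f"benchmark over budget: {name}")
    return 1 if failed else 0
```

A CI step could then finish with `sys.exit(gate_exit_code(results))`, so any benchmark that exceeds its budget fails the build.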

Regularly review your metrics to ensure they remain relevant. Schedule quarterly checks to remove outdated metrics and add new ones that align with evolving business priorities. This keeps your monitoring focused and prevents cluttered dashboards.

Finally, maintain documentation and share knowledge across the team. Create runbooks that explain how to interpret metrics and respond to threshold breaches. This not only aids in incident response but also helps new team members understand your approach to monitoring.

Using Data to Optimise Workflows and Costs

Once you've gathered detailed performance data from your CI/CD pipelines, the next step is turning those insights into practical improvements. These metrics act as a guide for refining efficiency and managing costs across your cloud infrastructure.

Finding Bottlenecks and Inefficiencies

Performance data often highlights issues that can be hard to spot during daily operations. For example, long queue times might point to insufficient compute resources during peak usage. If unit tests consistently take longer to run than integration tests, it could be worth re-evaluating their design or introducing parallel execution to speed things up.
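To make this concrete, the sketch below totals the time spent in each pipeline stage across several runs and ranks the stages by their share of overall time. The stage names and the per-run dictionary format are hypothetical. The point is simply that a ranked breakdown makes the biggest bottleneck obvious.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def stage_shares(runs: Iterable[Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (stage, share of total time in %) sorted with the largest first.

    Each run maps a stage name to the seconds spent in that stage.
    """
    totals: Dict[str, float] = defaultdict(float)
    for run in runs:
        for stage, seconds in run.items():
            totals[stage] += seconds
    grand_total = sum(totals.values())
    if grand_total == 0:
        return []
    shares = [(stage, 100 * seconds / grand_total) for stage, seconds in totals.items()]
    return sorted(shares, key=lambda item: item[1], reverse=True)
```

If queue time or unit tests dominate the ranking, that points towards adding capacity at peak times or running tests in parallel, respectively.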

By analysing resource usage and failure trends, you can pinpoint areas of over-provisioning, recurring problems, or bottlenecks. Addressing these not only improves performance but also trims costs. For instance, if deployment activities cluster at specific times, staggering releases or adjusting deployment strategies could smooth resource demands.

Pipeline dependencies are another area to scrutinise. If one pipeline frequently waits for another, consider whether those dependencies are necessary or if tasks could run in parallel. Insights like these are directly tied to the cost-saving measures discussed below.

Reducing Cloud Costs Through Optimisation

Cutting costs starts with fixing inefficiencies. Performance data can reveal plenty of opportunities to save money in cloud environments. One common approach is right-sizing compute resources - adjusting capacity to match actual usage patterns. For example, if your build agents are underutilised, switching to smaller instance types could lower costs without affecting performance.
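A first pass at right-sizing can be as simple as comparing each build agent's average CPU utilisation against two thresholds. In the sketch below, the 30% and 80% cut-offs are illustrative assumptions rather than recommended values, and the agent names are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def classify_agents(cpu_samples: Dict[str, List[float]],
                    low_pct: float = 30.0, high_pct: float = 80.0) -> Dict[str, str]:
    """Label each agent by its average CPU utilisation (percent).

    The thresholds are assumptions for illustration; tune them to your workloads.
    """
    labels = {}
    for agent, samples in cpu_samples.items():
        average = mean(samples)
        if average < low_pct:
            labels[agent] = "downsize candidate"
        elif average > high_pct:
            labels[agent] = "upsize candidate"
        else:
            labels[agent] = "keep"
    return labels
```

Averages hide short peaks, so it is worth checking peak utilisation and memory usage before actually moving an agent to a smaller instance type.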

Patterns in build durations might also suggest using spot instances for non-critical pipelines that can handle occasional interruptions. Spot instances are a cost-effective option for workloads with flexible timing.

Storage costs can be reduced by reviewing artefact retention policies. If build artefacts are rarely accessed, applying lifecycle rules can help lower ongoing storage expenses. Similarly, optimising caching strategies can reduce data transfer costs.

Network traffic analysis often uncovers ways to cut data transfer charges. Caching frequently used dependencies locally or using regional repositories can minimise cross-region transfer fees - particularly helpful for teams spread across multiple locations.

Idle resources are another area to monitor. For example, development environments running 24/7 but only actively used during work hours could benefit from automated scheduling. Additionally, scheduling non-urgent tasks during off-peak times can reduce competition for resources and, where pricing varies with demand (as it does for spot instances), lower costs.
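The potential saving from scheduling an always-on environment is easy to estimate. The sketch below compares a 24/7 week (168 hours) with a working-hours schedule. The hourly rate and the 10-hours-a-day, 5-days-a-week schedule are hypothetical inputs, not real prices.

```python
from typing import Dict


def scheduling_saving(hourly_rate: float, hours_per_day: float = 10,
                      days_per_week: int = 5) -> Dict[str, float]:
    """Compare weekly cost of an always-on environment with a scheduled one."""
    always_on = 24 * 7 * hourly_rate                     # 168 hours a week
    scheduled = hours_per_day * days_per_week * hourly_rate
    saving = always_on - scheduled
    return {
        "always_on": always_on,
        "scheduled": scheduled,
        "saving": saving,
        "saving_pct": 100 * saving / always_on,
    }
```

Under these assumptions the environment runs 50 hours instead of 168, a reduction of roughly 70% in compute hours regardless of the hourly rate.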

Hokstad Consulting's CI/CD and Cloud Optimisation Services

Metrics-driven optimisation not only improves workflows but also supports tailored consultancy solutions. By leveraging performance data, Hokstad Consulting offers services designed to enhance the efficiency of your CI/CD pipelines.

Hokstad Consulting specialises in turning CI/CD performance metrics into actionable improvements. Their DevOps transformation services focus on building automated CI/CD pipelines with integrated monitoring, ensuring you're collecting the right data from the outset.

Their cloud cost engineering services aim to reduce expenses by combining performance metrics with spending data. This includes detailed infrastructure audits to uncover optimisation opportunities and implementing cost-effective solutions - all while maintaining performance levels.

For bottlenecks identified through performance analysis, Hokstad Consulting provides custom development and automation solutions. Whether it’s creating bespoke monitoring dashboards, enabling automated resource scaling, or crafting tailored deployment workflows, these services are designed to meet your specific needs.

A standout feature is their "No Savings, No Fee" engagement model, which ensures that Hokstad Consulting's success is directly tied to your cost-saving outcomes. They also offer ongoing support through retainer agreements, providing regular performance reviews and updates to keep your infrastructure running efficiently as it evolves.

For businesses in the UK, Hokstad Consulting brings a deep understanding of local compliance and operational requirements. Their expertise in hybrid and private cloud environments ensures that optimisation strategies align with regulatory and security frameworks while delivering cost-effective results.

Conclusion

Tracking the performance of CI/CD pipelines in the cloud isn't just about gathering data - it's about transforming that data into actionable insights that enhance workflows and manage costs effectively. The metrics we've discussed, such as build times, deployment frequency, lead time for changes, and resource usage, serve as a solid starting point for making smarter decisions about your infrastructure.

To make monitoring truly effective, it's essential to use the right combination of tools and metrics. Whether you're focusing on key indicators like mean time to recovery or cloud-specific measures like auto-scaling efficiency, setting and regularly updating a baseline is crucial. This allows you to pinpoint bottlenecks before they disrupt productivity and uncover cost-saving opportunities that might otherwise remain hidden.

The real power of performance data lies in its ability to drive meaningful changes. It can expose over-provisioned resources, suggest more efficient scheduling patterns, or highlight dependencies that slow down your pipeline. These insights often lead to direct cost savings and improved efficiency.

Cloud environments offer unique advantages for CI/CD processes, but they also require tailored monitoring approaches. The dynamic nature of cloud resources can make traditional monitoring methods less effective. By implementing a robust system that tracks both performance and cost metrics, you create a feedback loop that continuously refines your deployment processes while keeping expenses in check.

For organisations aiming to maximise these benefits, collaborating with experts like Hokstad Consulting can be a game-changer. They specialise in turning performance data into actionable strategies for DevOps and cloud optimisation. With the right monitoring, data-driven improvements, and expert guidance, your CI/CD pipelines can operate efficiently while aligning with your cost management goals.

FAQs

What are the advantages of monitoring CI/CD pipeline performance using cloud-specific metrics?

Monitoring the performance of your CI/CD pipeline using cloud-specific metrics can bring a range of benefits. These metrics provide in-depth insights into each phase of the pipeline - whether it's build, test, or deployment - allowing teams to pinpoint bottlenecks and areas of inefficiency. With this knowledge, workflows can be fine-tuned, deployment times cut down, and the overall pace of software delivery improved.

Cloud-specific data also helps teams make smarter use of resources and scale operations effectively. This means they can adapt to fluctuating demands without sacrificing performance. The result? Faster, more dependable updates that keep businesses flexible and competitive in cloud-native environments.

How can I balance cost efficiency and performance in my cloud-based CI/CD pipelines?

To cut costs without sacrificing performance in your cloud-based CI/CD pipelines, the key lies in using resources wisely. Start with choosing the right compute instances for your specific workloads. For non-critical jobs that can tolerate interruptions, consider spot instances; for predictable, steady workloads, reserved instances can significantly lower expenses. Automating tasks like pipeline execution and resource scaling is another way to reduce waste and save money.

You can also speed up your build and testing processes by introducing automated quality checks early in the pipeline. This not only reduces the need for manual input but also shortens deployment timelines. Incorporating auto-scaling and continuous monitoring helps ensure your pipelines only use the resources they actually need, avoiding unnecessary spending. Regularly reviewing performance metrics is crucial too - it helps you spot bottlenecks and make informed tweaks to keep everything running smoothly and efficiently.

How can I automate the monitoring of my CI/CD pipeline across multiple cloud platforms?

To keep an eye on your CI/CD pipeline in a multi-cloud setup, orchestration tools like Spinnaker or Argo CD can be a game-changer. They simplify workflows and make managing deployments across various cloud platforms much smoother, ensuring everything runs consistently and efficiently.

By connecting your CI/CD tools - such as Jenkins, GitLab CI, or CircleCI - with centralised monitoring solutions, you gain the ability to track performance, spot issues quickly, and uphold security standards. This integration offers continuous oversight, making it easier to fine-tune deployment cycles in the often-complex world of multi-cloud environments.