File system tuning is the process of adjusting storage configurations to improve performance and reduce costs in cloud environments. This involves optimising factors like I/O operations, block size, journaling, and caching. For example, businesses can achieve up to 50% cost savings by implementing proper tuning strategies. Key metrics to monitor include IOPS, throughput, and latency - each critical for different workloads, from small file operations to large data transfers.

UK businesses face unique challenges, including GDPR compliance, data sovereignty, and rising ransomware threats. Selecting the right file system and tuning techniques - like caching, scaling, and lifecycle management - can help balance performance, security, and costs. Tools like fio and iostat aid in monitoring and benchmarking, while automated policies ensure long-term efficiency.

To maximise benefits, businesses should regularly review configurations, align them with workload demands, and consider expert support for tailored solutions. For example, optimising cloud setups has saved some UK firms over £40,000 annually. Whether it’s improving latency for real-time apps or managing storage tiers, file system tuning is key to efficient cloud storage management.

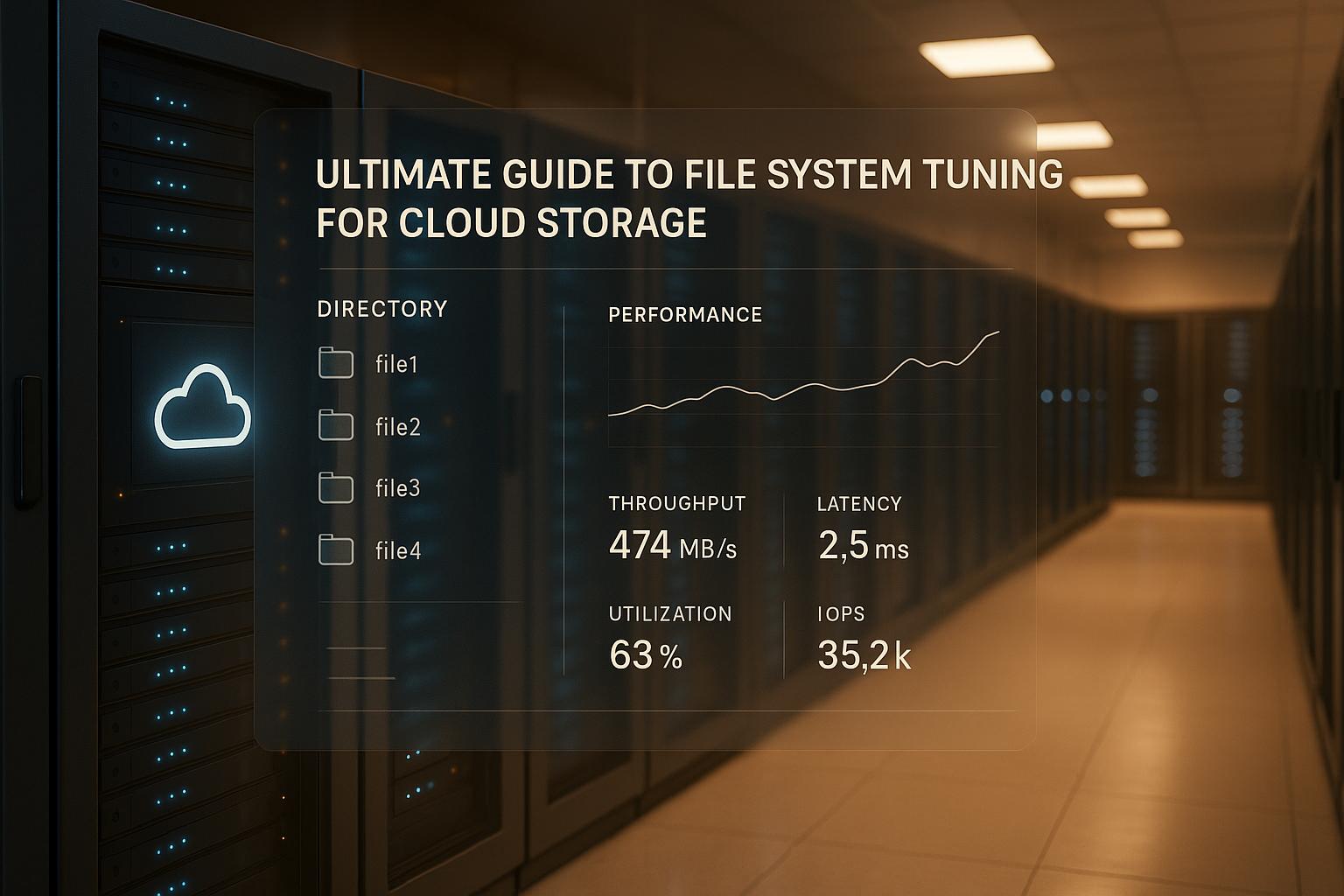

File System Performance Metrics for Cloud Storage

Performance Metrics: Throughput, IOPS, and Latency

Understanding key performance metrics like IOPS, throughput, and latency is essential when evaluating cloud storage systems. Here's a quick breakdown:

- IOPS (Input/Output Operations Per Second) measures the number of read or write operations per second. It's particularly useful for workloads involving small files or database operations [3].

- Throughput refers to the amount of data transferred per second, making it critical for large file transfers and streaming applications [3].

- Latency measures the time it takes for a system to respond to a request. Lower latency means faster responses, which is vital for real-time applications [3].

These metrics are interconnected. Throughput is roughly IOPS multiplied by block size, so it rises with higher IOPS, larger blocks, or both [3]. Flash storage typically delivers more consistent latency than traditional spinning disks, where latency varies with the physical location of the data [3]. When choosing a cloud storage solution, work out which of these metrics matters most for your specific workload [1].

| Metric | IOPS | Throughput |
|---|---|---|
| Measurement | Input/output operations per second | Bytes (usually megabytes) per second |
| Meaning | Number of read/write operations | Volume of data transferred |
| Best for | Random I/O and small files | Sequential and large transfers |
| Limitations | Block size dependency | Less effective for random I/O |
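As a rough illustration of how IOPS, block size, and throughput relate, the sketch below converts an IOPS figure into approximate throughput. The function name and the numbers are illustrative assumptions, not measurements from any provider, and real systems also hit separate bandwidth caps.

```python
def throughput_mib_per_s(iops: float, block_size_kib: float) -> float:
    """Approximate throughput in MiB/s for a given IOPS rate and block size in KiB."""
    return iops * block_size_kib / 1024


# Same IOPS budget, two different block sizes (illustrative numbers only).
small_blocks = throughput_mib_per_s(iops=3000, block_size_kib=4)
large_blocks = throughput_mib_per_s(iops=3000, block_size_kib=256)

print(f"4 KiB blocks:   {small_blocks:.1f} MiB/s")
print(f"256 KiB blocks: {large_blocks:.1f} MiB/s")
```

The same IOPS figure can therefore describe very different data rates, which is why an IOPS number is hard to interpret without the block size it was measured at.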

Common Performance Bottlenecks

Performance bottlenecks can significantly impact response times, resource usage, and overall user experience [4]. Some common issues include:

- Network Delays and Bandwidth Limitations: These can restrict data transfer speeds, especially for applications with high throughput demands or many simultaneous users.

- Metadata Operation Delays: Metadata operations, such as listing directories or checking file attributes, can slow down when handling large numbers of files. For example, buckets with hierarchical namespaces can process up to eight times more initial queries per second than flat buckets [2].

- Caching Issues: Poor caching strategies lead to repeated data retrieval, increasing latency and wasting bandwidth.

- Architectural Constraints: High CPU or memory usage, inefficient database queries, and disk or network utilisation issues can all cause bottlenecks. These challenges evolve as systems grow, requiring ongoing monitoring and adjustments [5].

Tools for Measuring Performance

Measuring cloud storage performance effectively requires the right tools and methods. Benchmarking is a vital yet complex process due to the interaction of I/O devices, caches, kernel processes, and other system components [6].

Popular tools include:

- fio: A flexible I/O tester for simulating different workloads.

- iostat: Reports CPU utilisation and per-device I/O statistics, which helps show how heavily each storage device is loaded.

- Comprehensive Monitoring Platforms: These provide real-time alerts and detailed performance insights [3][7].

When selecting a benchmarking tool, consider your specific requirements and budget [7]. To ensure reliable results:

- Define clear performance and scalability goals before starting.

- Use workloads that closely resemble your actual use cases.

- Document your test environment and methodology for reproducibility.

- Continuously reassess performance as your needs evolve [8].
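To make tests reproducible, it can help to generate the benchmark command from code and record it alongside the results. The following is a minimal sketch that only builds a fio command line; it does not run it. The file path, job name, and default values are hypothetical, and the `libaio` engine assumes a Linux host.

```python
import shlex


def build_fio_command(target_file: str, pattern: str = "randread",
                      block_size: str = "4k", size: str = "1G",
                      runtime_s: int = 60, iodepth: int = 32) -> list:
    """Build a fio argument list for a single time-based job."""
    return [
        "fio",
        "--name=cloud-storage-test",   # hypothetical job name
        f"--filename={target_file}",   # file or device to exercise
        f"--rw={pattern}",             # e.g. randread, randwrite, read, write
        f"--bs={block_size}",          # block size per I/O
        f"--size={size}",              # size of the test region
        f"--iodepth={iodepth}",        # requests kept in flight
        "--ioengine=libaio",           # Linux asynchronous I/O (assumption)
        "--direct=1",                  # bypass the page cache
        f"--runtime={runtime_s}",
        "--time_based",                # run for the full runtime
        "--output-format=json",        # machine-readable results
    ]


# Hypothetical test file on the mounted volume under test.
cmd = build_fio_command("/mnt/data/fio-test.bin")
print(shlex.join(cmd))
```

Varying `pattern` and `block_size` to resemble the real workload (small random reads for a database, large sequential reads for streaming) is usually more informative than a single generic run.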

For businesses in the UK aiming to optimise their cloud storage systems, Hokstad Consulting offers expert services in cloud infrastructure tuning and cost management. Their expertise can help organisations achieve better performance while keeping expenses under control.

File System Tuning Techniques

Improving cloud storage performance involves finding the right balance between speed, scalability, and cost. For businesses in the UK, achieving this balance is crucial, especially when dealing with diverse workloads. Below, we’ll explore practical approaches to optimise file systems for better results.

Caching Methods

Caching is a powerful way to boost file system performance by minimising the need to repeatedly fetch data from slower storage. Here are some effective caching strategies:

- Local caching: This method stores frequently accessed data closer to the application, cutting down on latency and reducing bandwidth use. It’s particularly useful for read-heavy tasks where the same files are accessed repeatedly.

- Memory-based caching: By keeping data in RAM, this approach delivers the fastest access times, making it ideal for applications like real-time analytics or interactive web services. However, it requires careful planning to avoid overloading memory resources.

- Multi-tier caching: This strategy creates a hierarchy of storage types. Frequently used (hot) data stays in high-speed storage, less critical (warm) data moves to medium-speed storage, and rarely accessed (cold) data is stored in more economical long-term storage. This method balances speed and cost effectively.

To get the most out of caching, it’s essential to optimise cache size. This ensures a good balance between cache hit rates and resource usage. Once caching is fine-tuned, the next step is to scale file systems to meet growing demands.
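The trade-off between cache size and hit rate can be explored with a small simulation. This is a minimal sketch using a least-recently-used (LRU) eviction policy, which is one common choice rather than the only one; `fetch_from_storage` and the access sequence are hypothetical stand-ins for a real backend and a real trace.

```python
from collections import OrderedDict


class LRUCache:
    """A small least-recently-used cache that counts hits and misses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, load):
        if key in self._items:
            self.hits += 1
            self._items.move_to_end(key)      # mark as most recently used
            return self._items[key]
        self.misses += 1
        value = load(key)                     # fall back to slower storage
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # evict the least recently used entry
        return value

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def fetch_from_storage(key: str) -> bytes:
    """Hypothetical stand-in for a slow read from cloud storage."""
    return key.encode()


# Replay the same access pattern against different cache sizes.
accesses = ["a", "b", "a", "c", "a", "b", "d", "a"]
hit_rates = {}
for capacity in (1, 2, 3):
    cache = LRUCache(capacity)
    for key in accesses:
        cache.get(key, fetch_from_storage)
    hit_rates[capacity] = cache.hit_rate

for capacity, rate in hit_rates.items():
    print(f"capacity={capacity}: hit rate {rate:.0%}")
```

Replaying a recorded access log through a model like this gives a first estimate of how much cache a workload needs before the hit rate stops improving.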

Scaling Methods

Scaling is critical for managing workload growth, and cloud file systems offer several approaches to address this.

- Vertical scaling: This involves upgrading a single server by adding more CPU, memory, or storage. It’s simple and works well for moderate growth but is limited by the hardware’s maximum capacity.

- Horizontal scaling: This method spreads workloads across multiple servers, offering virtually unlimited capacity and improved fault tolerance. While effective, it requires robust infrastructure and adds complexity.

- Diagonal scaling: Combining vertical and horizontal scaling, this approach provides flexibility to handle variable workloads. It’s a versatile option but demands careful planning and ongoing monitoring.

| Scaling Method | Description | Benefits | Limitations |
|---|---|---|---|
| Vertical Scaling | Adding capacity to a single server | Simple and direct | Limited by hardware, may cause downtime |
| Horizontal Scaling | Distributing workloads across multiple servers | Virtually unlimited capacity, fault-tolerant | More complex, requires strong infrastructure |
| Diagonal Scaling | Merging vertical and horizontal scaling | Flexible for changing workloads | Needs detailed planning and monitoring |

Scaling ensures systems can handle growth, but fine-tuning configurations can further enhance performance.

File System Configuration Settings

Proper configuration is the backbone of a well-performing file system. Here are some key settings to consider:

- Data tiering: This automatically moves data between storage classes based on how often it’s accessed or its age. Frequently used data stays on high-performance storage, while older data shifts to more cost-effective options [11][12].

- Compression and deduplication: These techniques reduce storage needs and speed up data transfers. Deduplication is particularly effective for organisations managing large amounts of repetitive data, like backups, as it eliminates duplicate copies, saving both storage space and bandwidth [9].

- Auto-scaling: This feature dynamically adjusts resources based on demand. It ensures businesses don’t over-provision during quiet periods while maintaining enough capacity during peak times [9][10].

- Right-sizing resources: By continuously monitoring usage patterns, businesses can adjust their resource allocations for optimal efficiency. Analytics tools can provide insights into usage trends and help fine-tune resource needs [9][10].

- Data lifecycle management: This automates the movement of data between storage classes based on set policies. For instance, data that hasn’t been accessed for 30 days can be moved to low-cost archive storage, keeping high-performance storage available for active data [9].
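The 30-day rule mentioned above can be expressed as a simple policy check. The sketch below is an assumption-laden illustration: `StoredObject`, the tier names, and the sample keys are hypothetical, and a real provider would normally apply such rules through its own lifecycle features rather than application code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class StoredObject:
    """Hypothetical record describing one object and its current tier."""
    key: str
    tier: str
    last_accessed: datetime


def plan_archive_moves(objects, now, max_idle=timedelta(days=30)):
    """Return keys of objects idle for at least max_idle that are not yet archived."""
    return [
        obj.key
        for obj in objects
        if obj.tier != "archive" and now - obj.last_accessed >= max_idle
    ]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
objects = [
    StoredObject("reports/2024-q3.csv", "standard", now - timedelta(days=120)),
    StoredObject("logs/today.log", "standard", now - timedelta(days=1)),
    StoredObject("backups/old.tar", "archive", now - timedelta(days=400)),
]

to_archive = plan_archive_moves(objects, now)
print(to_archive)
```

Keeping the threshold as a parameter makes it easy to test how many objects a stricter or looser policy would move before committing to it.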

For UK companies aiming to implement these techniques effectively, Hokstad Consulting offers expert guidance in cloud infrastructure and cost management. Their expertise helps organisations optimise performance while keeping costs under control across various cloud storage setups.

Cloud File System Solutions Comparison

Choosing the right cloud file system solution involves understanding how each provider excels in areas like performance, scalability, and management. Different solutions cater to diverse business needs and technical priorities, so weighing the options carefully is key.

Cloud File System Provider Comparison

Leading cloud providers bring unique strengths to the table, offering a mix of performance, integration, and cost benefits. Here's a closer look:

- Amazon S3: Known for its reliability and global reach, Amazon S3 offers a complex pricing system. With varied rates for storage classes, API calls, and data transfer, it's a great fit for organisations with strong technical expertise [15].

- Google Cloud Storage: Ideal for performance-intensive applications, it shines with fast data access and seamless integration within Google's ecosystem [15].

- OVHcloud Storage: A solid choice for UK organisations prioritising European data sovereignty, OVHcloud also provides competitive pricing within Europe [15].

- Backblaze B2: With the lowest storage costs, this is a favourite among budget-conscious, tech-savvy users [15].

- Azure Blob Storage: Perfect for businesses already invested in Microsoft's ecosystem, it offers strong integration and flexible pay-as-you-go pricing with enterprise discounts.

| Provider | Best For | Key Strength | Pricing Model |
|---|---|---|---|
| Amazon S3 | Enterprise reliability | Complex storage classes, global reach | Variable rates for storage, API calls, egress |
| Azure Blob Storage | Microsoft-focused businesses | Ecosystem integration | Pay-as-you-go with enterprise discounts |
| Google Cloud Storage | Performance-sensitive applications | Speed and Google integration | Competitive pricing with discounts |
| Backblaze B2 | Cost-conscious technical users | Lowest raw storage costs | Simple, transparent pricing |
| OVHcloud Storage | UK organisations | European data sovereignty | Competitive European pricing |

While selecting a provider is crucial, the management model you choose will also significantly impact your operations.

Self-Managed vs. Fully Managed File Systems

The decision between self-managed and fully managed file systems affects everything from operational control to resource demands.

Self-Managed Systems: These require organisations to handle setup, security, and maintenance themselves. While this approach offers full customisation and avoids vendor lock-in, it demands skilled IT teams and considerable time investment [13][14]. Scaling can also be tricky, as it involves manual configuration and significant upfront costs for infrastructure and licences [13].

Fully Managed Solutions: These offload operational tasks - like infrastructure setup, software updates, monitoring, and backups - to the provider. This allows businesses to focus on applications and strategy [14]. As Brad Kilshaw, Founder of Nivel Technologies, notes:

"DigitalOcean's Managed Databases have been a game-changer. They've done a fantastic job of removing the complexity of setting up, tuning, and securing databases for production use." [13]

Fully managed systems come with benefits like automatic scaling, advanced security, and 24/7 support, but they typically cost more and offer less control over the infrastructure [14].

Here’s how the two models compare:

- Cost: Self-managed systems may save money but require an in-house IT team, while fully managed services charge more for their convenience and comprehensive support [14].

- Security: Self-managed systems place full responsibility on the organisation, whereas fully managed solutions operate under a shared security model [14].

- Scalability: Fully managed services automatically scale resources, while self-managed systems rely on manual adjustments [14].

Your choice will shape how well your architecture adapts to your performance needs.

How Architecture Affects Tuning

The architecture you choose plays a critical role in optimising performance. Modern cloud systems eliminate traditional hardware constraints, offering flexibility, scalability, and cost efficiency [16].

- Geographic Distribution: By spreading data centres across regions, latency is reduced, and user experiences improve [16].

- Dynamic Resource Allocation: Cloud systems can adjust resources in real time, ensuring smooth performance during demand spikes [16].

- Advanced Monitoring: Tools provided by cloud platforms allow tracking of metrics like response times and resource usage, enabling smarter optimisation [16].

Adopting modern cloud architectures has shown measurable benefits. For example, organisations report 40% faster deployment cycles and 35% lower operational costs. Innovations like container management boost deployment efficiency by 55%, while auto-scaling reduces infrastructure expenses by 45% [17]. Additionally, edge computing has emerged as a major trend, cutting latency by 80% and improving user experience by 65% for global applications [17].

For UK businesses, evaluating these architectural options is critical. Hokstad Consulting, for instance, helps organisations understand how different cloud architectures impact tuning and implement strategies tailored to their needs.

Aligning your architecture with your optimisation strategies is key to achieving long-term performance and cost savings.

Long-Term Performance and Cost Management

Keeping systems running smoothly while managing expenses requires a thoughtful approach that grows with your business. The secret lies in setting up systems that adjust automatically to shifts in data patterns and usage demands, reducing the need for constant manual tweaks.

Automated Monitoring and Audits

Ongoing monitoring plays a crucial role in ensuring long-term performance. Today’s cloud platforms can track storage usage, data access frequency, and performance metrics in real time. These tools can detect performance dips before users even notice and flag areas where costs may be creeping up unnecessarily.

Automated alerts on key metrics like throughput rates, latency spikes, and storage usage trends allow teams to respond quickly to potential problems. Regular automated audits are also vital - they can pinpoint files sitting idle for long periods, offering opportunities to trim costs.

To stay ahead, it’s important to set performance baselines early on and revisit them every quarter. This helps differentiate between normal variations and actual problems. Automated reports summarising cost and performance trends can keep stakeholders informed and support better decision-making.
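One simple way to separate normal variation from real problems is to compare new samples against a stored baseline. The sketch below flags latency samples more than three standard deviations above the baseline mean. The threshold, function names, and sample values are assumptions for illustration; production monitoring tools typically offer more robust methods such as percentile-based alerts.

```python
from statistics import mean, stdev


def build_baseline(samples_ms):
    """Summarise a baseline period as (mean, sample standard deviation)."""
    return mean(samples_ms), stdev(samples_ms)


def find_spikes(samples_ms, baseline, sigmas=3.0):
    """Return (index, value) pairs for samples above mean + sigmas * stdev."""
    avg, spread = baseline
    threshold = avg + sigmas * spread
    return [(i, value) for i, value in enumerate(samples_ms) if value > threshold]


# Illustrative latency samples in milliseconds, not real measurements.
baseline_samples = [10.0, 12.0, 11.0, 9.0, 13.0, 10.0, 12.0, 11.0]
baseline = build_baseline(baseline_samples)

latest = [11.0, 12.5, 48.0, 10.5]
spikes = find_spikes(latest, baseline)
print(spikes)
```

Rebuilding the baseline at each quarterly review keeps the threshold aligned with how the workload actually behaves.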

Once monitoring is in place, the focus can shift to applying smart data management strategies to keep costs under control.

Cost Management Methods

Good monitoring doesn’t just maintain performance - it also supports timely adjustments to manage expenses. One of the best ways to strike a balance between cost and performance is by using automated data lifecycle management policies. These policies move older, less-accessed data to lower-cost storage tiers, cutting costs without compromising availability when the data is needed [18][19].

To make these policies work, you need a solid understanding of how your data is accessed and any compliance requirements.

Another area to watch is versioning and replication. Without careful planning, organisations can end up with unnecessary file versions or excessive backups, leading to ballooning storage costs [18]. Regular reviews of these policies ensure they match actual business demands rather than worst-case scenarios.

However, be cautious with archiving strategies. Accessing data from lower-cost archive tiers can come with high retrieval fees [18]. Analysing access patterns beforehand helps avoid surprises. Many cloud providers offer built-in cost management tools that provide detailed insights into storage spending and help identify areas for savings [18].
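A quick break-even calculation shows when retrieval fees cancel out the savings from an archive tier. All prices below are hypothetical placeholders rather than any provider's actual rates, and the sketch ignores request charges and minimum storage durations, which can also matter.

```python
def monthly_cost(size_gb, storage_price_per_gb,
                 retrieved_gb=0.0, retrieval_price_per_gb=0.0):
    """Monthly cost: storage charge plus any retrieval charge."""
    return size_gb * storage_price_per_gb + retrieved_gb * retrieval_price_per_gb


def break_even_retrieval_gb(size_gb, standard_price, archive_price, retrieval_price):
    """GB retrieved per month at which the archive tier stops being cheaper."""
    return size_gb * (standard_price - archive_price) / retrieval_price


# Hypothetical prices in pounds per GB; substitute your provider's real rates.
SIZE_GB = 1000
STANDARD_PRICE = 0.020
ARCHIVE_PRICE = 0.004
RETRIEVAL_PRICE = 0.030

break_even = break_even_retrieval_gb(SIZE_GB, STANDARD_PRICE, ARCHIVE_PRICE, RETRIEVAL_PRICE)
standard = monthly_cost(SIZE_GB, STANDARD_PRICE)
archive_heavy_use = monthly_cost(SIZE_GB, ARCHIVE_PRICE, retrieved_gb=800,
                                 retrieval_price_per_gb=RETRIEVAL_PRICE)

print(f"Break-even retrieval: {break_even:.0f} GB per month")
print(f"Standard tier: {standard:.2f}, archive with 800 GB retrieved: {archive_heavy_use:.2f}")
```

If measured access patterns show retrievals regularly above the break-even volume, the data probably belongs in a warmer tier.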

Building a cost-conscious culture across your organisation is equally important. Engineers, developers, and data scientists should understand how different storage classes and API calls affect expenses.

"Simply storing data isn't enough; efficiently managing it requires discipline." [18]

Aligning Tuning with Business Needs

Your file system configurations should evolve as your business grows. A setup that works for a small startup might not meet the needs of a larger organisation dealing with complex compliance requirements. Regularly reviewing and updating configurations ensures they keep pace with changing workloads and regulations.

As your applications mature and your user base grows, performance needs may shift. For instance, a system optimised for batch processing might need reconfiguration if real-time analytics become a priority. Similarly, expanding into new markets might require adjustments to data distribution and caching strategies.

Compliance is another factor to consider. New regulations or entering international markets - especially for UK businesses - can bring data sovereignty issues that might require architectural changes to your file system.

Striking the right balance between cost and performance is key. Regular cost–benefit analyses can help decide whether premium storage options are worth it or if alternative setups could achieve similar results at a lower cost.

For UK businesses looking to optimise both performance and cost, expert advice can make a big difference. Hokstad Consulting, for example, specialises in cloud cost engineering, helping organisations reduce cloud expenses by 30–50% without sacrificing performance. Their services include in-depth cloud cost audits and ongoing strategy development to ensure long-term efficiency.

The best organisations treat file system tuning as a continuous process rather than a one-off task. By combining robust monitoring, smart cost management strategies, and regular reviews, you can build storage systems that deliver high performance and scale effortlessly as your business grows.

Conclusion

Fine-tuning your file system for cloud storage can directly enhance operational efficiency and reduce costs. For instance, BentoML managed to cut large language model (LLM) loading times from over 20 minutes to just a few minutes by switching to JuiceFS [21]. Improvements like these aren't just technical wins - they translate into measurable cost savings, whether you're handling machine learning workloads or traditional data processing.

Effective file system tuning is about finding the balance between performance and cost [20]. It’s not simply a case of choosing the fastest or the cheapest solution; it’s about identifying what works best for your specific needs. Understanding your data - whether it’s frequently accessed ("hot") or rarely accessed ("cold"), its access patterns, and performance demands - is key to making informed choices that save money while meeting user expectations [20].

As discussed earlier, using hierarchical namespace buckets can significantly boost query performance, while file caching combined with parallel downloads can make model load times up to nine times faster [2]. These aren’t minor tweaks - they represent game-changing improvements that can redefine how your applications perform.

While standard storage rates may appear competitive, real savings come from smart data management. Techniques like AWS S3 Lifecycle policies, employing compression methods such as Zstandard or Brotli, and selecting the right storage tier based on access frequency can substantially lower storage costs [20]. Aligning these file system tuning strategies with cost management ensures long-term benefits in cloud storage efficiency.
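For illustration, an S3 lifecycle policy can be described as a plain data structure before it is applied. The sketch below builds one rule in the shape accepted by S3 lifecycle configuration; the prefix, day counts, and rule ID scheme are hypothetical choices, and the helper function is not part of any library.

```python
import json
from typing import Optional


def lifecycle_rule(prefix: str, transition_days: int, storage_class: str,
                   expire_days: Optional[int] = None) -> dict:
    """Build one lifecycle rule for objects under a key prefix."""
    rule = {
        "ID": f"tier-{prefix.strip('/') or 'all'}",   # hypothetical naming scheme
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": transition_days, "StorageClass": storage_class},
        ],
    }
    if expire_days is not None:
        rule["Expiration"] = {"Days": expire_days}
    return rule


# Example: move logs to infrequent-access storage after 30 days, delete after a year.
config = {"Rules": [lifecycle_rule("logs/", 30, "STANDARD_IA", expire_days=365)]}
print(json.dumps(config, indent=2))

# With boto3 installed and credentials configured, a structure like this could be
# applied with:
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="my-bucket", LifecycleConfiguration=config)
# where "my-bucket" is a placeholder bucket name.
```

Generating the policy from code keeps it reviewable and version-controlled, which helps when retention rules need to change for compliance reasons.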

For sustained optimisation, continuous monitoring and flexibility are essential. Cloud cost optimisation must also consider operational, compliance, security, and budgetary factors to achieve peak performance at minimal cost [22]. For businesses in the UK, this includes addressing data sovereignty, GDPR compliance, and local market conditions when designing your file system architecture.

Whether you’re managing a massive dataset - like the 3 trillion tokens in 01.AI’s latest project [21] - or handling everyday business data, the principles remain the same: understand your data, choose the right tools, and keep refining your approach. The examples and techniques shared in this guide provide a solid foundation, but the real value lies in applying them consistently and tailoring them to your unique needs.

For expert advice and tailored solutions, reach out to Hokstad Consulting. Let their expertise help you unlock the full potential of your cloud storage.

FAQs

How does file system tuning help UK businesses save money on cloud storage?

File system tuning offers a smart way for UK businesses to cut down on cloud storage expenses while boosting data efficiency. By fine-tuning aspects like cache management, block sizes, and tiered storage, companies can streamline operations and avoid unnecessary costs.

Take, for instance, consolidating small files into larger ones or implementing caching mechanisms. These steps can speed up data retrieval and reduce bandwidth consumption. The result? Faster performance and lower storage and transfer costs. With these tweaks, businesses can handle their cloud resources more efficiently, keeping budgets in check while improving overall system performance.
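Consolidating small files can be as simple as bundling them into one archive before upload, so that a single object replaces many. The following is a minimal in-memory sketch using Python's standard `tarfile` module; the file names and contents are made up, and whether bundling suits a workload depends on how often individual files need to be read back on their own.

```python
import io
import tarfile


def bundle_small_files(files: dict) -> bytes:
    """Pack a mapping of name -> bytes into one gzip-compressed tar archive."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for name, data in sorted(files.items()):
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


def unbundle(blob: bytes) -> dict:
    """Read every regular file back out of a bundle created above."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:gz") as archive:
        return {
            member.name: archive.extractfile(member).read()
            for member in archive.getmembers()
            if member.isfile()
        }


# 1,000 tiny hypothetical JSON files become a single object to upload.
small_files = {f"events/{i:04d}.json": b'{"ok": true}' for i in range(1000)}
bundle = bundle_small_files(small_files)
restored = unbundle(bundle)

print(f"{len(small_files)} files -> 1 object of {len(bundle)} bytes")
```

One upload request then replaces a thousand, which matters on services that charge per API call.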

What are the main differences between vertical, horizontal, and diagonal scaling in cloud file systems, and when should you use each?

When we talk about vertical scaling, it’s all about boosting the resources of a single server - think more CPU power or extra memory. This method works well for tasks that demand extra processing capacity but are restricted by the physical limits of the hardware.

On the other hand, horizontal scaling takes a different route. Instead of upgrading a single server, you add more servers or nodes to share the workload. This is a great solution for managing increasing demands over time, especially for tasks that can be split across multiple systems.

Then there’s diagonal scaling, which blends the best of both worlds. It allows you to enhance server resources while also adding new servers when needed. This hybrid approach is ideal for environments where workload demands can vary significantly.

What are IOPS, throughput, and latency, and how do they impact the performance of cloud storage solutions?

IOPS, Throughput, and Latency: Key Metrics for Cloud Storage Performance

When evaluating cloud storage systems, three performance metrics stand out: IOPS (Input/Output Operations Per Second), throughput, and latency. Together, they offer a clear picture of how efficiently the system operates.

- IOPS: This metric tracks how many read and write operations a storage system can handle each second. It’s particularly important for workloads involving frequent, smaller tasks, such as database queries or transaction processing.

- Throughput: Throughput measures the volume of data transferred per second, making it a critical factor for applications dealing with large datasets, like video streaming or big data analytics.

- Latency: Latency reflects the time delay before a data transfer begins. Essentially, it shows how quickly the system responds to a request, which is crucial for ensuring smooth user experiences.

These metrics are closely linked and influence each other. For instance, pushing a system close to its IOPS limit lengthens request queues, so latency rises just as demand peaks. Similarly, high latency caps throughput unless enough requests are kept in flight at once. Striking the right balance between these factors is key to achieving fast, dependable performance, and it also helps businesses avoid paying for capacity they cannot use.
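The link between latency and IOPS can be approximated with Little's law: in steady state, the number of requests in flight equals the completion rate multiplied by the time each request takes. The sketch below applies that relationship. The function name and figures are illustrative assumptions, and real devices also have hard IOPS and bandwidth limits that this ignores.

```python
def achievable_iops(requests_in_flight: int, latency_ms: float) -> float:
    """Steady-state IOPS for a given concurrency and per-request latency."""
    return requests_in_flight * 1000 / latency_ms


# One request at a time versus 32 in flight, at two latency levels.
serial_fast = achievable_iops(requests_in_flight=1, latency_ms=1.0)
parallel_fast = achievable_iops(requests_in_flight=32, latency_ms=1.0)
parallel_slow = achievable_iops(requests_in_flight=32, latency_ms=10.0)

print(f"1 in flight at 1 ms:   {serial_fast:.0f} IOPS")
print(f"32 in flight at 1 ms:  {parallel_fast:.0f} IOPS")
print(f"32 in flight at 10 ms: {parallel_slow:.0f} IOPS")
```

This is why a latency increase on a network-attached volume can reduce achieved IOPS even when the provisioned IOPS limit has not changed.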