Helm and CI/CD pipelines simplify Kubernetes deployments, making them faster, more consistent, and less error-prone. By automating the deployment process, you can reduce manual errors, save time, and manage resources more effectively. For UK businesses, this approach also supports compliance with regulations like GDPR and can cut cloud costs by up to 50%.

Key takeaways:

- Helm: A Kubernetes package manager that uses reusable charts for easy deployment and rollback.

- CI/CD: Automates testing and deployment, reducing errors and improving productivity.

- Integration: Helm and CI/CD together ensure consistent deployments, support GitOps practices, and enable quick rollbacks.

- Cost savings: Use autoscaling, spot instances, and resource cleanup to optimise cloud expenses.

- UK compliance: Automate security testing and maintain audit trails to meet GDPR and other regulations.

This article covers setting up Helm with CI/CD, best practices for Helm charts, and tips for managing costs and reliability.

Setting Up the Environment

Getting your environment ready with the right tools and configurations is crucial. It saves time, avoids headaches, and helps keep your system secure.

Prerequisites for Integration

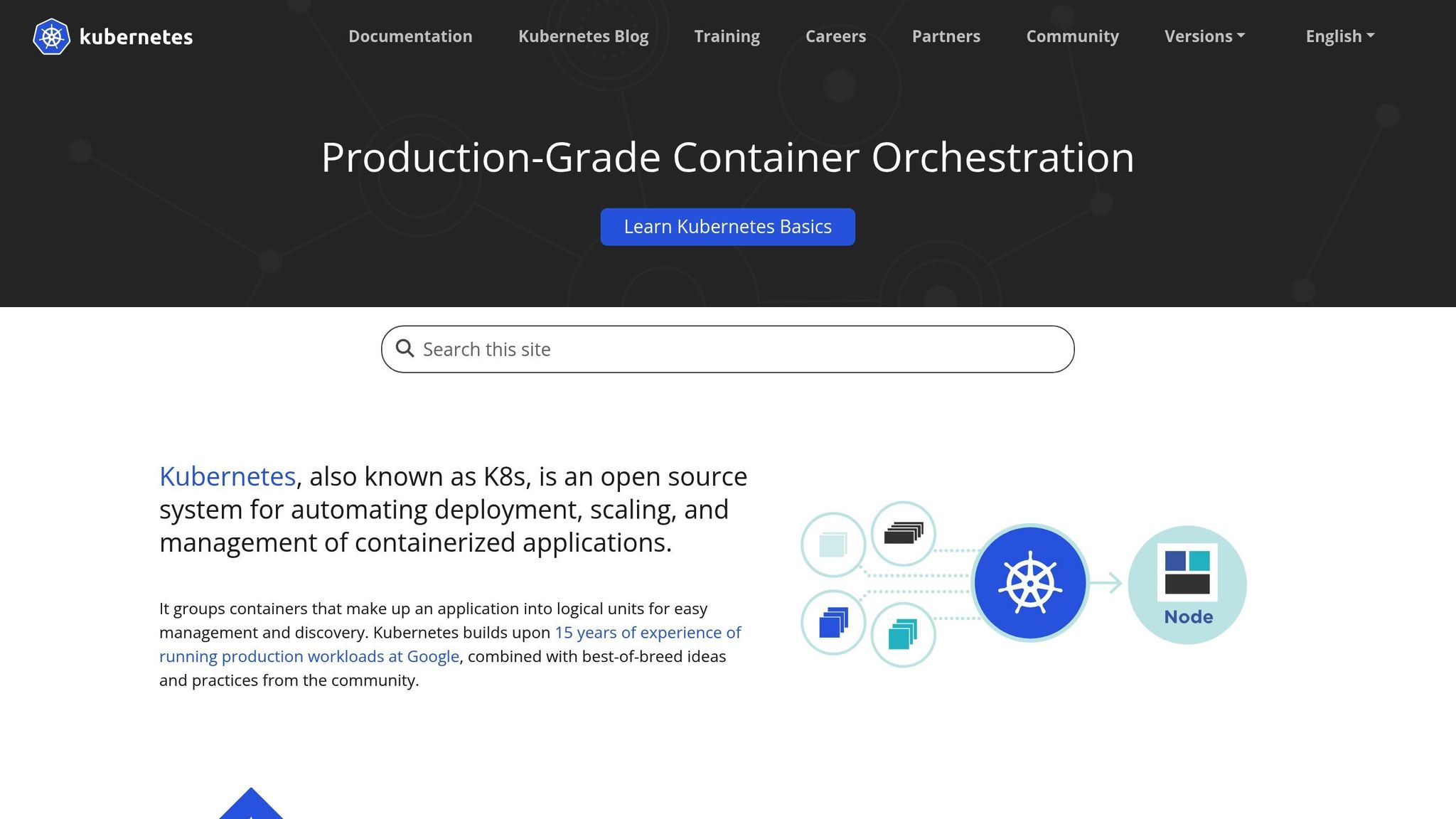

For a smooth Helm CI/CD integration, several components need to work together seamlessly. First, ensure you have a functional Kubernetes cluster. This could be hosted on platforms like AWS EKS, Google GKE, Azure AKS, or even set up on-premises.

Next, install and configure Helm on your local machine. Helm simplifies deploying applications to your Kubernetes cluster, making it an essential tool.

You'll also need to pick a CI/CD platform that fits your workflow. Options include GitLab CI, CircleCI, Jenkins, or Azure DevOps. A container registry is equally important for storing Docker images. Popular choices are Azure Container Registry, Docker Hub, or AWS ECR. Ensure this registry is accessible from both your CI/CD environment and your Kubernetes cluster.

To enhance security, integrate container image scanning tools. These help identify vulnerabilities before deployment [1]. With these elements in place, you can move on to configuring your CI/CD environment.

Configuring the CI/CD Environment

Start by setting up a dedicated Git repository for your Helm charts and CI/CD configurations. This repository acts as the single source of truth, aligning with GitOps principles.

Deploy CI/CD runners within your Kubernetes cluster for better performance. For instance, you can use Helm to deploy GitLab Runner. Make sure to use a ServiceAccount with restricted RBAC permissions for added security, and manage CI/CD variables like base64-encoded Kubeconfig files securely.
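As a minimal sketch of handling a base64-encoded kubeconfig inside a CI job, the snippet below decodes the variable and writes it to a file that only the job's user can read. The variable name `KUBECONFIG_B64` and the function name are assumptions for illustration, not something a particular CI platform defines.

```python
import base64
import os
import tempfile
from pathlib import Path
from typing import Optional


def write_kubeconfig(env_var: str = "KUBECONFIG_B64", target_dir: Optional[str] = None) -> Path:
    """Decode a base64-encoded kubeconfig from the environment into a private file.

    KUBECONFIG_B64 is a hypothetical variable name; use whatever your CI platform stores.
    """
    encoded = os.environ.get(env_var)
    if not encoded:
        raise RuntimeError(f"CI variable {env_var} is not set")
    data = base64.b64decode(encoded)
    directory = Path(target_dir or tempfile.mkdtemp(prefix="kube-"))
    path = directory / "config"
    # Request owner-only permissions (0600) when the file is created.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(data)
    return path
```

A job would then point the `KUBECONFIG` environment variable at the returned path before calling Helm, and delete the file when the job ends.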

GitHub Actions can simplify Kubernetes deployments by offering prebuilt components to build Docker images, push them to registries, and install Helm charts. As your projects and environments grow, consider using pull-based GitOps tools like Argo CD or Flux CD to scale effectively.

Security should also be a priority. Automate security testing by incorporating tools that scan container images for vulnerabilities. Utilities like Kube-Linter can help identify misconfigurations in Kubernetes manifests. Once your CI/CD environment is secure, it's time to address compliance requirements specific to the UK.

UK-Specific Compliance Requirements

In the UK, secure CI/CD configurations must also meet strict data protection and auditing standards. The Data Protection Act 2018, which sits alongside the UK GDPR, is the cornerstone of these regulations [4]. Any pipeline that touches personal data must handle it carefully and maintain detailed audit trails.

Encrypt data both in transit and at rest, use secure protocols like SFTP and SSH, and ensure audit logs are detailed and accessible. Implement robust user authentication measures, such as two-factor authentication, to strengthen security.

If your organisation deals with large amounts of personal data, appoint a Data Protection Officer (DPO) to oversee CI/CD processes and ensure compliance with UK laws [4].

Cost management is another factor to consider. Cloud infrastructure costs can spiral if not managed properly. Efficient CI/CD practices can help by minimising deployment times and preventing unnecessary resource usage.

Regular audits of your CI/CD setup are essential. These should cover deployment processes, credential management, and data handling practices [4]. If your organisation operates critical infrastructure, the Network and Information Systems Regulations 2018 (NIS Regulations) may also apply. These regulations require additional security measures and incident-reporting protocols [4].

Proper credential management is crucial. Store credentials securely using environment variables or vaults, rotate them regularly, and enforce multifactor authentication for administrative access.

Lastly, logging and monitoring are indispensable. Your CI/CD pipeline should produce detailed audit logs that record who deployed what, when, and from where. These logs should be retained in line with UK data retention rules.
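One way to capture "who deployed what, when, and from where" is to emit a structured record for every deployment. The sketch below is only an illustration: the field names are assumptions, and a real pipeline would normally take the actor and source from its CI platform's own metadata rather than from the local machine.

```python
import getpass
import json
import socket
from datetime import datetime, timezone
from typing import Optional


def audit_record(release: str, chart_version: str, image_tag: str, environment: str,
                 actor: Optional[str] = None, source: Optional[str] = None,
                 now: Optional[datetime] = None) -> str:
    """Return one JSON line describing a deployment (field names are illustrative)."""
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),  # when
        "actor": actor or getpass.getuser(),                           # who
        "source": source or socket.gethostname(),                      # from where
        "release": release,                                            # what
        "chart_version": chart_version,
        "image_tag": image_tag,
        "environment": environment,
    }
    return json.dumps(record, sort_keys=True)
```

Writing one such line per deployment to an append-only log store keeps the trail easy to search and to retain for whatever period your policy requires.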

With your environment set up and compliance measures in place, you're ready to start building and customising Helm charts for seamless integration into your automated deployment pipeline.

Creating and Customising Helm Charts

Building Helm charts that are both effective and easy to maintain starts with understanding their structure and following best practices. A well-organised chart simplifies deployment automation and reduces maintenance headaches over time.

Helm Chart Structure Explained

A Helm chart consists of a specific directory structure containing all the elements needed for deploying an application on Kubernetes. It defines how your application should operate within the cluster.

At the root of the chart, the Chart.yaml file provides metadata such as the chart's name, version, a description, and the application version it supports. If you're targeting Helm 3, set the apiVersion field to v2. Additionally, you can specify the supported Kubernetes versions using the kubeVersion field, following semantic versioning rules.

The values.yaml file contains default configuration values, like image repositories, resource limits, and environment-specific settings. These defaults can be overridden at installation, allowing the chart to adapt to different environments.
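To illustrate how overrides layer on top of defaults, here is a simplified Python model of the merge: nested maps are combined key by key, and anything else is replaced by the override. It is an approximation for explanation only. It does not cover every Helm rule (for example, removing a default by setting it to null), and the keys shown are hypothetical.

```python
from copy import deepcopy


def merge_values(base: dict, override: dict) -> dict:
    """Return base with override layered on top, merging nested maps recursively."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are maps: merge them key by key.
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists are replaced wholesale by the override.
            merged[key] = deepcopy(value)
    return merged


# Hypothetical chart defaults and a production override.
defaults = {"image": {"repository": "registry.example.com/web", "tag": "1.0.0"}, "replicaCount": 1}
production = {"image": {"tag": "3f2a9c1"}, "replicaCount": 3}
effective = merge_values(defaults, production)
```

Here `effective` keeps the default image repository while taking the tag and replica count from the override, which is the behaviour that lets one chart serve several environments.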

Inside the templates/ directory, you'll find Kubernetes manifest templates written in Go templating. These templates, combined with the values file, generate valid Kubernetes resource definitions. Common files here include deployment, service, and ingress templates, along with a _helpers.tpl file for reusable template functions.

Here’s a typical Helm chart structure:

| File/Directory | Purpose |
|---|---|
| Chart.yaml | Contains metadata like name, version, and dependencies |
| values.yaml | Defines default configuration values for templates |
| templates/ | Holds Kubernetes manifest templates written in Go templating |
| charts/ | Stores subcharts for related applications |
| crds/ | Contains static Custom Resource Definitions |
| .helmignore | Specifies files to exclude when packaging the chart |

The charts/ directory is used for subcharts that support the main application (e.g., a database for a web app), while the crds/ directory holds static Custom Resource Definitions: plain YAML files that Helm installs as-is, without passing them through the template engine.

Understanding this structure is just the first step. To create charts that are both flexible and reliable, you’ll need to follow a few key best practices.

Best Practices for Helm Chart Creation

Adopting these practices can make your Helm charts easier to maintain and scale:

Keep charts modular and focused. Instead of creating one large chart for an entire application, break it into smaller, purpose-specific charts. This approach simplifies testing, debugging, and reusing components.

Use library charts for shared components. If you find yourself repeating templates across multiple charts, consolidate them into a library chart. For example, shared sidecars or init containers can be managed centrally, applying the DRY (Don't Repeat Yourself) principle.

Separate values files for environments. Create distinct values files (e.g., values-dev.yaml, values-staging.yaml, values-prod.yaml) for each environment. This setup ensures smooth transitions between environments while keeping configurations clear.

Write efficient templates. Use Go templating effectively by quoting strings but leaving integers unquoted. The include function allows you to embed templates, while the required function makes rendering fail when a critical value is missing.

Test thoroughly. Start with helm lint to catch common errors, then use helm test to run the chart's test hooks against a deployed release.

Provide clear interfaces. Your charts should offer a straightforward way for users to customise settings without delving into the chart's internals. As noted in Design Patterns: Elements of Reusable Object-Oriented Software:

The design of reusable libraries should provide clear and simple interfaces, allowing users to interact with them without needing to understand their internal details.

By following these practices, you’ll ensure your charts are not only functional but also user-friendly and adaptable.
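The per-environment values files described above can be wired into a pipeline with a small helper that builds the Helm command line. This is a sketch under stated assumptions: the environment names, the one-namespace-per-environment layout, and the `image.tag` values key are all hypothetical and would need to match your own chart.

```python
from typing import List

# Assumed environment names, matching the values-<env>.yaml naming convention.
ENVIRONMENTS = ("dev", "staging", "prod")


def helm_deploy_args(release: str, chart_dir: str, environment: str, image_tag: str) -> List[str]:
    """Build a `helm upgrade --install` command line for one environment."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    return [
        "helm", "upgrade", "--install", release, chart_dir,
        # Assumption: one namespace per environment, named after it.
        "--namespace", environment,
        # The chart's own values.yaml is loaded automatically; this file layers on top.
        "--values", f"{chart_dir}/values-{environment}.yaml",
        # Assumption: the chart reads the image tag from image.tag.
        "--set", f"image.tag={image_tag}",
    ]
```

Returning an argument list rather than a shell string means the result can be passed straight to `subprocess.run` without worrying about shell quoting.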

Maintaining and Updating Charts

Regular maintenance is essential to keep your Helm charts secure and compatible with evolving Kubernetes standards. Here’s how to stay on top of updates:

Use semantic versioning and maintain a changelog. Follow the major.minor.patch format to signal the impact of updates. For example, major versions indicate breaking changes, minor versions add features, and patches address bugs. A compatibility matrix can help track dependencies and changes.

Automate updates with CI/CD pipelines. Incorporating tools like Flux or Argo CD into your CI/CD processes can streamline updates, reduce errors, and support features like automated testing, canary deployments, and rollbacks.

Manage dependencies effectively. Use Helm's dependency commands to handle subchart relationships. Tools like Helmfile can help manage complex dependencies across multiple charts.

Scan for vulnerabilities. Regularly check charts, images, and configurations using tools like Trivy, Clair, or Anchore Engine to ensure security.

Provide detailed documentation. Include README files, usage examples, and troubleshooting guides. Clear documentation lowers support demands and encourages wider usage.

Finally, keep an eye on Kubernetes API changes. As older APIs are deprecated, updating your charts ensures they remain functional and aligned with the latest Kubernetes standards.
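The major.minor.patch rule mentioned above is simple enough to automate in a release job. The following is a minimal sketch that handles plain three-part versions only. Pre-release and build suffixes are deliberately out of scope, and the function name is illustrative.

```python
def bump_version(version: str, change: str) -> str:
    """Return the next chart version for a 'major', 'minor', or 'patch' change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":   # breaking change: reset minor and patch
        return f"{major + 1}.0.0"
    if change == "minor":   # new backwards-compatible feature: reset patch
        return f"{major}.{minor + 1}.0"
    if change == "patch":   # bug fix only
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")
```

For example, a bug fix to chart version 1.4.2 yields 1.4.3, while a breaking change yields 2.0.0.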

Adding Helm to CI/CD Pipelines

Bringing Helm into your CI/CD pipelines can turn tedious manual deployments into smooth, automated workflows. This integration helps ensure your Kubernetes applications are deployed consistently across different environments, reducing both errors and deployment times.

Automating Helm Commands in Pipelines

The backbone of effective Helm automation is scripting the right commands at each pipeline stage. Most CI/CD workflows follow a common structure: build the application, package it into a container, push it to a registry, and then deploy using Helm.

A streamlined workflow typically starts by determining whether a fresh install or an upgrade is required. Instead of hardcoding these decisions, use a script to automatically detect the state of the release and execute the appropriate Helm command. This avoids issues like trying to install a release that already exists.
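A hedged sketch of that detection step: it treats a successful `helm status` as evidence that the release exists and picks the command accordingly. The function names are illustrative, and the command runner is injectable so the logic can be exercised without a cluster. Note that `helm upgrade --install` performs the same install-or-upgrade decision inside Helm itself, which is often the simpler choice.

```python
import subprocess
from typing import Callable, List


def release_exists(release: str, namespace: str, run: Callable = subprocess.run) -> bool:
    """Return True when `helm status` succeeds for the release.

    A non-zero exit can also mean the cluster is unreachable, so a production
    script should inspect the error output as well.
    """
    result = run(["helm", "status", release, "--namespace", namespace],
                 capture_output=True, text=True)
    return result.returncode == 0


def deploy_args(release: str, chart: str, namespace: str, exists: bool) -> List[str]:
    """Choose `helm upgrade` for an existing release and `helm install` otherwise."""
    action = "upgrade" if exists else "install"
    return ["helm", action, release, chart, "--namespace", namespace]
```

A pipeline step would call `release_exists` first and pass its result to `deploy_args`.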

When triggered, the pipeline handles the container build, pushes it to a registry, and runs Helm commands with updated parameters to deploy the latest application version using the newest chart release [3].

For instance, a Site Reliability Engineering (SRE) team used GitHub Actions and Helm to manage microservice deployments with custom runners and parameterised configurations [2]. Tools like CircleCI orbs can also simplify pipeline setups by bundling common Helm tasks [3]. Additionally, separating chart validation from deployment tasks - by running commands like helm lint and helm template earlier in the pipeline - can catch configuration issues before they escalate.
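Running helm lint and helm template as a separate, early stage might look like the sketch below. The release name `ci-check` passed to `helm template` is a placeholder, and the runner is injectable so the fail-fast behaviour can be checked without Helm installed.

```python
import subprocess
from typing import Callable, List, Sequence


def validation_commands(chart_dir: str, values_file: str) -> List[List[str]]:
    """Commands for a validation stage that runs before any deployment."""
    return [
        ["helm", "lint", chart_dir, "--values", values_file],
        # "ci-check" is a placeholder release name used only for rendering.
        ["helm", "template", "ci-check", chart_dir, "--values", values_file],
    ]


def run_validation(commands: Sequence[Sequence[str]], run: Callable = subprocess.run) -> bool:
    """Run each command in order and stop at the first failure."""
    for command in commands:
        if run(command).returncode != 0:
            return False
    return True
```

Because the stage stops at the first failing command, a chart that does not lint cleanly never reaches rendering or deployment.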

This level of automation ensures deployments remain consistent across all environments.

Managing Secrets and Environment Variables

Once automation is in place, securing sensitive information becomes a top priority. Proper management of secrets in Helm-based CI/CD pipelines requires careful planning and the right tools.

Avoid storing sensitive data, like API keys or passwords, in plain text within your repositories or pipeline configurations. Instead, use encrypted environment variables in line with twelve-factor principles [5].

For advanced secret management, external tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault are excellent options. These tools provide features like automatic secret rotation and audit logging. For example, an SRE team used custom scripts in GitHub Actions to fetch secrets from a dedicated manager, keeping sensitive information secure and separate from the codebase [2].

Rotating secrets regularly, such as updating access keys, further strengthens security. Applying the principle of least privilege ensures users and applications only access what’s necessary for their roles. Monitoring and auditing secret access can help detect and address anomalies quickly.

For Helm-specific secret management, tools like helm-secrets or the External Secrets Operator are valuable. The helm-secrets plugin allows you to encrypt sensitive data or reference cloud-managed secrets within your values files. Meanwhile, the External Secrets Operator fetches secrets from external systems without embedding them directly in Helm templates.
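Whichever secret store you use, the pipeline script usually ends up reading values from its environment. The sketch below shows two small safeguards: failing early when a secret is missing, and masking secret values before anything is logged. The variable names are hypothetical, and this complements rather than replaces tools such as helm-secrets or the External Secrets Operator.

```python
import os
from typing import Dict, Iterable, Mapping


def load_secrets(names: Iterable[str], env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read required secrets from the environment, failing fast if any are absent."""
    names = list(names)
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing secret variables: " + ", ".join(missing))
    return {name: env[name] for name in names}


def redact(text: str, secrets: Mapping[str, str]) -> str:
    """Mask every secret value before the text is written to a log."""
    for value in secrets.values():
        text = text.replace(value, "***")
    return text
```

Passing command output through `redact` before printing it reduces the chance of a credential ending up in build logs.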

CI/CD Tools for Helm Integration

With automation and security in place, choosing the right CI/CD platform is the next step. Several tools are well-suited for Kubernetes deployments and integrate seamlessly with Helm:

| Tool | Key Strengths | Helm Integration Features |
|---|---|---|
| Jenkins | Extensive plugin ecosystem | Dedicated Helm plugins and flexible pipeline scripting |
| GitLab CI | Built-in Git repository and CI/CD | Kubernetes integration and Helm chart deployment templates |
| GitHub Actions | Deep GitHub integration | Helm-specific actions, secure secret management, custom runners |
| Tekton | Kubernetes-native, cloud-agnostic | Smooth integration with Helm commands |
| CircleCI | Fast execution, Docker-focused | Simplified configuration with Helm orbs |

Jenkins, being widely used, offers a robust open-source solution for end-to-end CI/CD pipelines [6]. Tekton runs entirely within Kubernetes clusters, removing the need for external CI/CD infrastructure [6]. GitLab combines source control with CI/CD capabilities, and you can even deploy GitLab on Kubernetes using Helm to maintain consistency [6].

When building your CI/CD workflows, decide between push-based and pull-based models. Push-based workflows require your CI/CD platform to have certificates and credentials to access the Kubernetes cluster. In contrast, pull-based workflows, such as those enabled by GitOps tools like Argo CD, use an in-cluster agent and Git repositories as the single source of truth [1][2].

To optimise Helm integration, you can set Kubernetes resource quotas, create dedicated namespaces, and enable autoscaling to handle peak pipeline usage [6]. Enhancing your CI/CD framework with best practices - like scanning container images for vulnerabilities, implementing rollback mechanisms, and using immutable image tags - further strengthens your deployment process [1].

Cost and Reliability Optimisation with Automation

By leveraging CI/CD integration and Helm chart practices, UK businesses can transform their approach to managing cloud infrastructure. Automation through tools like Helm and CI/CD pipelines not only helps to cut unnecessary costs but also ensures consistent and reliable deployments. This combination lays the groundwork for reducing waste, avoiding downtime, and achieving measurable savings.

Reducing Cloud Costs with Automation

When it comes to cutting Kubernetes infrastructure costs, automation is a game-changer. The secret lies in dynamic resource management - allocating resources based on actual demand instead of static configurations.

One of the key strategies is autoscaling and rightsizing. Horizontal Pod Autoscaling (HPA) adjusts the number of pods dynamically based on CPU or memory usage, while Vertical Pod Autoscaling (VPA) fine-tunes resource requests for individual pods. Together, they help prevent overprovisioning, one of the biggest sources of wasted resources. The two are generally not pointed at the same CPU or memory metric for the same workload, since their adjustments can conflict.
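To make the HPA behaviour concrete, the Kubernetes documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping any change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that core rule. It leaves out minimum and maximum replica bounds, stabilisation windows, and pod readiness handling, and the function name is illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, without min/max bounds or stabilisation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    return math.ceil(current_replicas * ratio)
```

With 4 replicas averaging 90% CPU against a 60% target, the rule asks for 6 replicas. At 30% it scales down to 2, and at 63% it leaves the count unchanged because the ratio falls inside the tolerance.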

For workloads that can tolerate interruptions, spot instances are a cost-effective option. Spot instances on Amazon EKS, for example, can cut compute costs by up to 90% compared with on-demand pricing, and GKE Spot VMs offer similar savings of up to 91% [7][9]. For UK businesses running batch jobs or development environments, these savings can make a noticeable difference in annual budgets.

Another important practice is automated resource cleanup. By integrating scripts into your CI/CD pipelines to identify and remove unused resources - like persistent volumes, load balancers, or idle compute instances - you ensure you’re only paying for what your applications actively use.

A real-world example of this is IronScales, which reduced its Kubernetes costs by 21% while maintaining service-level agreements (SLAs) by collaborating with experienced partners [8]. Their success underscores how automation can simultaneously lower expenses and enhance reliability.

To maintain even tighter control, resource quotas and namespace management offer a way to set precise limits on CPU, memory, and storage usage within specific namespaces. This approach is particularly effective in multi-tenant environments, where runaway processes could otherwise lead to unexpected bills.
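As a rough illustration of how a CPU quota constrains a namespace, the sketch below adds up pod CPU requests and compares the total with a quota. It understands only the two most common CPU notations (whole or decimal cores such as "2", and millicores such as "500m"). The function names are hypothetical, and the real enforcement is done by Kubernetes itself through ResourceQuota objects.

```python
from typing import Iterable


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '500m' or '2' into millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def fits_quota(pod_cpu_requests: Iterable[str], quota_cpu: str) -> bool:
    """Return True when the summed CPU requests stay within the quota."""
    total = sum(cpu_millicores(request) for request in pod_cpu_requests)
    return total <= cpu_millicores(quota_cpu)
```

A pre-deployment check like this can flag a release that would exceed its namespace's budget before the cluster rejects it.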

These cost-saving measures also naturally improve deployment reliability, as we’ll explore next.

Improving Deployment Reliability

Reliable deployments depend on automated processes that validate, test, and roll back changes when necessary. These safeguards ensure issues are caught before they can disrupt production systems.

Automated testing and validation should be baked into every stage of your CI/CD pipeline. Running configuration checks and manifest validation before deploying code helps maintain stability and prevent errors from creeping into live environments.

"Using CI/CD with Kubernetes allows you to automate deployments, rapidly scale your services, and be confident that all live code has passed your test suite." [1] - James Walker, Founder, Heron Web

Another best practice is using immutable image tags - such as commit SHAs instead of generic tags like 'latest'. This ensures deployments are reproducible and makes rolling back to a previous version straightforward.
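A pipeline can enforce this by refusing to build an image reference from anything that does not look like a commit SHA. The sketch below assumes tags are lowercase hexadecimal Git SHAs of 7 to 40 characters. The registry name in the usage note is a placeholder.

```python
import re

# Assumption: tags are abbreviated or full lowercase Git commit SHAs.
_COMMIT_SHA = re.compile(r"[0-9a-f]{7,40}")


def image_reference(repository: str, commit_sha: str) -> str:
    """Return repository:tag, rejecting mutable tags such as 'latest'."""
    if not _COMMIT_SHA.fullmatch(commit_sha):
        raise ValueError(f"not a commit SHA: {commit_sha!r}")
    return f"{repository}:{commit_sha}"
```

Calling `image_reference("registry.example.com/web", "3f2a9c1")` returns a pinned reference, whereas passing "latest" raises an error before anything is deployed.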

GitOps and drift detection are essential for maintaining consistency between your desired and actual system configurations. By storing infrastructure configurations in source control and using automated tools to detect changes or drift in the live cluster, you can quickly identify and resolve unauthorised changes or mismatched settings.
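Conceptually, drift detection is a comparison between the configuration stored in Git and what the cluster reports. The sketch below compares two nested dictionaries and lists the differences. It is a teaching aid with illustrative names only: tools such as Argo CD and Flux CD handle server-side defaults, status fields, and ignore rules that this simple comparison does not.

```python
from typing import List


def find_drift(desired: dict, live: dict, prefix: str = "") -> List[str]:
    """List the paths where the live configuration differs from the desired one."""
    drift = []
    for key in sorted(set(desired) | set(live)):
        path = f"{prefix}.{key}" if prefix else str(key)
        if key not in live:
            drift.append(f"{path}: missing from cluster")
        elif key not in desired:
            drift.append(f"{path}: not in Git")
        elif isinstance(desired[key], dict) and isinstance(live[key], dict):
            # Recurse into nested maps so the report names the exact field.
            drift.extend(find_drift(desired[key], live[key], path))
        elif desired[key] != live[key]:
            drift.append(f"{path}: expected {desired[key]!r}, found {live[key]!r}")
    return drift
```

If Git says three replicas and someone has manually scaled the deployment to five, the report contains a single entry for `spec.replicas`.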

When things do go wrong, rollback mechanisms act as a safety net. By setting up automated rollback triggers tied to system health indicators, your CI/CD pipeline can revert to a stable version without requiring manual intervention.
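A health-triggered rollback can be sketched as two pieces: a loop that polls a health check a limited number of times, and the `helm rollback` command to run if the check never passes. The health check itself is left as a callable you supply, and the names and retry counts here are assumptions for illustration.

```python
import time
from typing import Callable, List


def wait_until_healthy(check: Callable[[], bool], attempts: int = 5,
                       delay_seconds: float = 10.0,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll a health check until it passes or the attempts run out."""
    for _ in range(attempts):
        if check():
            return True
        sleep(delay_seconds)
    return False


def rollback_args(release: str, namespace: str, previous_revision: int) -> List[str]:
    """Build the `helm rollback` command for a known-good revision."""
    return ["helm", "rollback", release, str(previous_revision),
            "--namespace", namespace, "--wait"]
```

A deploy step would record the current revision before upgrading, call `wait_until_healthy` afterwards, and run the rollback command only if it returns False.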

Finally, environment-specific validation ensures that deployments meet the unique requirements of each stage - whether it’s development, staging, or production. Using separate Helm values files for each environment, along with automated testing, helps verify functionality at each step before moving forward.

Expert Consulting Services

For UK businesses looking to maximise the benefits of automation, expert consulting services can provide tailored solutions to optimise costs and reliability.

Hokstad Consulting specialises in helping businesses streamline their DevOps processes and reduce cloud infrastructure expenses. Their services often deliver savings of 30–50% while improving deployment speed and reliability. They go beyond basic adjustments, offering advanced cost management strategies like automated resource rightsizing, spot instance optimisation, and custom monitoring tools that give real-time insights into spending patterns.

What sets Hokstad Consulting apart is their performance-based fee model. You only pay when actual cost reductions are achieved, with fees capped at a percentage of the savings delivered. This ensures their goals align directly with yours.

Their strategic cloud migration services help businesses transition to more cost-efficient architectures with minimal disruption. This includes migrating applications to Kubernetes, implementing Helm-based deployment strategies, and setting up automated scaling policies that match resources to demand.

For ongoing support, Hokstad offers a retainer model that provides flexibility for infrastructure monitoring, performance optimisation, and security audits. This ensures your automated systems remain efficient and adaptable as your business evolves.

Conclusion

Integrating Helm with CI/CD gives UK organisations a way to manage Kubernetes deployments with more speed, consistency, and cost control. Together, the two tools reduce the effort involved in scaling and managing containerised applications.

By automating deployments, teams can release updates faster and collaborate more effectively, moving closer to continuous delivery. Helm's reproducible packaging ensures consistent environments, while CI/CD automation minimises manual errors and shortens feedback cycles, creating a smoother development process [10][11].

The financial benefits are just as compelling. Automation can reduce cloud costs by 30–50%, thanks to smarter resource management, automated scaling, and less reliance on manual intervention [11].

Reliability also gets a boost. Automated rollbacks and version-controlled deployments ensure stability, while GitOps practices provide the audit trails needed to meet UK compliance standards [11].

To make the most of these advantages, start with team training and a phased approach to automation. Expert guidance can ease the transition and maximise the benefits. For tailored support, Hokstad Consulting offers expertise in optimising DevOps workflows and cutting cloud expenses.

When paired, Helm and CI/CD become powerful tools, enabling businesses to innovate confidently while maintaining control over costs and ensuring operational reliability.

FAQs

How does using Helm in CI/CD pipelines improve Kubernetes deployments?

Integrating Helm into your CI/CD pipelines simplifies Kubernetes deployments by automating routine tasks, cutting down on errors, and maintaining consistency across different environments. With Helm charts, managing complex applications becomes much easier, allowing for seamless updates and effortless scaling of services.

Using Helm also fosters better collaboration within teams. Standardised and predictable configurations streamline workflows, speeding up deployment cycles while improving reliability. This ensures your Kubernetes applications are rolled out efficiently and with reduced risk.

How can I ensure compliance with UK standards when automating Kubernetes deployments using Helm?

To align with UK standards when automating Kubernetes deployments with Helm, it's crucial to adopt robust security measures. Start by enabling Role-Based Access Control (RBAC) to manage permissions effectively, configure network policies to control traffic flow, and ensure detailed audit logs are maintained for tracking activities. Regular updates to Helm charts, combined with verifying their sources, are also key steps to reduce the risk of vulnerabilities.

Using GitOps workflows can bring consistency and transparency to your deployment process, making it easier to trace changes. Additionally, conducting regular security scans allows you to identify and address risks before they escalate. These practices help create a Kubernetes environment that is both secure and compliant with UK-specific requirements.

How can businesses optimise cloud costs and ensure reliable deployments using Helm and CI/CD automation?

Businesses can manage cloud expenses effectively and maintain dependable deployments by incorporating Helm charts into their CI/CD pipelines. This method streamlines intricate Kubernetes deployments, reduces the likelihood of manual mistakes, and enables continuous testing and rollback options, boosting overall operational efficiency.

For optimal outcomes, it's worth implementing practices like GitOps workflows, container image scanning, and automated rollback mechanisms. These techniques ensure consistent deployments, strengthen security measures, and reduce wasteful resource use - leading to better cost management and more reliable operations.