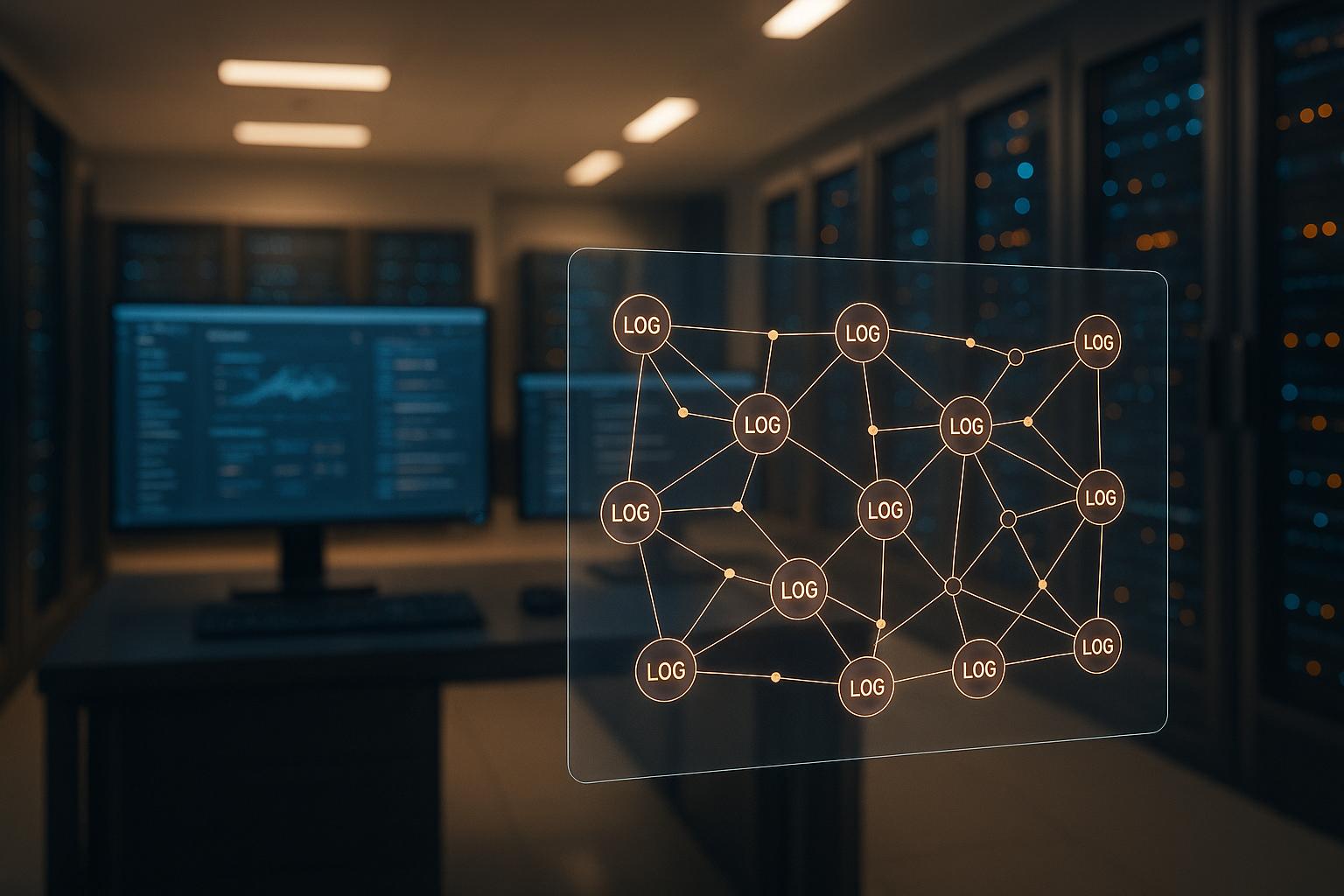

Managing logs in Kubernetes is tricky because containerised workloads generate logs across multiple nodes and services. Without centralised log aggregation, troubleshooting becomes time-consuming, and storage costs can escalate. For UK businesses, the UK GDPR adds another layer of complexity: logs that contain personal data need strict controls, and serious breaches can attract fines of up to £17.5 million or 4% of annual global turnover, whichever is higher.

To simplify Kubernetes log management and reduce costs, two main methods are commonly used:

- Node-Level Logging Agents: Deployed as DaemonSets, these agents collect logs from all containers on a node, forwarding them to centralised storage. Tools like Fluentd, Fluent Bit, and Filebeat are popular for this approach due to their efficiency and compatibility with kubectl logs.

- Sidecar Logging Containers: Added to individual pods, these containers provide more control for applications with unique logging needs but consume more resources.

Key practices include centralising logs, using standardised formats like JSON, automating log rotation, and securing data with encryption and role-based access controls. Tools such as Fluentd, Fluent Bit, Filebeat, and Logstash offer different capabilities, balancing resource usage and functionality.

For UK organisations, careful log management ensures compliance with GDPR while keeping cloud costs in check. Strategies like tiered storage, log sampling, and automated cost monitoring can further optimise expenses. To streamline your Kubernetes logging setup, consider integrating tools with SIEM platforms and automating configurations with IaC tools like Terraform.

Quick Comparison:

| Method | Deployment | Resource Usage | Customisation | Ease of Use |
|---|---|---|---|---|
| Node-Level Logging Agents | DaemonSet per cluster | Low | Cluster-wide configuration | High (kubectl compatible) |
| Sidecar Logging Containers | One container per pod | High | Pod-level configuration | Moderate |

Effective log aggregation improves visibility, reduces downtime, and ensures compliance - all while controlling costs.


Main Methods for Kubernetes Log Aggregation

Kubernetes doesn’t come with a built-in solution for storing log data, so you’ll need to rely on third-party tools for cluster-wide logging [1][4]. Luckily, there are two main ways to centralise logs across your cluster, making it easier to monitor, troubleshoot, and analyse your workloads.

Each method operates differently, so it’s important to choose the one that aligns with your specific needs. Let’s explore the strengths and drawbacks of both approaches.

Node-Level Logging Agents

Node-level logging agents are one of the most efficient ways to aggregate logs in Kubernetes. These agents are deployed on every node in your cluster, typically as a DaemonSet, ensuring that each node runs a pod with the logging agent [1][2][3][6].

This method automatically collects logs from the stdout and stderr streams of all containers. Your applications can continue logging as usual, without requiring any changes to their code, as the logging agent handles everything [1][3][6]. This makes it a seamless solution for implementing a comprehensive logging setup.

Popular tools for this approach include Fluentd, Fluent Bit, and Filebeat. These agents gather logs from all containers on their respective nodes and forward them to a centralised storage system, such as Elasticsearch or a cloud-based logging service.
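To make the collection step above more concrete, here is a minimal Python sketch of the metadata a node-level agent typically recovers from log files on the node. It assumes the common kubelet layout, where files under /var/log/containers are named `<pod>_<namespace>_<container>-<container id>.log` with a 64-character hexadecimal ID, and the CRI line format `<timestamp> <stream> <tag> <message>`. The function names are illustrative and are not taken from Fluentd, Fluent Bit, or Filebeat.

```python
import re
from typing import NamedTuple, Optional

# Assumed file name layout under /var/log/containers:
#   <pod>_<namespace>_<container>-<64 hex character container id>.log
LOG_NAME = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_(?P<container>.+)"
    r"-(?P<container_id>[0-9a-f]{64})\.log$"
)


class LogSource(NamedTuple):
    pod: str
    namespace: str
    container: str


def parse_log_filename(filename: str) -> Optional[LogSource]:
    """Return pod, namespace, and container, or None if the name does not match."""
    match = LOG_NAME.match(filename)
    if match is None:
        return None
    return LogSource(match["pod"], match["namespace"], match["container"])


def parse_cri_line(line: str) -> dict:
    """Split one CRI-format line into its four space-separated parts."""
    timestamp, stream, tag, message = line.rstrip("\n").split(" ", 3)
    return {
        "time": timestamp,
        "stream": stream,          # "stdout" or "stderr"
        "partial": tag == "P",     # "P" marks a partial line, "F" a full one
        "message": message,
    }
```

A real agent attaches these fields to every record before forwarding it, which is what lets you filter by namespace or pod in the central store.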

One major advantage of node-level agents is their low resource usage. Since there’s only one agent per node, this method is particularly cost-efficient for large clusters, where resource consumption can significantly impact expenses.

Another bonus is compatibility with kubectl. Because applications keep writing to stdout and stderr, and the kubelet keeps those container log files on each node, recent logs remain accessible through the familiar kubectl logs command. Developers can debug without having to learn new tools or workflows [3].

Sidecar Logging Containers

For more granular control, sidecar logging containers offer a pod-specific approach. In this method, an additional container is deployed within the same pod as your application container [1][2][5]. This setup is especially useful for applications that write logs to files instead of stdout, or when you need different logging configurations for specific services.

Sidecar containers allow you to define custom logging rules at the pod level. This makes them ideal for handling legacy applications or services with unique logging requirements [2].

However, there’s a trade-off: higher resource consumption. Each pod now requires an additional container, which can add up quickly in a large cluster, leading to increased infrastructure costs [1][4][6].

Another consideration is compatibility. If your sidecar container uses a separate logging agent, its logs might not be accessible via the kubectl logs command, as they aren’t managed by the kubelet [1][6]. This could complicate debugging and require your team to adapt to new tools and processes.
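One way to keep kubectl logs working for file-based applications is a streaming sidecar that simply re-emits the file on its own stdout, where the kubelet and any node-level agent can pick it up. The sketch below is a simplified illustration in Python (in practice this is often just a small image running tail). The function names and the polling interval are assumptions, and it does not handle file rotation or truncation.

```python
import sys
import time
from typing import Iterator, TextIO


def read_new_lines(handle: TextIO) -> Iterator[str]:
    """Yield complete lines appended since the last call.

    A trailing partial line is left unread so it can be emitted whole later.
    """
    while True:
        position = handle.tell()
        line = handle.readline()
        if not line.endswith("\n"):
            handle.seek(position)  # end of file or partial line: try again later
            return
        yield line


def stream_file(path: str, poll_seconds: float = 1.0) -> None:
    """Follow a log file forever and copy each new line to stdout."""
    with open(path, "r", encoding="utf-8") as handle:
        while True:
            for line in read_new_lines(handle):
                sys.stdout.write(line)
            sys.stdout.flush()
            time.sleep(poll_seconds)
```

The sidecar and the application would share the log directory through a volume such as an emptyDir, so the application itself needs no changes.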

| Aspect | Node-Level Agents | Sidecar Containers |
|---|---|---|
| Deployment | One DaemonSet per cluster | One container per pod |
| Resource Usage | Low (one agent per node) | High (extra container per pod) |
| Application Changes | None required | May require adjustments |
| kubectl logs Support | Full compatibility | Limited compatibility |
| Customisation | Cluster-wide configuration | Pod-level configuration |

Choosing between these methods depends on your priorities. Node-level agents are a great starting point for their simplicity and efficiency, while sidecar containers are better suited for specific use cases that demand more tailored logging setups. Many organisations adopt a mix of both, using node-level agents for general logging and sidecar containers for special cases.

Best Practices for Kubernetes Log Aggregation

Managing Kubernetes logs effectively requires thoughtful configuration, strong security measures, and efficient management practices. How you handle your logging setup directly affects your operational efficiency and overall costs. By following these practices, you can create a logging system that scales with your needs while meeting security and compliance requirements.

Centralised Logging Solutions

Centralised logging brings together logs from nodes, pods, and control plane components into a single location, making monitoring and troubleshooting much simpler. This includes data from containers and critical control plane elements like etcd, kube-apiserver, and kubelet [7, 9, 11].

One of the biggest advantages of centralised logging is automatic log persistence. Even when ephemeral containers or pods are terminated or evicted, their logs remain securely stored in the centralised system. This prevents the loss of critical data and ensures long-term availability [7, 8, 11].

"Centralized logging helps access historical log data. With it, manually searching through the logs generated by different nodes or pods on a cluster becomes more manageable. Without a centralized logging system, the logs from a pod could be lost after terminating a pod." - Arfan Sharif, Product Marketing Lead for the Observability portfolio, CrowdStrike [7]

The operational benefits are clear. For example, in 2022, 64% of DevOps Pulse survey respondents reported that their Mean Time to Resolution (MTTR) during production incidents exceeded an hour, compared to 47% the previous year [8]. Centralised logging provides unified visibility across your infrastructure, enabling quicker issue correlation and analysis.

Standardised Log Formats

Once logs are centralised, maintaining consistency in their format is crucial for efficient analysis. A standardised log format makes it easier to search, filter, and analyse data across different services and teams. JSON has become a widely adopted format for structured logging, offering machine-readable output that integrates seamlessly with most log aggregation tools.

Structured logs eliminate the need to parse unformatted text, saving time and reducing errors. Instead of extracting data from free-form messages, logging tools can directly identify fields like timestamps, severity levels, service names, and error codes. This not only reduces processing time but also improves query performance.

Using consistent field naming across all applications is equally important. Common keys like timestamp, level, service, message, and trace_id ensure that logs from different teams follow the same pattern. This consistency is invaluable for correlating events across microservices or tracking requests in distributed systems.
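As an illustration of the field names listed above, the following sketch uses Python's standard logging module to emit one JSON object per line on stdout. The `JsonFormatter` class and `build_logger` helper are hypothetical names for this example, and the service name is passed in by the caller.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line with a fixed set of keys."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
            # trace_id is optional and supplied through the `extra` argument
            "trace_id": getattr(record, "trace_id", None),
        }
        return json.dumps(entry)


def build_logger(service: str) -> logging.Logger:
    """Create a logger that writes JSON lines to stdout for the node agent."""
    logger = logging.getLogger(service)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter(service))
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A call such as `build_logger("checkout").error("payment failed", extra={"trace_id": "abc123"})` then produces a line that an aggregation tool can index without any custom parsing.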

Log Rotation and Retention Policies

Centralised and standardised logs can quickly grow in volume, so managing their lifecycle is essential. Without proper control, storage usage - and costs - can spiral out of control. Automated log rotation and retention policies are key to keeping things manageable.

Rotation schedules should align with the activity level of your services. High-traffic services may need daily rotation, while less active components might only require weekly schedules. Size-based rotation, such as limiting files to 100MB–1GB, provides additional protection against unexpected surges in log volume.

Retention periods vary depending on compliance needs. GDPR does not prescribe a fixed period; it requires that personal data be kept no longer than necessary for its purpose. In practice, UK businesses may retain logs for one to two years, while financial institutions may require longer retention. A tiered storage approach can help: keep recent logs (e.g., the last 30 days) on fast storage, while archiving older logs to reduce costs.

Configuring retention policies at the application level offers more granular control. For instance, you might retain security audit logs longer than routine debug messages, ensuring compliance without unnecessary storage costs.
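The tiering and per-category retention described above can be expressed as a small decision function. This is only a sketch: the categories and day counts in `POLICY` are hypothetical placeholders, not recommendations, and should be replaced with whatever your compliance requirements dictate.

```python
from datetime import date

# Hypothetical policy: category -> (days on fast storage, total days retained)
POLICY = {
    "security_audit": (30, 730),
    "application": (30, 365),
    "debug": (7, 14),
}


def storage_action(category: str, written_on: date, today: date) -> str:
    """Return 'hot', 'archive', or 'delete' for a log batch of a given age."""
    hot_days, keep_days = POLICY[category]
    age_days = (today - written_on).days
    if age_days <= hot_days:
        return "hot"
    if age_days <= keep_days:
        return "archive"
    return "delete"
```

Running a function like this on a schedule keeps debug output from lingering while audit logs move to cheaper storage instead of being deleted.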

Securing Log Data

Logs often contain sensitive information, so protecting them - both in transit and at rest - is critical. Use Transport Layer Security (TLS) to encrypt communications between logging agents and centralised storage, preventing data interception during transmission.
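For a sense of what encrypted transport involves on the client side, here is a minimal sketch using Python's standard ssl module. It assumes a hypothetical collector that accepts newline-delimited records over a TLS socket; real agents such as Fluent Bit or Filebeat expose equivalent settings in their own configuration rather than through code like this.

```python
import socket
import ssl
from typing import Optional


def build_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a client context that verifies the server certificate and hostname."""
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return context


def send_line(host: str, port: int, line: str, context: ssl.SSLContext) -> None:
    """Send one log line to a (hypothetical) TLS-enabled collector endpoint."""
    with socket.create_connection((host, port), timeout=5) as raw_socket:
        with context.wrap_socket(raw_socket, server_hostname=host) as tls_socket:
            tls_socket.sendall(line.encode("utf-8") + b"\n")
```

The important part is that certificate verification stays switched on; disabling it to silence errors removes most of the protection TLS provides.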

For data stored at rest, encryption should be enabled at the storage level. Most enterprise logging platforms provide built-in encryption, but it's essential to ensure encryption keys are properly managed and rotated according to your organisation's security policies.

Role-Based Access Control (RBAC) is another vital measure. Define granular permissions so developers can access logs for their own services while restricting access to sensitive system logs or other teams’ data [8]. This reduces security risks and simplifies compliance audits. For UK organisations, GDPR compliance requires strict access controls, data retention policies, and anonymisation measures.

Resource Limits for Logging Agents

Logging agents can consume considerable CPU and memory resources, especially during high-traffic periods or when processing complex logs. Setting resource limits ensures that logging activities don’t interfere with application performance.

Memory limits should account for temporary traffic spikes. Start with 128–256MB per agent, though high-traffic environments may need more. Monitor actual usage patterns to adjust limits as needed.

CPU limits are equally important. If set too low, logging agents may fall behind and drop logs during peak periods. If set too high, they could compete with application workloads for resources. A good starting point is 100m–200m CPU (0.1–0.2 cores), with adjustments based on performance. Kubernetes derives a pod's QoS class from its requests and limits rather than from a direct setting: giving the agent requests below its limits places it in the 'Burstable' class, which lets it use extra resources during spikes while keeping a baseline allocation.
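The relationship between requests, limits, and QoS class can be sketched as follows. This is a simplified, single-container approximation of the Kubernetes rules written for illustration; it compares quantity strings literally (so "0.1" and "100m" would count as different), and the values in `AGENT_RESOURCES` are starting points in the ranges mentioned above, expressed in Mi as an assumption.

```python
from typing import Dict

# Example starting values for a logging agent container (assumed, tune per cluster)
AGENT_RESOURCES = {
    "requests": {"cpu": "100m", "memory": "128Mi"},
    "limits": {"cpu": "200m", "memory": "256Mi"},
}


def qos_class(requests: Dict[str, str], limits: Dict[str, str]) -> str:
    """Approximate the QoS class Kubernetes would derive for one container."""
    if not requests and not limits:
        return "BestEffort"
    # When only a limit is given, Kubernetes uses it as the request too.
    effective_requests = {**limits, **requests}
    guaranteed = all(
        resource in limits and effective_requests[resource] == limits[resource]
        for resource in ("cpu", "memory")
    )
    return "Guaranteed" if guaranteed else "Burstable"
```

With the example values, `qos_class(AGENT_RESOURCES["requests"], AGENT_RESOURCES["limits"])` gives "Burstable", because the requests sit below the limits.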

Preventing Sensitive Data in Logs

Even with secure handling, accidentally logging sensitive data can pose compliance and security risks. Data sanitisation at multiple levels is essential to prevent exposing sensitive information.

Start by configuring applications to avoid logging sensitive fields such as passwords, API keys, and personal identifiers. Structured logging provides better control over which fields are included in the output.

At the agent level, filtering can identify and mask patterns resembling sensitive data - like credit card numbers, email addresses, or API tokens - using regex to redact or hash values before transmission.
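A rough sketch of the agent-level masking described above is shown here in Python. The patterns are deliberately simple assumptions and will miss some formats and catch some false positives, so treat them as a starting point rather than a complete filter. Note also that a truncated hash of an email address is pseudonymisation, not anonymisation.

```python
import hashlib
import re

# Illustrative patterns only; real deployments need patterns tuned to their data
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators
BEARER = re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/-]+=*")


def _hash_email(match: re.Match) -> str:
    """Replace an address with a short, stable digest so events still correlate."""
    digest = hashlib.sha256(match.group(0).lower().encode("utf-8")).hexdigest()
    return f"email:{digest[:12]}"


def redact(message: str) -> str:
    """Mask bearer tokens and card-like numbers, and hash email addresses."""
    message = BEARER.sub("Bearer [REDACTED]", message)
    message = CARD.sub("[REDACTED_CARD]", message)
    message = EMAIL.sub(_hash_email, message)
    return message
```

Applying `redact` before a record leaves the node means the sensitive value never reaches central storage, which is easier to defend than cleaning it up afterwards.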

For UK organisations managing personal data, applying data minimisation principles helps ensure compliance with GDPR. Only log data essential for operations, and exclude or anonymise personal identifiers to reduce risks.

Log sampling can also be a useful strategy for high-volume, low-value data streams. Instead of capturing every debug message, sampling a representative subset reduces storage costs and processing overhead while still providing meaningful insights into system behaviour.
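One possible way to implement the sampling described above is to keep every warning and error, and to keep a fixed fraction of lower-severity events chosen by hashing a key such as a trace ID. The sketch below assumes that approach; the level names and the choice of key are illustrative. Hashing rather than random selection means all lines belonging to a sampled request are kept together.

```python
import hashlib

ALWAYS_KEEP = {"WARNING", "ERROR", "CRITICAL"}


def should_keep(level: str, sample_key: str, sample_rate: float) -> bool:
    """Keep all important events and a deterministic fraction of the rest.

    sample_rate is a fraction between 0.0 (drop all) and 1.0 (keep all).
    """
    if level.upper() in ALWAYS_KEEP:
        return True
    digest = hashlib.sha256(sample_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < sample_rate * 10_000
```

For example, `should_keep("DEBUG", trace_id, 0.1)` retains roughly one in ten debug traces while never discarding an error.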


Kubernetes Log Aggregation Tools Comparison

Choosing the right log aggregation tool for your Kubernetes setup hinges on your operational priorities and resource constraints. The right tool can help you reap the rewards of a centralised logging strategy, as discussed earlier.

Fluentd is a widely used open-source collector written in C and Ruby. It operates efficiently with around 40 MB of memory in its default setup and is trusted by over 2,000 organisations that rely on data-driven insights [9].

Fluent Bit is the lightweight sibling to Fluentd, tailored for environments with limited resources. Its simplified design makes it an excellent choice for edge deployments or high-density container environments.

Filebeat, developed by Elastic, is a node-level logging agent designed for simplicity and efficiency. Its ability to forward logs with minimal processing overhead is particularly appealing for teams already using the Elastic Stack.

Logstash is an open-source server-side pipeline for data processing. It offers advanced capabilities for transforming and enriching data, though this comes at the cost of higher CPU and memory usage. This trade-off is often worth it for teams dealing with complex log processing needs.

Tool Comparison Table

Here’s a quick breakdown of the key features of these tools, highlighting their differences in deployment and resource usage:

| Feature | Fluentd | Fluent Bit | Logstash | Filebeat |
|---|---|---|---|---|
| Type | Open-source data collector | Lightweight version of Fluentd | Free and open-source data processing pipeline | Node-level logging agent |

Pricing for these tools can vary. For example, Elastic Cloud starts at approximately £12/month, while other managed solutions often use tiered pricing based on log volume [9].

Resource consumption differs significantly across these tools. Fluent Bit’s minimalistic design makes it a cost-effective option for environments with tight budgets. On the other hand, Logstash’s robust log transformation capabilities can help streamline downstream storage and processing. Operational complexity also varies: Filebeat’s straightforward setup allows for quick deployment and low maintenance, Fluentd provides extensive customisation options, and Logstash’s domain-specific language can present a steeper learning curve.

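All these tools support TLS encryption and can integrate with encrypted storage solutions. That supports GDPR compliance, although encryption alone does not guarantee it; access controls, retention policies, and data minimisation still need to be in place.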

When selecting the best tool for your Kubernetes environment, consider the balance between complexity, cost, and compliance. For example, teams already working within the Elastic ecosystem often find Filebeat to be a seamless choice. Meanwhile, high-volume environments might benefit from Fluent Bit’s lightweight nature, and more complex processing scenarios may require the advanced capabilities of Logstash or Fluentd. Choosing the right tool can significantly improve log aggregation efficiency and help manage costs.

For tailored advice on optimising your Kubernetes logging strategy and reducing operational expenses, you can consult Hokstad Consulting. They specialise in streamlining DevOps workflows, cloud infrastructure, and hosting cost strategies.

Advanced Techniques and Cost Reduction

For businesses in the UK navigating tight budgets and stringent regulations, advanced log aggregation techniques offer a way to improve operations while keeping costs in check. Building on the foundation of centralised logging, these techniques enhance efficiency and integrate seamlessly with tools like SIEM and monitoring platforms, offering better security and operational insights.

Integration with SIEM and Monitoring Platforms

Linking your Kubernetes log aggregation system with Security Information and Event Management (SIEM) platforms transforms raw logs into meaningful security insights. For organisations adhering to GDPR and other UK regulations, this integration is critical.

SIEM systems can process structured log data directly from your Kubernetes pipeline. By filtering and enriching logs before sending them to the SIEM, you can reduce the volume of data processed, which in turn lowers licensing costs.

Log correlation adds another layer of security by identifying potential threats early, providing contextual alerts without overloading the system. This preprocessing reduces the computational demands on your SIEM while improving the relevance of alerts.

Real-time alerting becomes more impactful when logging systems are configured to detect key patterns - such as repeated container restarts, unusual spikes in resource usage, or failed login attempts. These alerts can feed directly into monitoring platforms, creating a comprehensive observability strategy that keeps you ahead of potential issues.
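As a sketch of the pattern detection described above, the class below flags a container that restarts several times within a sliding time window. The threshold and window values are arbitrary assumptions, and in practice this logic usually lives in the alerting rules of a monitoring or SIEM platform rather than in custom code.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class RestartDetector:
    """Flag containers that restart too often within a sliding time window."""

    def __init__(self, threshold: int = 3, window_seconds: float = 300.0) -> None:
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, container: str, timestamp: float) -> bool:
        """Record one restart event; return True if an alert should fire."""
        events = self._events[container]
        events.append(timestamp)
        # Discard restarts that have fallen out of the window
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) >= self.threshold
```

Feeding each restart event from the log pipeline into `observe` yields an alert only when restarts cluster together, which avoids paging on a single isolated restart.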

Custom Solutions and Automation

In hybrid or multi-cloud environments, automation can bridge the gaps between cloud providers and on-premises systems. Customisation plays a big role here.

- Custom log routing: Direct logs to appropriate destinations based on their content or source. For instance, financial transaction logs might need to be stored in highly secure, UK-based storage systems, while general application logs could be sent to a more cost-effective cloud solution.

- Automated log parsing and transformation: Use custom parsers to extract key fields and normalise data before storage. This reduces downstream processing demands and simplifies analysis.

- Log sampling: For high-volume applications, algorithms can sample routine logs while ensuring all errors and unusual patterns are fully captured. This keeps storage and processing costs in check without sacrificing visibility.
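The routing idea in the list above can be reduced to an ordered set of rules, where the first match wins. The sketch below is illustrative only: the destination names, the `category` and `namespace` fields, and the rules themselves are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Event = Dict[str, str]

# Ordered rules: (predicate, destination). All names here are hypothetical.
RULES: List[Tuple[Callable[[Event], bool], str]] = [
    (lambda e: e.get("category") == "financial_transaction", "secure-uk-store"),
    (lambda e: e.get("namespace") == "kube-system", "platform-logs"),
]
DEFAULT_DESTINATION = "low-cost-cloud-store"


def route(event: Event) -> str:
    """Return the destination for an event using the first matching rule."""
    for predicate, destination in RULES:
        if predicate(event):
            return destination
    return DEFAULT_DESTINATION
```

Keeping the rules in a single ordered list makes it easy to review which data goes where, which is useful evidence during a compliance audit.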

Using Infrastructure as Code (IaC) tools like Terraform or Ansible can standardise logging configurations across environments. These tools ensure consistency, reduce manual errors, and speed up deployment. By automating these processes, businesses can streamline workflows and achieve noticeable cost savings.

Reducing Log Aggregation Costs

Kubernetes environments can quickly rack up costs when it comes to log aggregation, but a few strategic measures can help you save money while maintaining visibility.

- Tiered storage: Keep recent logs in high-performance storage for quick access and archive older logs to more economical storage options. This balances cost and compliance needs.

- Compression and local storage: Compress logs and store them in UK data centres to minimise egress charges and meet GDPR requirements.

- Smart filtering: Instead of capturing every log entry, focus on critical events - like errors, security incidents, or key business transactions. This targeted approach reduces storage needs without losing essential insights.

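- Off-peak processing: Schedule non-urgent log processing, such as batch indexing or archival jobs, during quieter hours so it does not compete with production workloads and can use spare capacity where that is cheaper.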
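To show why compression features in the list above, here is a small sketch that packs a batch of structured events into gzip-compressed, newline-delimited JSON and reads it back. The batch format is an assumption for illustration; log agents and storage backends usually offer their own compression settings.

```python
import gzip
import json
from typing import Dict, List


def compress_batch(events: List[Dict]) -> bytes:
    """Serialise events as newline-delimited JSON and gzip the result."""
    payload = "\n".join(json.dumps(event, separators=(",", ":")) for event in events)
    return gzip.compress(payload.encode("utf-8"))


def decompress_batch(blob: bytes) -> List[Dict]:
    """Reverse compress_batch and return the original list of events."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines()]
```

Structured logs repeat the same keys and many of the same values on every line, so they tend to compress well, and the saving applies to both storage and any data transferred out of the cluster.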

For businesses looking to refine their Kubernetes logging strategies, Hokstad Consulting offers tailored solutions. Their expertise in cloud cost engineering and DevOps transformation has helped UK companies cut cloud expenses by 30–50%. Their services include comprehensive logging cost analysis and automation solutions designed specifically for UK requirements.

Automated cost monitoring is another way to keep budgets in check. By setting alerts for when logging expenses exceed thresholds and using automated scaling to adjust log retention periods, businesses can avoid unexpected spikes in costs while ensuring they maintain adequate coverage for their operational needs.
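A back-of-the-envelope version of this monitoring can be sketched in a few lines. The model below assumes a steady daily log volume and two storage tiers billed per GB per month; the prices, volumes, and function names are hypothetical, so substitute your provider's actual rates.

```python
def monthly_storage_cost(
    daily_gb: float,
    hot_days: int,
    total_days: int,
    hot_price: float,
    archive_price: float,
) -> float:
    """Estimate steady-state monthly storage cost for a two-tier setup.

    Prices are per GB per month; logs older than hot_days sit in the archive tier.
    """
    hot_gb = daily_gb * min(hot_days, total_days)
    archive_gb = daily_gb * max(total_days - hot_days, 0)
    return hot_gb * hot_price + archive_gb * archive_price


def retention_within_budget(
    daily_gb: float,
    hot_days: int,
    total_days: int,
    hot_price: float,
    archive_price: float,
    budget: float,
    min_days: int,
) -> int:
    """Shorten total retention a day at a time until cost fits the budget.

    Never goes below min_days, which stands in for a compliance minimum.
    """
    days = total_days
    while days > min_days and monthly_storage_cost(
        daily_gb, hot_days, days, hot_price, archive_price
    ) > budget:
        days -= 1
    return days
```

An alert can fire whenever `monthly_storage_cost` exceeds the budget, with `retention_within_budget` suggesting how far retention could be shortened without breaching the agreed minimum.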

Conclusion

Mastering Kubernetes log aggregation can greatly influence your organisation’s ability to operate efficiently, maintain security, and meet compliance requirements. The strategies shared in this guide offer a clear path to transforming scattered log data into meaningful insights while keeping costs under control.

At the heart of this process is a well-structured log aggregation framework. By leveraging centralised logging tools, adopting standardised formats, and incorporating robust security measures, you lay the groundwork for a system that can scale with your needs. Adding advanced methods like SIEM integration and automated cost management elevates this from mere log handling to a forward-thinking observability strategy capable of identifying issues before they reach your customers.

For UK businesses, the stakes are especially high with GDPR. A carefully designed logging system can be the difference between resolving incidents quickly and enduring prolonged downtime, between staying compliant and facing penalties, or between managing cloud expenses effectively and exceeding budgets.

The tiered storage models, intelligent filtering, and automated scaling techniques discussed here have already demonstrated their effectiveness in real-world applications. Many organisations adopting these approaches report immediate benefits, such as improved operational transparency and reduced costs.

Hokstad Consulting has helped numerous UK companies put these strategies into action. Their expertise extends beyond selecting the right tools - they focus on building scalable, cost-effective systems tailored to meet UK-specific security and compliance requirements.

FAQs

What’s the difference between node-level logging agents and sidecar logging containers for Kubernetes log aggregation?

Node-level logging agents work at the node level and are usually deployed as DaemonSets. They gather logs from all the containers running on a node and send them to a centralised location. This setup is easier to manage across the entire cluster and avoids the need to deploy multiple agents for each pod.

On the other hand, sidecar logging containers are deployed within the same pod as the application containers. These act as dedicated log collectors for that specific pod, offering more control and isolation. However, this approach can lead to higher resource consumption and added complexity, especially in larger Kubernetes clusters. Deciding between these methods depends on what you prioritise - scalability, resource efficiency, or detailed log management.

How can businesses in the UK manage Kubernetes logs while staying GDPR compliant?

To manage Kubernetes logs while adhering to GDPR regulations, UK businesses should take several important steps. First, encrypt data both when it's stored and during transmission. This ensures personal information remains protected from unauthorised access.

It's equally important to implement role-based access controls. By restricting log access to only those who need it, you reduce the risk of sensitive data being mishandled.

Another key practice is regular auditing and monitoring of logs. This helps demonstrate compliance and maintain accountability. Alongside this, keeping detailed audit trails of who accessed or modified logs provides transparency and supports GDPR requirements. Not only does this approach help with compliance, but it also boosts overall data security.

How can I minimise costs when managing Kubernetes log aggregation?

To keep Kubernetes log aggregation costs under control, one effective approach is to adopt tiered storage solutions. This means keeping recent logs on high-speed storage for easy access, while moving older logs to more affordable storage options. Pair this with log retention policies that automatically archive or delete outdated logs, and you'll see a reduction in storage expenses over time.

Another smart move is implementing log level filtering. By capturing only the most relevant data and cutting out excessive or overly detailed logs, you can significantly reduce the amount of storage needed. When you combine these tactics with centralised log collection and regular log rotation, you create a streamlined, budget-friendly system for managing Kubernetes logs efficiently.