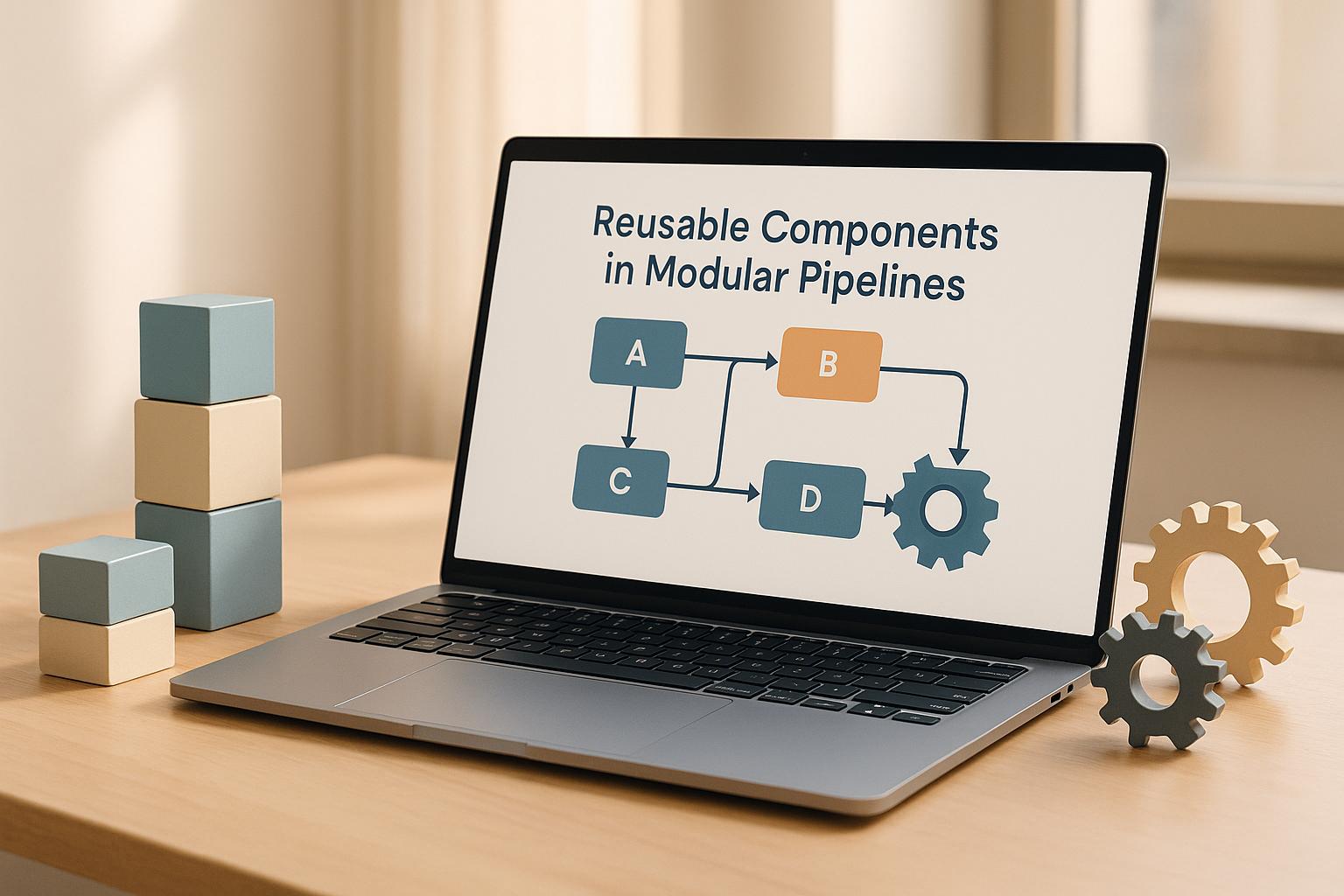

Reusable components in modular CI/CD pipelines simplify complex workflows, reduce redundancy, and improve efficiency. By breaking down processes like testing, security checks, and deployments into smaller, reusable modules, teams can save time, reduce costs, and maintain consistency across projects.

Key points:

- Modular pipelines use self-contained units with clear inputs/outputs, offering flexibility for different projects.

- Reusable components cut down on repetitive work, ensure consistent execution, and lower maintenance costs.

- Version control (e.g., semantic versioning) and documentation are crucial for managing updates and ensuring compatibility.

- Testing and validation ensure reliability, with automated checks catching issues early.

- Centralised libraries make components easy to find, share, and maintain.

- UK-specific concerns like GDPR compliance, cost control, and data sovereignty can be addressed by embedding regulatory checks and cost-monitoring features into components.

This approach is especially useful for UK organisations managing tight budgets and strict regulations. Starting small and working with DevOps experts can help streamline the transition to modular pipelines while ensuring long-term success.

Core Principles of Reusable Component Design

Creating reusable components that consistently perform across various projects and teams requires a solid foundation of design principles. These principles not only ensure reliability but also streamline workflows, cutting down on unnecessary revisions and simplifying integration processes.

Standard Interfaces and Documentation

Every component should have clearly defined inputs, outputs, and configuration parameters that remain stable across versions. This consistency eliminates guesswork and makes it easier for teams to integrate components seamlessly into different environments.
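One way to make such a contract explicit is to declare each input's type and default alongside the component, and to validate what callers pass in before the component runs. The Python sketch below is illustrative only: `InputSpec`, `ComponentSpec` and the `container-scan` component are hypothetical names, not the API of any particular CI system.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class InputSpec:
    kind: type              # expected Python type of the value
    required: bool = True
    default: Any = None     # used only when required is False


@dataclass(frozen=True)
class ComponentSpec:
    name: str
    version: str
    inputs: Dict[str, InputSpec]
    outputs: Tuple[str, ...] = ()

    def resolve_inputs(self, supplied: Dict[str, Any]) -> Dict[str, Any]:
        """Validate caller-supplied inputs against the declared interface and fill in defaults."""
        unknown = set(supplied) - set(self.inputs)
        if unknown:
            raise ValueError(f"{self.name}: unknown inputs {sorted(unknown)}")
        resolved: Dict[str, Any] = {}
        for key, spec in self.inputs.items():
            if key in supplied:
                if not isinstance(supplied[key], spec.kind):
                    raise TypeError(f"{self.name}: input '{key}' must be {spec.kind.__name__}")
                resolved[key] = supplied[key]
            elif spec.required:
                raise ValueError(f"{self.name}: missing required input '{key}'")
            else:
                resolved[key] = spec.default
        return resolved


# Hypothetical security-scan component with a stable, documented interface
container_scan = ComponentSpec(
    name="container-scan",
    version="1.2.0",
    inputs={
        "image": InputSpec(str),
        "fail_on_severity": InputSpec(str, required=False, default="high"),
    },
    outputs=("report_path", "passed"),
)
```

Calling `container_scan.resolve_inputs({"image": "app:1.4"})` returns the image plus the default `fail_on_severity` of `"high"`, while a misspelt or missing input fails immediately with a clear message rather than surfacing later as an obscure pipeline error.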

For instance, one case study described how standardised interfaces, first defined in a design guideline and later turned into a reusable code library, improved both flexibility and visual consistency. Customising behaviour through parameters ("props") let teams tailor functionality without breaking the shared design. The same principle applies to pipeline components: expose configuration through declared inputs rather than by editing the component itself.

Documentation plays a key role in making components effective. It should include detailed usage instructions, design principles, configuration options, and troubleshooting guides. A well-documented component serves as a single source of truth, reducing confusion and speeding up onboarding for new team members. Tailor your documentation to suit different audiences - developers need in-depth technical details, while project managers benefit from high-level summaries of functionality.

"A design system is a toolkit containing various resources to help developers and designers create consistent user experiences that authentically represent the brand." - Frontify

Combining structured documentation with proper training tends to reduce errors and encourage faster adoption of new systems.

Once your interfaces and documentation are solid, the next step is managing component versions effectively.

Version Control and Compatibility Management

Using semantic versioning can prevent the chaos often associated with component updates. A clear versioning format - major.minor.patch - helps teams understand the scope of changes: major versions introduce breaking changes, minor versions add features, and patch versions address bugs. This clarity ensures teams can update components with confidence, knowing exactly what to expect.
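Because the rule is mechanical, a pipeline can apply it automatically, for example to require a manual review before adopting a major release. This minimal Python sketch assumes plain `MAJOR.MINOR.PATCH` strings; it ignores pre-release tags and the special meaning the SemVer specification gives to `0.x` versions, and the function names are illustrative.

```python
from typing import Tuple


def parse_semver(version: str) -> Tuple[int, int, int]:
    """Parse 'MAJOR.MINOR.PATCH' into integers so versions compare numerically."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def change_type(current: str, candidate: str) -> str:
    """Classify the upgrade from `current` to `candidate` using SemVer rules."""
    cur, new = parse_semver(current), parse_semver(candidate)
    if new <= cur:
        return "none"      # same or older version: nothing to upgrade
    if new[0] != cur[0]:
        return "major"     # breaking change: read the migration guide first
    if new[1] != cur[1]:
        return "minor"     # new, backward-compatible functionality
    return "patch"         # backward-compatible bug fixes only
```

Comparing integer tuples rather than strings matters: as strings, `"1.10.0"` would sort before `"1.9.0"`.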

Planning for backward compatibility is another critical step. When releasing new versions, assess how changes will affect existing implementations. Provide migration guides and issue deprecation warnings well in advance of retiring old functionality. This approach minimises disruptions and avoids costly fixes later on.
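Deprecation warnings can be built into the component itself. In this Python sketch, an input renamed in a new release is still accepted under its old name for a transition period, but callers are warned so they can migrate before support is dropped. The `RENAMED_INPUTS` table and the `3.0.0` removal version are hypothetical.

```python
import warnings
from typing import Any, Dict

# Hypothetical mapping of deprecated input names to their replacements
RENAMED_INPUTS = {"docker_image": "image"}


def migrate_inputs(inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Accept deprecated input names for now, warning callers to switch to the new names."""
    migrated = dict(inputs)
    for old, new in RENAMED_INPUTS.items():
        if old in migrated:
            warnings.warn(
                f"input '{old}' is deprecated and will be removed in 3.0.0; use '{new}'",
                DeprecationWarning,
                stacklevel=2,
            )
            # Keep the caller's value for the new name if they already set it
            migrated.setdefault(new, migrated[old])
            del migrated[old]
    return migrated
```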

With versioning in place, the focus shifts to rigorous testing and validation.

Testing and Validation

To ensure reliability, run both unit tests and integration tests as part of your continuous integration pipelines. Automated validation can catch regressions early, benchmark performance, and enforce quality standards, with checks covering code coverage, security, documentation, and overall performance.

"Testing with one user early in the project is better than testing with 50 near the end." - Jakob Nielsen, Principal of Nielsen Norman Group

Monitoring resource usage and execution times is equally important. Set performance thresholds and trigger alerts if they are exceeded, allowing you to quickly identify and address regressions. This process not only builds confidence in updates but also helps optimise performance and manage costs.
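A threshold check can be as simple as comparing each run's measurements with a per-component budget and emitting an alert for every breach. This Python sketch assumes the CI system already reports duration and peak memory per component; the `THRESHOLDS` values are hypothetical and would normally come from baseline runs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RunMetrics:
    component: str
    duration_s: float
    peak_memory_mb: float


# Hypothetical per-component budgets
THRESHOLDS: Dict[str, Dict[str, float]] = {
    "unit-tests": {"duration_s": 300, "peak_memory_mb": 2048},
    "container-scan": {"duration_s": 120, "peak_memory_mb": 1024},
}


def check_thresholds(runs: List[RunMetrics]) -> List[str]:
    """Return an alert message for every metric that exceeds its threshold."""
    alerts: List[str] = []
    for run in runs:
        limits = THRESHOLDS.get(run.component, {})   # components without budgets are skipped
        for metric, limit in limits.items():
            value = getattr(run, metric)
            if value > limit:
                alerts.append(f"{run.component}: {metric}={value} exceeds {limit}")
    return alerts
```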

Establishing quality gates ensures that only well-tested, dependable components make it into your library. These gates might include minimum code coverage, passing security scans, comprehensive documentation, and meeting performance benchmarks. By enforcing these standards, you ensure that every component is robust and ready for use.
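The gates described above can be expressed as data and evaluated in one place before a component is published. In this Python sketch the metric names and limits in `GATES` are hypothetical; a metric that was never reported is treated as a failure so that a broken measurement step cannot quietly let a component through.

```python
from typing import Dict, List, Tuple

# Hypothetical gates: metric name -> (comparison, threshold)
GATES: Dict[str, Tuple[str, float]] = {
    "line_coverage_pct": (">=", 80.0),
    "critical_vulnerabilities": ("<=", 0),
    "docs_complete": (">=", 1),        # 1 if usage, inputs and examples are documented
    "p95_duration_s": ("<=", 180.0),
}


def evaluate_gates(metrics: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Return (passed, failures); a metric that was not reported counts as a failure."""
    failures: List[str] = []
    for name, (op, threshold) in GATES.items():
        if name not in metrics:
            failures.append(f"{name}: not reported")
            continue
        value = metrics[name]
        ok = value >= threshold if op == ">=" else value <= threshold
        if not ok:
            failures.append(f"{name}: {value} (required {op} {threshold})")
    return not failures, failures
```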

Building and Managing Reusable Components

Creating and managing reusable components effectively requires a structured approach that emphasises organisation, maintenance, and scalability. By implementing systematic storage and clear processes, teams can build systems that grow alongside their organisation while remaining straightforward to adopt. A key step in this process is developing centralised component libraries to streamline and standardise development efforts.

Creating and Sharing Component Libraries

A centralised library acts as the go-to resource where teams can find, evaluate, and integrate pre-built solutions. This prevents wasted effort on recreating functionality simply because existing components are hard to locate.

To make these libraries effective, establish clear naming conventions and categorisation systems. Group components based on their purpose, such as deployment patterns, testing utilities, security checks, or infrastructure provisioning. Each component should include detailed metadata, such as its purpose, dependencies, compatibility requirements, and usage examples, to help teams understand and adopt it quickly.
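Naming conventions and required metadata are easiest to enforce when the library checks each entry on submission. This Python sketch assumes a hypothetical convention where names are lower-case, hyphenated and prefixed with their category; the field list and category set are illustrative.

```python
import re
from typing import Any, Dict, List

REQUIRED_FIELDS = ("name", "category", "description", "dependencies", "compatibility", "examples")
CATEGORIES = {"deploy", "test", "security", "infra"}
NAME_PATTERN = re.compile(r"[a-z0-9]+(-[a-z0-9]+)*")   # lower-case, hyphen-separated


def validate_metadata(meta: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the entry can be catalogued."""
    problems = [f"missing field '{f}'" for f in REQUIRED_FIELDS if f not in meta]
    name = meta.get("name", "")
    category = meta.get("category")
    if category is not None and category not in CATEGORIES:
        problems.append(f"unknown category '{category}'")
    if name and not NAME_PATTERN.fullmatch(name):
        problems.append(f"name '{name}' is not lower-case and hyphenated")
    if category in CATEGORIES and name and not name.startswith(category + "-"):
        problems.append(f"name '{name}' should start with '{category}-'")
    if "examples" in meta and not meta["examples"]:
        problems.append("at least one usage example is required")
    return problems
```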

Automating publishing pipelines can significantly simplify library management. Set up automated workflows that publish components as soon as they pass quality checks. This ensures the library stays current without requiring manual intervention. For critical components, implement approval processes to maintain oversight, while allowing quicker updates for minor fixes or non-breaking changes.
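The publishing rule can be captured in a small decision function that the pipeline calls once quality checks have run. This Python sketch is an assumption about how such a policy might look; in particular, sending every major (breaking) release for approval, not only critical ones, is an illustrative choice rather than something the text prescribes.

```python
from dataclasses import dataclass


@dataclass
class Release:
    component: str
    change: str          # "major", "minor" or "patch"
    checks_passed: bool
    critical: bool       # e.g. production deployment or security components


def publish_action(release: Release) -> str:
    """Decide what the publishing pipeline should do with a candidate release."""
    if not release.checks_passed:
        return "reject"
    if release.critical or release.change == "major":
        return "await-approval"   # human sign-off for risky or breaking changes
    return "auto-publish"          # non-breaking change to a non-critical component
```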

Security is another key consideration. Role-based permissions can help protect sensitive components while still enabling authorised users to discover and access them. As the library grows, tracking usage data becomes crucial. Metrics like adoption rates, performance, and integration patterns can highlight which components are beneficial, which need refining, and where new components could address recurring issues. A well-organised shared library also provides the building blocks for nested and template pipelines, which can streamline workflows further.

Using Nested and Template Pipelines

Nested pipelines simplify complex workflows by breaking them into smaller, manageable parts. A parent pipeline coordinates multiple child pipelines, each handling specific tasks like testing, security scans, or deployments across environments. This layered approach keeps individual pipelines straightforward while supporting advanced deployment processes.
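The coordinating role of a parent pipeline can be sketched as running child pipelines in dependency order. This Python example uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); the child names and the callables standing in for child pipelines are hypothetical, and it runs children one at a time for simplicity, whereas a real CI system would run independent children in parallel.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_parent_pipeline(children: Dict[str, Callable[[], bool]],
                        needs: Dict[str, Set[str]]) -> List[str]:
    """Run child pipelines so each starts only after the children it needs; stop on failure.

    `needs` maps each child to the set of children that must finish first.
    Returns the names of the children that completed successfully, in order.
    """
    completed: List[str] = []
    for name in TopologicalSorter(needs).static_order():
        if not children[name]():
            raise RuntimeError(f"child pipeline '{name}' failed after {completed}")
        completed.append(name)
    return completed
```

Usage: with `needs = {"test": set(), "security-scan": set(), "deploy-staging": {"test", "security-scan"}, "deploy-production": {"deploy-staging"}}`, both checks run before staging, and production deploys last.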

Template pipelines, on the other hand, act as flexible blueprints that teams can adapt to their needs. Instead of duplicating and editing existing pipelines, teams can reference templates and customise them with configuration values. This ensures consistency across deployments while allowing for necessary adjustments.

For example, templates can be created for common scenarios like microservice deployments, database migrations, infrastructure provisioning, or security compliance checks. Each template should include sensible defaults that work for most cases, along with clear documentation on customisation options. Teams can start with minimal configuration and scale up to more advanced settings as needed.
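The "sensible defaults plus overrides" idea amounts to a deep merge of a team's configuration onto the template. In this Python sketch the `MICROSERVICE_TEMPLATE` keys are hypothetical; nested settings are merged key by key, so a team can change one field of the health check without restating the rest, and the shared template is never modified.

```python
import copy
from typing import Any, Dict

# Hypothetical microservice deployment template with sensible defaults
MICROSERVICE_TEMPLATE: Dict[str, Any] = {
    "replicas": 2,
    "health_check": {"path": "/healthz", "timeout_s": 5},
    "environments": ["staging", "production"],
}


def render_template(template: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Deep-merge team overrides onto template defaults without mutating either input."""
    result = copy.deepcopy(template)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = render_template(result[key], value)   # merge nested settings
        else:
            result[key] = copy.deepcopy(value)                  # replace scalars and lists
    return result
```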

Nested pipelines also offer flexibility for projects of varying complexity. Simpler projects can stick to basic templates with default settings, while more intricate applications can use multiple nested pipelines with tailored logic. However, managing versions of nested and template pipelines requires careful planning. Changes to a parent template can impact all dependent pipelines, so thorough testing in staging environments is essential before rolling updates into production. Providing multiple template versions gives teams the freedom to adopt changes at their own pace.
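Offering several template versions works well when pipelines pin a version prefix rather than an exact release. In this Python sketch, a hypothetical pin such as `"2"` resolves to the newest published 2.x release, so a team receives minor and patch updates automatically while choosing when to move to 3.x.

```python
from typing import List, Optional, Tuple


def _key(version: str) -> Tuple[int, ...]:
    """Turn '2.10.0' into (2, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def resolve_pin(pin: str, available: List[str]) -> Optional[str]:
    """Resolve a pin like '2', '2.3' or '2.3.1' to the newest matching published version."""
    prefix = _key(pin)
    matches = [v for v in available if _key(v)[:len(prefix)] == prefix]
    return max(matches, key=_key) if matches else None
```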

Component Lifecycle Management

Without proper lifecycle management, component libraries can quickly become cluttered with outdated, insecure, or redundant elements. A well-maintained library requires regular updates, clear deprecation policies, and robust cataloguing systems.

Regular review cycles are essential to balance stability with progress. Component maintainers should assess performance, security, and relevance periodically. Components with declining usage or high maintenance costs may need to be consolidated or retired, while heavily used components should receive updates to enhance their performance and functionality.

When retiring components, establish clear deprecation timelines and provide detailed migration guides. As a rule of thumb, allow 90 to 180 days' notice, depending on the component's importance and the complexity of migration. Migration guides should explain not only what needs to change but also the benefits of changing. Automated tools that detect deprecated components across teams can track migration progress and help ensure smooth transitions.
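A basic version of such a tool scans which components each team's pipelines use and reports outstanding migrations with the time remaining. This Python sketch assumes usage data has already been collected per team; the `DEPRECATIONS` register, component names and dates are hypothetical.

```python
from datetime import date
from typing import Dict, List, Tuple

# Hypothetical deprecation register: component -> (replacement, removal date)
DEPRECATIONS: Dict[str, Tuple[str, date]] = {
    "deploy-legacy-vm": ("deploy-container", date(2025, 9, 30)),
}


def find_deprecated_usage(usage: Dict[str, List[str]], today: date) -> List[str]:
    """Report every team still using a deprecated component and the days left to migrate."""
    findings: List[str] = []
    for team, components in sorted(usage.items()):
        for component in components:
            if component in DEPRECATIONS:
                replacement, removal = DEPRECATIONS[component]
                days_left = (removal - today).days
                findings.append(f"{team}: replace {component} with {replacement} ({days_left} days left)")
    return findings
```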

As libraries grow, effective cataloguing becomes critical. Search functionality should go beyond basic name matching, allowing teams to discover components by describing their needs. Features like tagging, capability matrices, and real-world usage examples can make discovery easier and more intuitive.
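Even simple keyword matching across names, tags and descriptions lets teams search by describing a need rather than guessing a name. This Python sketch uses word overlap only; the `CatalogueEntry` type and its fields are hypothetical, and a production catalogue might instead use full-text or semantic search.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class CatalogueEntry:
    name: str
    description: str
    tags: List[str] = field(default_factory=list)


def _words(text: str) -> Set[str]:
    """Lower-case alphanumeric words; hyphenated names split into their parts."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(catalogue: List[CatalogueEntry], query: str) -> List[str]:
    """Rank entries by how many query words appear in their name, tags or description."""
    wanted = {w for w in _words(query) if len(w) > 2}   # drop very short words such as 'a', 'my'
    scored = []
    for entry in catalogue:
        haystack = _words(entry.name) | _words(" ".join(entry.tags)) | _words(entry.description)
        score = len(wanted & haystack)
        if score:
            scored.append((score, entry.name))
    # Highest score first; ties broken alphabetically for stable results
    return [name for _, name in sorted(scored, key=lambda s: (-s[0], s[1]))]
```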

Ownership models are also vital. Assign specific maintainers to each component, responsible for updates, bug fixes, and user support. Encouraging community contributions can distribute the maintenance workload and ensure components evolve based on actual user requirements.

Finally, monitoring component health across the organisation provides valuable insights for lifecycle decisions. Metrics such as adoption rates, performance data, security scan results, and support requests can help identify components that need attention. For organisations in regulated industries, lifecycle management should also include compliance tracking. Maintaining records of component versions, security assessments, and approval statuses ensures traceability and supports audit requirements. By enforcing strict lifecycle management, organisations can minimise downtime, reduce maintenance costs, and ensure their libraries remain reliable and up to date.
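These health signals can be combined into a periodic report that flags components for review. In this Python sketch the `ComponentHealth` fields are hypothetical, and the thresholds (a 5% failure rate, more than 10 support requests in 30 days) are illustrative assumptions to be tuned per organisation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ComponentHealth:
    name: str
    pipelines_using: int
    failure_rate: float          # fraction of runs that failed, 0.0 to 1.0
    open_critical_vulns: int
    support_requests_30d: int


def needs_attention(components: List[ComponentHealth]) -> List[str]:
    """Flag components for lifecycle review, listing the reasons for each."""
    flagged: List[str] = []
    for c in components:
        reasons = []
        if c.open_critical_vulns > 0:
            reasons.append("security findings")
        if c.failure_rate > 0.05:
            reasons.append("high failure rate")
        if c.pipelines_using == 0:
            reasons.append("unused: candidate for retirement")
        if c.support_requests_30d > 10:
            reasons.append("heavy support load")
        if reasons:
            flagged.append(f"{c.name}: {', '.join(reasons)}")
    return flagged
```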

Benefits and Challenges of Modular Pipelines

Modular pipelines are reshaping CI/CD processes by simplifying workflows, though they come with their own set of trade-offs that teams need to carefully manage.

Comparing Benefits and Drawbacks

Deciding whether to adopt modular pipelines involves weighing their impact on development workflows, team productivity, and operational costs. Below is a breakdown of the advantages and challenges:

| Benefits | Challenges |
|---|---|
| Faster Development Cycles: Reusing pre-built components speeds up development, reducing the need to create pipelines from scratch. | Initial Setup Complexity: Designing and documenting a library of reusable components requires upfront time and effort. |
| Consistent Quality Standards: Standardised components ensure uniform application of security checks, testing, and deployment practices across projects. | Version Management Overhead: Maintaining compatibility between components and dependent pipelines can become a time-consuming task. |
| Reduced Maintenance Effort: A fix or update to a single component benefits every pipeline that uses it, as soon as that pipeline picks up the new version. | Learning Curve: Teams may need training to understand component libraries, templates, and modular architecture. |
| Improved Cost Visibility: Centralised components make it easier to monitor resource usage and optimise cloud spending. | Documentation Demands: Comprehensive documentation, including usage examples and migration guides, is essential for effective use. |
| Scalability: Reusing infrastructure patterns allows organisations to scale projects without significantly increasing DevOps workload. | Dependency Complexity: Managing interdependencies between components can lead to complications when changes are required. |

While these trade-offs can initially slow productivity, the long-term benefits often outweigh the challenges. A phased approach - starting with smaller, non-critical projects - can help teams adjust gradually, refine their processes, and build confidence in their modular designs.

UK-Specific Considerations

For organisations in the UK, adopting modular pipelines comes with unique factors, particularly around cost control, regulatory compliance, and data sovereignty. Reusable components play a key role in addressing these areas.

Cost Optimisation

In an unpredictable economic climate, UK businesses are under pressure to manage cloud spending effectively. Modular pipelines help by standardising resource allocation, improving visibility into costs, and letting teams report expenses in pounds sterling. Components with built-in cost monitoring and resource limits make savings opportunities easier to spot. Abstracting deployment logic into reusable components also makes it easier to compare regional pricing across cloud providers and to trial cheaper regions, provided data residency requirements allow it.
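As a sketch of built-in cost monitoring, a component can estimate the sterling cost of a pipeline run from runner type and duration and compare it against a budget. The runner names and hourly rates below are hypothetical placeholders, not real provider prices; `Decimal` is used so currency amounts are not subject to binary floating-point rounding.

```python
from decimal import Decimal
from typing import Any, Dict, List

# Hypothetical hourly rates in GBP; real figures would come from the provider's pricing data
HOURLY_RATE_GBP: Dict[str, Decimal] = {
    "small-runner": Decimal("0.04"),
    "large-runner": Decimal("0.32"),
}


def pipeline_cost_gbp(jobs: List[Dict[str, Any]]) -> Decimal:
    """Sum a pipeline run's cost from each job's runner type and duration in minutes."""
    total = Decimal("0")
    for job in jobs:
        hours = Decimal(job["minutes"]) / Decimal(60)
        total += HOURLY_RATE_GBP[job["runner"]] * hours
    return total.quantize(Decimal("0.01"))   # round to whole pence


def within_budget(jobs: List[Dict[str, Any]], budget_gbp: Decimal) -> bool:
    """True if the estimated cost of the run does not exceed the budget."""
    return pipeline_cost_gbp(jobs) <= budget_gbp
```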

Regulatory Compliance

Compliance with UK GDPR, financial regulations, and government security standards is a critical concern for UK organisations. Modular pipelines can embed compliance checks directly into reusable components, ensuring consistent application of data protection measures, audit logging, and security controls. However, specific requirements, such as contractual or sector-specific obligations to keep data in the UK, can restrict design flexibility and require careful planning.

Data Sovereignty

Data sovereignty is another key consideration. Components that handle sensitive information must include robust controls to ensure data remains within the required geographical boundaries. This affects everything from database deployments to backup and disaster recovery strategies, requiring careful alignment with UK-specific regulations.
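A residency check can run before any deployment, covering backups and disaster recovery copies as well as primary databases. This Python sketch assumes a hypothetical internal policy in which "personal" data must stay in UK regions; the region identifiers are the London and UK regions of AWS (`eu-west-2`), Azure (`uksouth`, `ukwest`) and Google Cloud (`europe-west2`).

```python
from typing import Dict, List, Set

# Assumed internal policy: personal data stays in UK regions; public data may go elsewhere
UK_REGIONS: Set[str] = {"eu-west-2", "uksouth", "ukwest", "europe-west2"}
ALLOWED_REGIONS: Dict[str, Set[str]] = {
    "personal": UK_REGIONS,
    "public": UK_REGIONS | {"eu-west-1", "us-east-1"},
}


def check_residency(resources: List[Dict[str, str]]) -> List[str]:
    """Return a violation for every resource placed outside the regions its data class allows.

    Unknown data classes are allowed nowhere, so the check fails closed.
    """
    violations: List[str] = []
    for res in resources:
        allowed = ALLOWED_REGIONS.get(res["data_class"], set())
        if res["region"] not in allowed:
            violations.append(f"{res['name']}: {res['data_class']} data not permitted in {res['region']}")
    return violations
```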

Skills and Training

With thriving tech hubs in cities like London, Manchester, and Edinburgh, the demand for skilled DevOps professionals is high. To make modular systems successful, organisations must invest in thorough documentation and training programmes to equip their teams with the necessary expertise.

For UK organisations looking to implement modular pipelines while managing these challenges, working with specialists in DevOps transformation and cloud cost management - such as Hokstad Consulting (https://hokstadconsulting.com) - can provide valuable guidance. Their expertise can help navigate both technical complexities and regulatory demands, ensuring a smooth transition to modular systems.

Best Practices for UK Organisations

Implementing reusable components in modular pipelines requires a well-planned strategy that considers the specific needs and challenges of UK businesses. Here’s how organisations can optimise their approach while navigating regulatory, financial, and operational factors.

Adopt a Modular Architecture

Designing a modular, plugin-based architecture is key. Each pipeline element should be isolated, allowing for independent updates, replacements, or combinations. Common patterns - like security scans, compliance checks, and deployments - are ideal candidates for modularisation.

Every component should have clear input and output specifications. For example, a compliance checking module might take project metadata and regulatory standards as inputs, then produce a compliance report and a pass/fail status. This clarity simplifies testing, debugging, and maintenance.
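The compliance module described above can be sketched directly: project metadata and a list of standards go in, and a report plus a pass/fail status come out. In this Python example the rule sets (`data-protection`, `internal-security`) and metadata keys are hypothetical internal policies, not a statement of what any regulation requires.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

UK_REGIONS = {"eu-west-2", "uksouth", "ukwest", "europe-west2"}

# A rule inspects project metadata and returns (passed, description)
Rule = Callable[[Dict[str, Any]], Tuple[bool, str]]

# Hypothetical internal policies
RULES: Dict[str, List[Rule]] = {
    "data-protection": [
        lambda m: (m.get("data_region") in UK_REGIONS, "personal data stored in a UK region"),
        lambda m: (bool(m.get("audit_logging")), "audit logging enabled"),
    ],
    "internal-security": [
        lambda m: (bool(m.get("secrets_in_vault")), "secrets held in a secrets manager"),
    ],
}


@dataclass
class ComplianceResult:
    passed: bool
    report: List[str]


def check_compliance(metadata: Dict[str, Any], standards: List[str]) -> ComplianceResult:
    """Apply every rule for the requested standards and collect a line-by-line report."""
    report: List[str] = []
    passed = True
    for standard in standards:
        for rule in RULES[standard]:
            ok, description = rule(metadata)
            report.append(f"[{'PASS' if ok else 'FAIL'}] {standard}: {description}")
            passed = passed and ok
    return ComplianceResult(passed=passed, report=report)
```

Keeping the rules as data means a new standard can be added to the module without changing the interface every pipeline depends on.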

A centralised registry for components can prevent duplication and encourage organisation-wide improvements. Include useful metadata, such as supported platforms, version compatibility, and usage examples, to help teams choose the right components.

Semantic versioning is another essential practice. Increment the major version for changes that break compatibility, the minor version for backward-compatible features, and the patch version for backward-compatible bug fixes. This helps teams quickly see whether an update requires pipeline adjustments.

Once components are established, ensure quality and consistency through thorough documentation and code reviews.

Enforce Documentation and Code Reviews

Good documentation and rigorous reviews are vital for maintaining high standards. Provide detailed usage guides with real-world examples showing how to configure components, troubleshoot issues, and migrate between versions. Abstract examples often lack the context teams need to apply them to their own workflows.

Code reviews should be mandatory for all changes, no matter how small. These reviews should evaluate backward compatibility, documentation updates, and the potential impact on dependent pipelines. Involving team members who actively use the components ensures that changes address practical needs.

Document the architectural decisions and trade-offs made during the design process. This record helps future maintainers understand why certain choices were made and avoid reintroducing issues the original designers worked to prevent.

Maintain change logs that explain the business impact of updates, not just technical details. Teams need to know how changes affect their workflows, whether new features are available, and if any action is required on their part.

Create onboarding materials to help new team members understand the modular system. Include diagrams illustrating how components interact, common patterns for combining modules, and guidelines for contributing improvements to the shared library.

Use Expert Consulting Services

Transitioning to modular pipelines often demands expertise that goes beyond standard development skills. Consulting specialists can help organisations navigate the complexities of this shift, particularly when dealing with UK-specific regulations and cost management.

Hokstad Consulting (https://hokstadconsulting.com) offers tailored services for DevOps transformation and cloud cost engineering. Their expertise in automated CI/CD pipelines and custom development ensures that UK businesses can avoid common pitfalls and build efficient, reusable systems.

By embedding cost-optimisation logic into pipeline modules, Hokstad Consulting ensures every project benefits without requiring teams to become pricing experts. Their zero-downtime migration strategies are particularly valuable when shifting from monolithic to modular architectures, allowing workflows to continue seamlessly during the transition.

For organisations seeking ongoing support, their retainer-based model provides access to DevOps expertise without the need to hire full-time specialists. This approach works well for modular pipelines, where periodic reviews and optimisations can significantly enhance performance and reduce expenses.

Their "no savings, no fee" pricing model aligns their goals with those of the organisation, minimising financial risk and ensuring measurable outcomes. This can be particularly compelling for businesses looking to justify their investment in modular pipeline transformations.

Partnering with experts like Hokstad Consulting also helps address the skills gap many UK organisations face. Instead of building expertise from scratch, teams can accelerate their progress through hands-on collaboration while gaining valuable knowledge to sustain long-term improvements.

Conclusion

Reusable components in modular CI/CD pipelines are changing the way UK organisations handle software delivery. Moving away from monolithic systems towards modular approaches is central to modernising pipelines. By breaking down complex deployment processes into standardised, interchangeable modules, businesses can speed up deployment cycles, lower maintenance demands, and improve system reliability.

However, success hinges on good design and management. Clear interfaces, thorough documentation, and strong version control are the backbone of effective modular systems. Without these, modular setups can become unnecessarily complicated. Practices like semantic versioning, centralised registries, and rigorous testing help ensure components remain dependable and compatible across different teams and projects.

UK-specific challenges, such as GDPR compliance, financial regulations, and managing cloud costs in a competitive market, must also shape how these modules are designed. Embedding compliance checks and cost-management features directly into reusable components helps organisations stay efficient while meeting regulatory demands.

Adopting modular pipelines also requires a shift in team culture. Instead of working in isolated silos, teams need to embrace collaboration and shared ownership. This involves setting up processes like code reviews, maintaining shared documentation, and developing onboarding resources to help new team members navigate the modular system.

For many UK companies, working with specialist consultants can be the quickest route to success. Firms like Hokstad Consulting offer expertise to streamline implementation, and their "no savings, no fee" model is particularly attractive to businesses looking for measurable returns on their investment in modular pipelines.

The advantages of a well-executed modular pipeline - faster deployments, reduced maintenance, and improved reliability - make this approach a smart move for UK organisations. Achieving these benefits requires careful planning, the right tools, and expert guidance. By committing to this strategic transformation, UK businesses can not only meet their technical needs but also gain a competitive edge.

FAQs

How can reusable components in modular pipelines support GDPR compliance and data sovereignty for UK organisations?

Reusable components in modular pipelines help UK organisations stay compliant with UK GDPR and maintain data sovereignty. By standardising and securing how data is handled, these components help ensure personal data is processed in line with UK legal requirements, including any contractual or sector-specific obligations about where data must be stored.

With modular pipelines, organisations can simplify how data is reused and shared while keeping full control over where it is stored and processed. This reduces the risks tied to international data transfers, which UK GDPR restricts unless adequacy regulations or appropriate safeguards are in place. It also simplifies audits and makes it easier to keep up with changing regulations, paving the way for quicker and safer implementations.

What challenges do organisations face when implementing modular pipelines, and how can they address them?

Implementing modular pipelines often comes with its fair share of hurdles. Common issues include integration difficulties, scalability limitations, and the challenge of managing complex dependencies. These obstacles can slow down deployment processes and compromise system reliability.

To overcome these challenges, organisations should prioritise designing clear, standardised interfaces that simplify communication between components. Embracing containerisation can also help maintain consistency across different environments. Furthermore, incorporating comprehensive testing and automation into the workflow ensures smoother operations. It's equally important to avoid unnecessary over-complication and to structure reusable components thoughtfully, which can make systems more adaptable and easier to maintain.

By focusing on these strategies, businesses can optimise their CI/CD pipelines, enabling faster and more dependable deployments.

How can organisations in the UK manage version control and ensure compatibility when using reusable components in their CI/CD pipelines?

Organisations in the UK can simplify version control and maintain compatibility in their CI/CD pipelines by using semantic versioning (SemVer). This method provides a clear framework for tracking updates and managing dependencies, making changes to reusable components more transparent and easier to predict.

To go a step further, add automated dependency management tools to the pipeline. These tools check compatibility during every build or deployment, so incompatible component versions are caught before they are released. This minimises integration problems and supports more dependable rollouts.
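The core of such a check can be sketched in a few lines: each component declares the major version of every component it depends on, and the build fails if the versions locked for the pipeline do not match. The lockfile shape, the `requires` declarations and the component names in this Python example are hypothetical.

```python
from typing import Dict, List


def major(version: str) -> int:
    """Major number of a 'MAJOR.MINOR.PATCH' version string."""
    return int(version.split(".")[0])


def check_lockfile(locked: Dict[str, str], requires: Dict[str, Dict[str, int]]) -> List[str]:
    """Verify that every locked component's declared dependencies are present at the right major version.

    locked:   component -> exact version used by this pipeline
    requires: component -> {dependency: required major version}
    """
    problems: List[str] = []
    for component, deps in requires.items():
        if component not in locked:
            continue   # component not used by this pipeline
        for dep, needed_major in deps.items():
            if dep not in locked:
                problems.append(f"{component} needs {dep} {needed_major}.x but it is not in the pipeline")
            elif major(locked[dep]) != needed_major:
                problems.append(f"{component} needs {dep} {needed_major}.x, found {locked[dep]}")
    return problems
```

Running this on every build, and failing when the list is non-empty, turns a version mismatch into an early, readable error instead of a broken deployment.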

By adopting these strategies, UK organisations can create more efficient workflows, ensure consistency across projects, and achieve smoother deployments.