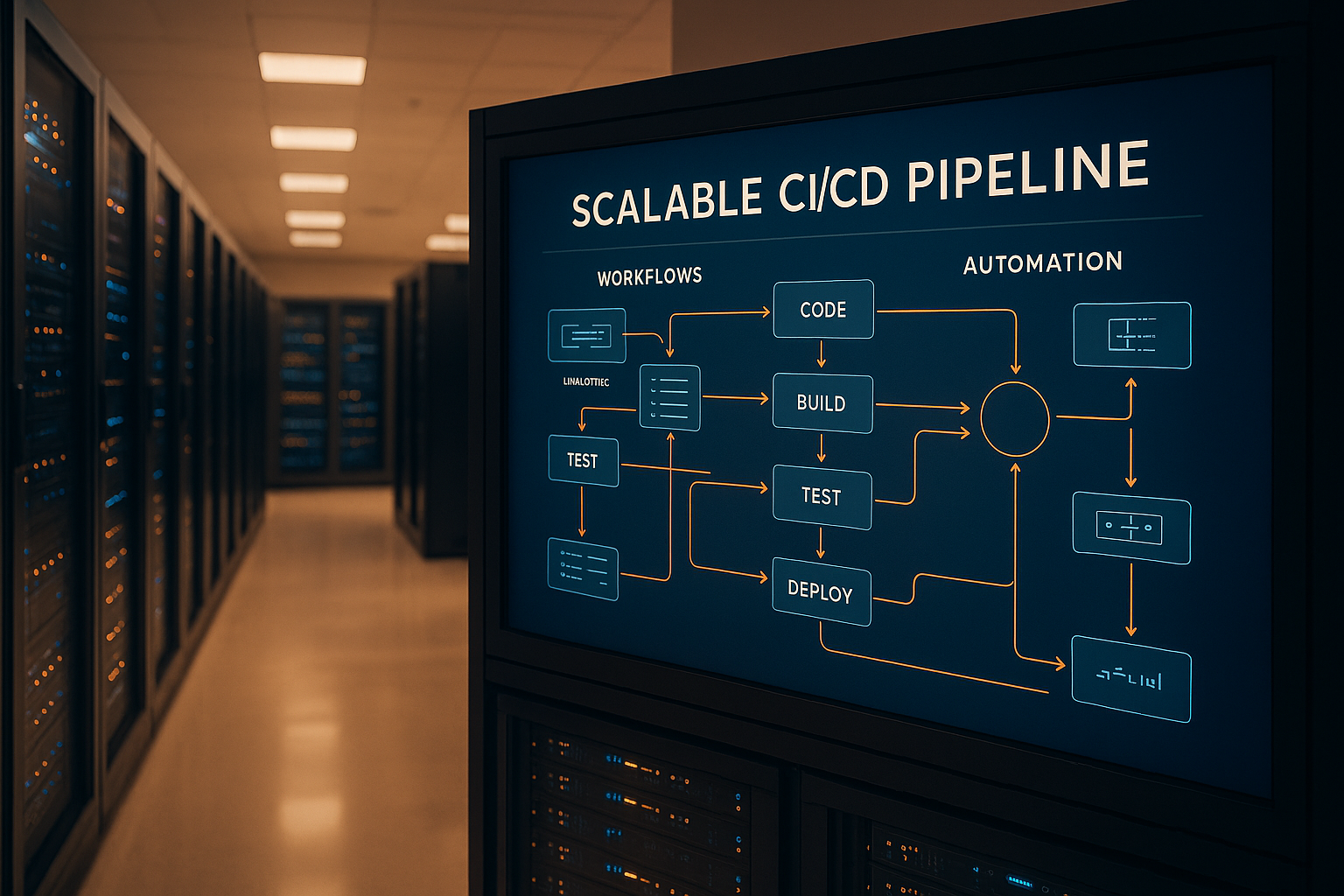

Want faster, more reliable software delivery at scale? Enterprises need CI/CD pipelines that can cope with large teams, complex systems, and compliance requirements. This guide explains how to design pipelines that combine automation, security, and cost efficiency while handling high deployment volumes, diverse environments, and regulatory obligations.

Key Takeaways:

- Pipeline Patterns: Multi-stage pipelines for compliance, parallel execution for speed, microservice-oriented for flexibility, self-service for decentralised teams, and fan-out/fan-in for global deployments.

- Core Components: Version control (e.g., Git), automated builds, containerisation and orchestration (Docker, Kubernetes), testing frameworks, and monitoring tools.

- Best Practices: Use a single artifact strategy, manage secrets securely, implement infrastructure as code (IaC), and optimise resources to control costs.

- Tooling Options: Jenkins for custom workflows, GitLab for an integrated all-in-one platform, GitHub Actions for cloud-native teams, and Kubernetes-native tools like Tekton for container-first strategies.

- Governance & Cost Control: Automate compliance, enforce access controls, track costs by project, and use metrics to continuously improve pipeline performance.

Bottom line: Scalable CI/CD pipelines empower enterprises to deliver software faster without sacrificing quality, security, or cost control. The right design patterns, tools, and practices make all the difference.


CI/CD Pipeline Design Patterns for Large Organisations

Large organisations often face the challenge of managing complex workflows, diverse deployment scenarios, and multiple teams. To tackle this, they need well-structured pipeline architectures that support scalability, maintainability, and efficiency across their CI/CD operations.

Core Design Patterns for Enterprise Pipelines

Multi-Stage Pipeline Pattern

This pattern breaks the delivery process into distinct phases, each with specific roles and quality checks. It typically includes development, testing, staging, and production environments. Each stage runs its own set of checks rather than repeating earlier ones, which avoids redundancy and keeps a clear separation of concerns.

For organisations with strict compliance demands, this pattern is ideal as it naturally incorporates checkpoints for approvals and audits. Its stage-based structure also makes troubleshooting easier. However, if not optimised, it can become time-consuming since stages are executed sequentially.
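A minimal Python sketch of the multi-stage idea, assuming each stage is a function that returns pass/fail and that production needs an explicit approval. The stage names and the `approvals` parameter are hypothetical and not tied to any particular CI tool.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Stage:
    name: str
    check: Callable[[], bool]        # runs the stage's quality checks; True means pass
    requires_approval: bool = False  # manual gate, e.g. a sign-off before production

def run_pipeline(stages: list[Stage], approvals: set[str]) -> list[str]:
    """Run stages strictly in order, stopping at a failed check or a missing approval.

    Returns the stages that completed, which doubles as a simple audit record.
    """
    completed: list[str] = []
    for stage in stages:
        if stage.requires_approval and stage.name not in approvals:
            print(f"{stage.name}: waiting for approval")
            break
        if not stage.check():
            print(f"{stage.name}: checks failed, later stages skipped")
            break
        completed.append(stage.name)
    return completed

stages = [
    Stage("build", lambda: True),
    Stage("test", lambda: True),
    Stage("staging", lambda: True),
    Stage("production", lambda: True, requires_approval=True),
]
print(run_pipeline(stages, approvals=set()))           # ['build', 'test', 'staging']
print(run_pipeline(stages, approvals={"production"}))  # all four stages
```

The sequential loop is also why this pattern is slower: nothing in a later stage starts until every earlier stage has passed.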

Parallel Execution Pattern

When speed is a priority, this pattern allows multiple tasks to run at the same time, reducing overall pipeline execution time. For instance, unit tests, security scans, and code quality checks can run concurrently, provided they don’t depend on each other.

While this approach can significantly cut down deployment times, it requires careful dependency management. Teams must identify which tasks can run independently. The complexity of managing concurrent streams increases with scale, but the time saved often makes it worthwhile.
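Working out which tasks can run independently is essentially a dependency-graph problem. The hypothetical Python sketch below groups tasks into "waves" whose members depend only on earlier waves, then runs each wave concurrently with `concurrent.futures`. The task names and the `run_task` placeholder are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor

def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave depends only on earlier waves."""
    remaining = {task: set(d) for task, d in deps.items()}
    done: set[str] = set()
    waves: list[list[str]] = []
    while remaining:
        ready = sorted(t for t, d in remaining.items() if d <= done)
        if not ready:
            raise ValueError(f"unresolvable dependencies (cycle or unknown task): {sorted(remaining)}")
        waves.append(ready)
        done.update(ready)
        for t in ready:
            del remaining[t]
    return waves

def run_task(name: str) -> str:
    # Placeholder for real work such as a test runner, scanner or linter.
    return f"{name}: ok"

deps = {
    "build": set(),
    "unit-tests": {"build"},
    "security-scan": {"build"},
    "code-quality": {"build"},
    "package": {"unit-tests", "security-scan", "code-quality"},
}

for wave in execution_waves(deps):
    with ThreadPoolExecutor() as pool:  # tasks within one wave run concurrently
        for result in pool.map(run_task, wave):
            print(result)
```

Declaring dependencies explicitly like this is what keeps concurrency manageable as the number of tasks grows: a cycle or a typo in a task name fails loudly instead of producing a race.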

Microservice-Oriented Pipeline Pattern

In organisations using microservice architectures, this pattern is essential. Instead of deploying a single monolithic application, teams manage numerous independent services, each with its own pipeline and deployment lifecycle.

This approach enables teams to deploy services independently, reducing the risk of widespread issues and allowing more frequent updates. However, it also demands integrated solutions for service discovery, load balancing, and monitoring inter-service dependencies.
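With one pipeline per service, a common approach (assumed here, not prescribed by the article) is to trigger only the pipelines whose service code changed. The `services/<name>/` layout, the shared `libs/` directory and the service names are hypothetical conventions.

```python
from pathlib import PurePosixPath

SERVICES = {"orders", "payments", "inventory"}  # hypothetical services under services/<name>/
SHARED_DIRS = {"libs"}                          # hypothetical shared code used by every service

def services_to_build(changed_files: list[str]) -> set[str]:
    """Return the services whose pipelines should run for this change set."""
    affected: set[str] = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        if parts and parts[0] in SHARED_DIRS:
            return set(SERVICES)  # shared code changed: every service is affected
        if len(parts) > 1 and parts[0] == "services" and parts[1] in SERVICES:
            affected.add(parts[1])
    return affected

print(services_to_build(["services/orders/api.py", "README.md"]))  # {'orders'}
print(services_to_build(["libs/auth/token.py"]))                    # all three services
```

Treating shared libraries as "rebuild everything" is the conservative choice; mapping exactly which services import which library is more efficient but is the kind of inter-service dependency tracking the paragraph above warns about.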

Self-Service Pipeline Pattern

As organisations grow, centralised pipeline management can become a bottleneck. The self-service pattern addresses this by enabling development teams to create and manage their own pipelines within predefined guardrails.

Using standardised templates and components provided by platform teams, development teams can customise pipelines to meet their specific needs. This decentralised approach accelerates iteration cycles but requires strong governance to maintain consistency and compliance.
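One way to picture "templates within guardrails": the platform team owns certain locked stages, and teams may add or adjust anything else. This Python sketch assumes pipelines are plain dictionaries of stage name to settings; the template, stage names and registry URL are hypothetical.

```python
import copy

# Hypothetical platform-owned template. Locked stages cannot be overridden by teams.
PLATFORM_TEMPLATE = {
    "build": {"image": "registry.example.com/build:1.4"},
    "security-scan": {"fail_on": "high"},
    "deploy": {"requires_approval": True},
}
LOCKED_STAGES = {"security-scan", "deploy"}

def render_pipeline(team_overrides: dict[str, dict]) -> dict[str, dict]:
    """Merge a team's customisations onto the template, rejecting edits to locked stages."""
    violations = LOCKED_STAGES & set(team_overrides)
    if violations:
        raise PermissionError(f"locked stages cannot be overridden: {sorted(violations)}")
    pipeline = copy.deepcopy(PLATFORM_TEMPLATE)  # never mutate the shared template
    deploy = pipeline.pop("deploy")
    for stage, settings in team_overrides.items():
        pipeline.setdefault(stage, {}).update(settings)  # add new stages or tweak unlocked ones
    pipeline["deploy"] = deploy  # deploy always stays last
    return pipeline

pipeline = render_pipeline({"build": {"cache": True}, "integration-tests": {"parallel": 4}})
print(list(pipeline))  # ['build', 'security-scan', 'integration-tests', 'deploy']
```

Teams get freedom where it is safe (extra stages, build tuning) while the scan and the approval gate remain identical across every pipeline.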

Fan-Out/Fan-In Pattern

This pattern is particularly useful for deployments that need to coordinate across multiple components, environments, or regions. It involves splitting the pipeline into parallel streams (fan-out) and then converging them (fan-in) before proceeding.

Ideal for global deployments or complex integrations, the fan-out/fan-in pattern helps minimise downtime and keeps systems synchronised, because nothing proceeds until every parallel stream has converged. It is especially effective when updates must roll out across multiple regions or dependent systems.
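The fan-out/fan-in shape maps naturally onto a concurrent "gather" step. In this sketch, `deploy_region` is a hypothetical stand-in for a real regional deployment (it only simulates work), and the region names are illustrative. `asyncio.gather` fans out to every region; the fan-in step proceeds only if all regions succeeded.

```python
import asyncio
from typing import Awaitable, Callable

Deployer = Callable[[str, str], Awaitable[bool]]

async def deploy_region(region: str, version: str) -> bool:
    """Hypothetical stand-in for a real regional deployment: simulated delay, always succeeds."""
    await asyncio.sleep(0.01)
    return True

async def release(version: str, regions: list[str], deploy: Deployer = deploy_region) -> bool:
    # Fan-out: start every regional deployment concurrently.
    results = await asyncio.gather(*(deploy(r, version) for r in regions))
    # Fan-in: wait for all of them, then continue only if every region succeeded.
    failed = [r for r, ok in zip(regions, results) if not ok]
    if failed:
        print(f"halting release {version}; failed regions: {failed}")
        return False
    print(f"release {version} live in all regions; continuing to global checks")
    return True

asyncio.run(release("1.8.0", ["eu-west-2", "us-east-1", "ap-southeast-2"]))
```

Passing the deployer in as a parameter keeps the coordination logic testable: a fake deployer that fails one region exercises the halt path without touching real infrastructure.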

These patterns form the foundation of scalable CI/CD pipelines. The next step is determining which one aligns best with your organisation’s needs.

Choosing the Right Pipeline Pattern

The choice of pipeline pattern depends on several factors, including team structure, application architecture, compliance needs, and performance goals. Here’s a quick comparison to help guide the decision:

| Pattern | Best For | Scalability | Complexity | Time to Deploy |
|---|---|---|---|---|
| Multi-Stage | Regulated industries, clear approvals | Medium | Low | Slower |
| Parallel Execution | Fast feedback, independent components | High | Medium | Faster |
| Microservice-Oriented | Distributed architectures, autonomous teams | Very High | High | Variable |
| Self-Service | Large teams, diverse requirements | Very High | Medium | Faster |
| Fan-Out/Fan-In | Global deployments, coordinated releases | High | High | Medium |

In practice, large organisations often adopt hybrid approaches. For example, a microservice-oriented pipeline may use parallel execution within individual services while employing a fan-out/fan-in strategy for coordinated global releases.

The maturity of your organisation also plays a role. Teams new to CI/CD might start with simpler multi-stage patterns, while more experienced teams can handle complex designs. Infrastructure is another critical factor - patterns like parallel execution and fan-out/fan-in require robust computing resources and advanced orchestration tools.

Compliance needs can further influence the choice. Industries with strict regulations may lean towards multi-stage pipelines for their clear audit trails, even if it means sacrificing speed.

For organisations seeking to optimise their CI/CD pipelines, expert advice from Hokstad Consulting can help implement tailored patterns that enhance both reliability and efficiency.

Components and Best Practices for Scalable Pipelines

Creating scalable CI/CD pipelines means combining key components and proven practices to achieve a balance between efficiency and reliability.

Core Components of Enterprise CI/CD Pipelines

Source Code Management and Version Control

A solid version control system is the backbone of any enterprise pipeline. Git-based platforms are popular choices, offering features such as branch protection rules and merge controls that let large teams collaborate smoothly. Choosing a branching strategy - whether trunk-based development or GitFlow - depends on your team's release schedule.

For organisations deploying multiple times a day, trunk-based development is often ideal. On the other hand, GitFlow is better suited for teams with planned releases and longer testing cycles.

Automated Build Systems

Automating the build process is essential for handling diverse codebases. Enterprise build systems should support multiple programming languages, produce consistent, repeatable builds, and resolve complex dependency graphs reliably.

Key tasks like compilation, dependency resolution, static analysis, and testing should be automated. Leveraging caching and running builds in parallel can significantly cut down build times.
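Caching only pays off if the cache key changes exactly when dependencies change. A common convention, assumed here, is to hash the dependency lockfiles: an unchanged lockfile reuses the cache, and any edit forces a fresh install. The key prefix and file names are illustrative.

```python
import hashlib
import tempfile
from pathlib import Path

def cache_key(prefix: str, lockfiles: list[Path]) -> str:
    """Derive a deterministic cache key from the contents of dependency lockfiles."""
    digest = hashlib.sha256()
    for path in sorted(lockfiles):      # sort so argument order doesn't change the key
        digest.update(path.name.encode())
        digest.update(path.read_bytes())
    return f"{prefix}-{digest.hexdigest()[:16]}"

with tempfile.TemporaryDirectory() as tmp:
    lock = Path(tmp) / "package-lock.json"
    lock.write_text('{"lockfileVersion": 3}')
    before = cache_key("deps-linux", [lock])
    lock.write_text('{"lockfileVersion": 3, "packages": {}}')
    print(before == cache_key("deps-linux", [lock]))  # False: dependencies changed, cache invalidated
```

Hashing the file name rather than the full path keeps keys identical across build agents with different working directories, so caches can be shared between them.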

Containerisation and Orchestration

Using containerisation tools like Docker alongside orchestration platforms like Kubernetes ensures consistent environments from development to production.

Container registries act as central hubs for storing and distributing container images. They also provide image versioning through tags and immutable digests, security scanning, and access management. Private registries add an extra layer of security for proprietary applications.

Artifact Repositories and Management

Artifact repositories store build outputs, libraries, and dependencies in one place. They support formats like Maven, npm, NuGet, and Docker images. Enterprise-level repositories often include features like vulnerability scanning, licence compliance checks, and workflows for promoting builds between environments.

Using versioning strategies and retention policies ensures proper artifact management. Immutable artifacts guarantee that the tested version is the same one deployed to production.
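Retention rules are easy to state as code. The sketch below assumes a hypothetical policy: keep the N most recent builds of each artifact, and never delete a version that is still deployed somewhere. The `Build` record and its fields are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Build:
    artifact: str
    version: str
    created: datetime

def builds_to_delete(builds: list[Build], deployed: set[tuple[str, str]],
                     keep_latest: int = 3) -> list[Build]:
    """Select builds outside the retention window that are not deployed anywhere."""
    by_artifact: dict[str, list[Build]] = {}
    for b in builds:
        by_artifact.setdefault(b.artifact, []).append(b)
    doomed: list[Build] = []
    for versions in by_artifact.values():
        versions.sort(key=lambda b: b.created, reverse=True)  # newest first
        for b in versions[keep_latest:]:
            if (b.artifact, b.version) not in deployed:        # deployed versions are always kept
                doomed.append(b)
    return doomed

builds = [Build("api", f"1.0.{i}", datetime(2024, 1, i + 1)) for i in range(6)]
deployed = {("api", "1.0.0")}  # an old version still running in production
print([b.version for b in builds_to_delete(builds, deployed)])  # ['1.0.2', '1.0.1']
```

Protecting deployed versions matters for rollback: deleting the artifact that production is currently running would leave nothing to redeploy.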

Testing Frameworks

Testing should cover multiple levels, from unit tests to end-to-end evaluations, balancing speed and thoroughness. Integration testing ensures components work together, while performance testing checks whether the application can handle expected loads. Security testing, including static and dynamic scans, helps identify vulnerabilities before release.

Monitoring and Observability Tools

Monitoring tools track metrics like build success rates, deployment frequency, and recovery times. Observability tools, including logs, metrics, and traces, help diagnose problems quickly and drive improvements. These tools are vital for both the pipeline and the applications running in production.

Best Practices for Scalability and Reliability

Building on these components, the following practices help ensure pipelines remain efficient and scalable.

Single Artifact Strategy

By building an artifact once and promoting it through all environments, teams can eliminate inconsistencies and save time. This ensures that what is tested in staging is exactly what gets deployed in production.
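"Build once, promote everywhere" can be enforced by tracking the artifact's content digest. In this sketch (environment names and promotion order are hypothetical), an artifact may enter an environment only if the exact same digest was verified in the previous one, so a rebuild made specially for production is refused.

```python
import hashlib

PROMOTION_ORDER = ["dev", "staging", "production"]  # hypothetical environment chain

def artifact_digest(content: bytes) -> str:
    """Content-addressed identity: identical bytes always give the identical digest."""
    return "sha256:" + hashlib.sha256(content).hexdigest()

class PromotionLedger:
    """Records which artifact digests have passed verification in each environment."""

    def __init__(self) -> None:
        self.verified: dict[str, set[str]] = {env: set() for env in PROMOTION_ORDER}

    def record_verified(self, env: str, digest: str) -> None:
        self.verified[env].add(digest)

    def can_promote(self, digest: str, target: str) -> bool:
        idx = PROMOTION_ORDER.index(target)
        if idx == 0:
            return True  # the first environment accepts the freshly built artifact
        return digest in self.verified[PROMOTION_ORDER[idx - 1]]

ledger = PromotionLedger()
build = artifact_digest(b"app 1.4.2 binary")
ledger.record_verified("dev", build)
print(ledger.can_promote(build, "staging"))       # True: the same bytes passed dev
ledger.record_verified("staging", build)
print(ledger.can_promote(build, "production"))    # True: verified in staging
rebuilt = artifact_digest(b"app 1.4.2 binary, rebuilt")
print(ledger.can_promote(rebuilt, "production"))  # False: a rebuild is a different artifact
```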

Secrets and Configuration Management

Sensitive data such as API keys and database credentials should never appear in code or build artifacts. Tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault securely store and manage access to these secrets.

Separating environment-specific configurations from application logic is equally vital. Tools like Helm for Kubernetes or AWS Parameter Store make it easier to manage configurations securely across environments.
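Keeping secrets and environment-specific settings out of the artifact usually means resolving them at deploy time. The sketch below assumes a hypothetical convention: config values written as `secret:NAME` are references, resolved from environment variables that a secrets manager (such as Vault or AWS Secrets Manager) has injected into the job. The config keys, hosts and overlay structure are illustrative.

```python
import os

BASE = {"log_level": "info", "db_host": "localhost", "db_password": "secret:DB_PASSWORD"}
OVERLAYS = {  # hypothetical per-environment overrides; no secret values are stored here
    "staging": {"db_host": "db.staging.internal"},
    "production": {"db_host": "db.prod.internal", "log_level": "warn"},
}

def merged_config(env: str) -> dict[str, str]:
    return {**BASE, **OVERLAYS.get(env, {})}

def resolve_config(env: str) -> dict[str, str]:
    """Merge base and environment overlay, then resolve secret references."""
    resolved = {}
    for key, value in merged_config(env).items():
        if value.startswith("secret:"):
            name = value.removeprefix("secret:")
            if name not in os.environ:
                raise KeyError(f"secret {name} was not injected for {env}")  # fail fast, no defaults
            resolved[key] = os.environ[name]
        else:
            resolved[key] = value
    return resolved

def redacted(env: str, config: dict[str, str]) -> dict[str, str]:
    """Copy that is safe to log: secret-backed keys are masked."""
    refs = {k for k, v in merged_config(env).items() if v.startswith("secret:")}
    return {k: ("***" if k in refs else v) for k, v in config.items()}

os.environ["DB_PASSWORD"] = "example-only"  # for this demo only; normally injected by the secrets manager
cfg = resolve_config("production")
print(redacted("production", cfg))  # {'log_level': 'warn', 'db_host': 'db.prod.internal', 'db_password': '***'}
```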

Infrastructure as Code (IaC)

Treating infrastructure as code allows teams to version, test, and deploy infrastructure in the same way they handle application code. Tools like Terraform, AWS CloudFormation, and Azure Resource Manager templates make deployments consistent and repeatable.

IaC also helps reduce configuration drift, supports disaster recovery, and provides an audit trail for compliance.
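Drift detection boils down to comparing the declared state in version control with what is actually running. Tools like Terraform do this through their plan step. The Python sketch below shows only the underlying comparison on plain dictionaries, not how any specific tool implements it; the resource names and attributes are hypothetical.

```python
def detect_drift(declared: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare declared vs observed resources and describe every difference."""
    report: dict[str, list[str]] = {}
    for name in sorted(declared.keys() | actual.keys()):
        want, have = declared.get(name), actual.get(name)
        if have is None:
            report[name] = ["missing (declared but not found)"]
        elif want is None:
            report[name] = ["unmanaged (exists but not declared)"]
        else:
            diffs = [f"{attr}: declared {want.get(attr)!r}, actual {have.get(attr)!r}"
                     for attr in sorted(want.keys() | have.keys())
                     if want.get(attr) != have.get(attr)]
            if diffs:
                report[name] = diffs
    return report

declared = {"web-lb": {"port": 443, "tls": True}, "cache": {"nodes": 3}}
actual = {"web-lb": {"port": 443, "tls": False}, "debug-vm": {"size": "large"}}
for resource, problems in detect_drift(declared, actual).items():
    print(resource, problems)
```

Unmanaged resources (created by hand outside IaC) are reported alongside modified ones, since both undermine the audit trail that IaC is meant to provide.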

Pipeline Visibility and Metrics

Dashboards provide real-time insights into pipeline health, showing metrics like build success rates and deployment lead times. Automated notifications keep stakeholders informed without overwhelming them, focusing on actionable events for faster responses.
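One simple way to keep notifications actionable, assumed here rather than taken from a specific tool, is to alert only when a pipeline's status changes: the first failure after a success, and the recovery afterwards. Repeated failures of an already-broken pipeline stay quiet.

```python
from typing import Optional

def transition_alert(previous: Optional[str], current: str) -> Optional[str]:
    """Return an alert message only when a pipeline's status changes state."""
    if current == "failed" and previous != "failed":
        return "pipeline broke"
    if current == "success" and previous == "failed":
        return "pipeline recovered"
    return None  # steady state (still green or still red): no notification

history = ["success", "success", "failed", "failed", "failed", "success", "success"]
alerts = [transition_alert(prev, cur) for prev, cur in zip([None] + history, history)]
print([a for a in alerts if a])  # ['pipeline broke', 'pipeline recovered']
```

Seven runs produce two messages, each of which calls for a human decision, which is the balance the paragraph above describes.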

Failure Recovery and Rollback Strategies

Automated rollback mechanisms allow teams to revert to previous versions quickly when issues occur. Deployment strategies like blue-green and canary releases reduce risk and allow for rapid recovery. Tools like circuit breakers and health checks prevent failures from spreading across systems, especially in microservice architectures.
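Automated rollback needs a rule for deciding when a release is unhealthy. Below is one possible canary check, sketched under stated assumptions: compare the canary's error rate with the stable baseline and roll back if it is worse by more than a tolerance. The tolerance and minimum request count are hypothetical values that would need tuning.

```python
from dataclasses import dataclass

@dataclass
class Window:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0

def canary_decision(baseline: Window, canary: Window,
                    tolerance: float = 0.01, min_requests: int = 500) -> str:
    """Return 'wait', 'rollback' or 'promote' for a canary release."""
    if canary.requests < min_requests:
        return "wait"      # not enough traffic yet to judge
    if canary.error_rate > baseline.error_rate + tolerance:
        return "rollback"  # canary is measurably worse than the stable version
    return "promote"

print(canary_decision(Window(10_000, 20), Window(800, 3)))   # promote
print(canary_decision(Window(10_000, 20), Window(800, 40)))  # rollback
```

The minimum-traffic guard prevents a handful of early errors from triggering a rollback, or a handful of early successes from triggering a premature promotion.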

Resource Optimisation and Cost Control

Efficient resource usage is key to balancing performance and cost. Optimising build times with caching and parallel execution can reduce expenses and improve developer productivity. Auto-scaling build agents add capacity when demand rises and release it when demand falls, so teams avoid paying for idle capacity.

Regular audits can identify unused resources and inefficient processes, helping teams fine-tune configurations based on actual usage.
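Auto-scaling of build agents is often a simple control rule. This sketch assumes a hypothetical scaler that sizes the agent pool from queued and running jobs, clamped between a floor (for fast job pickup) and a ceiling (a cost cap). Real autoscalers, such as the Kubernetes Cluster Autoscaler, use their own, more involved logic.

```python
import math

def desired_agents(queued_jobs: int, running_jobs: int, jobs_per_agent: int = 2,
                   min_agents: int = 1, max_agents: int = 20) -> int:
    """Agents needed for current demand, bounded by a floor and a cost ceiling."""
    needed = math.ceil((queued_jobs + running_jobs) / jobs_per_agent)
    return max(min_agents, min(max_agents, needed))

print(desired_agents(queued_jobs=0, running_jobs=0))     # 1: idle, scale down to the floor
print(desired_agents(queued_jobs=7, running_jobs=4))     # 6
print(desired_agents(queued_jobs=100, running_jobs=10))  # 20: capped by max_agents
```

The `max_agents` ceiling is where cost control meets scalability: it bounds spend during spikes at the price of longer queues.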

Best Practices Quick Reference Table

| Practice | Scalability Impact | Cost Impact | Risk Mitigation | Implementation Effort |
|---|---|---|---|---|
| Single Artifact Strategy | High | Medium | High | Low |
| Secrets Management | Medium | Low | Very High | Medium |
| Infrastructure as Code | Very High | High | High | High |
| Automated Testing | High | Medium | Very High | Medium |
| Pipeline Monitoring | High | Low | High | Low |
| Failure Recovery | Medium | Low | Very High | High |
| Resource Optimisation | Medium | Very High | Low | Medium |
| Configuration Management | High | Low | High | Medium |

These components and practices are the building blocks of scalable CI/CD pipelines for enterprises. For organisations embarking on DevOps transformations, expert guidance can be invaluable. Hokstad Consulting specialises in fine-tuning DevOps processes, helping businesses streamline costs and improve deployment efficiency.


Tools and Automation for Enterprise Pipelines

Choosing the right tools and automation strategies can be the difference between a pipeline that falters under enterprise demands and one that scales efficiently. Modern enterprises need platforms capable of handling complex workflows at scale. Building on the core components above, the tools and practices outlined below can further streamline enterprise pipelines.

Enterprise CI/CD Tools

Jenkins for Large-Scale Operations

Jenkins remains a staple in many enterprise environments, especially where customisation and control are critical. Its vast plugin ecosystem supports integration with nearly any tool, while its distributed build capabilities allow horizontal scaling by adding build agents across servers or cloud instances.

With Jenkins Pipeline as Code, teams define their entire build process in a version-controlled Jenkinsfile written in a Groovy-based syntax. This approach supports complex workflows, including conditional deployments, parallel executions, and integrations with legacy systems.

GitLab CI/CD for Integrated Workflows

GitLab offers a unified platform that combines source code management, CI/CD, and project management. This integrated approach reduces context switching and simplifies tool management for large teams. Its Auto DevOps feature automatically detects project types and configures pipelines accordingly.

The platform’s built-in container registry and Kubernetes integration make it ideal for organisations adopting cloud-native architectures. Compliance features like merge request approvals and audit logs help enterprises adhere to regulatory standards.

GitHub Actions for Cloud-Native Teams

For teams already using GitHub, GitHub Actions is a natural choice. With over 10,000 pre-built actions available in its marketplace, it simplifies pipeline development. The matrix build feature enables testing across multiple operating systems and runtime versions simultaneously.
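To show what a matrix build does, the sketch below expands a matrix in Python: every combination of the listed values becomes a job, minus any excluded combinations. It mirrors the idea behind GitHub Actions' `strategy.matrix` with `exclude`, but it is an illustration, not GitHub's implementation; the OS and version values are examples.

```python
from itertools import product
from typing import Sequence

def expand_matrix(matrix: dict[str, list], exclude: Sequence[dict] = ()) -> list[dict]:
    """Turn a build matrix into one job per combination, skipping excluded combinations."""
    keys = list(matrix)
    jobs = []
    for values in product(*(matrix[k] for k in keys)):
        job = dict(zip(keys, values))
        # An exclude rule matches when every key it names has the same value in the job.
        if any(all(job.get(k) == v for k, v in rule.items()) for rule in exclude):
            continue
        jobs.append(job)
    return jobs

jobs = expand_matrix(
    {"os": ["ubuntu-latest", "windows-latest"], "python": ["3.11", "3.12"]},
    exclude=[{"os": "windows-latest", "python": "3.11"}],
)
for job in jobs:
    print(job)  # three jobs: the 2 x 2 matrix minus the excluded combination
```

Because jobs multiply with every added dimension, exclusions are the main lever for keeping matrix builds, and their per-minute cost, under control.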

GitHub Actions integrates tightly with GitHub’s security features, such as dependency scanning and secret detection, making it a strong option for organisations focused on security throughout their development lifecycle.

Kubernetes-Native Solutions

Tools like Tekton and Argo Workflows run directly on Kubernetes, making them well suited to organisations with container-first strategies. Their work runs as Kubernetes pods, so build capacity grows and shrinks with the cluster rather than with a fixed pool of build agents. Tekton pipelines are defined as Kubernetes custom resources, allowing teams to apply infrastructure-as-code practices to their CI/CD processes.

Automation and Infrastructure as Code

Once the right CI/CD tools are in place, automating environment provisioning and configuration can streamline deployments across all stages.

Automated Environment Provisioning

Tools like Terraform and AWS CloudFormation enable enterprise pipelines to automatically provision testing and deployment environments. These tools can create complete application stacks - including databases, load balancers, and monitoring systems - within minutes. Automation extends to the entire environment lifecycle, from setup to updates and eventual teardown.
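The teardown end of the lifecycle is where costs tend to leak. In this hypothetical sketch, each automatically provisioned environment (for example, one per pull request) carries an expiry time, and a scheduled job lists the ones to destroy; in practice that job might then run something like `terraform destroy` against each environment's workspace. The names, TTL and `pinned` flag are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class PreviewEnv:
    name: str
    created: datetime
    ttl: timedelta = timedelta(hours=48)
    pinned: bool = False  # e.g. kept alive for a demo; excluded from cleanup

    def expired(self, now: datetime) -> bool:
        return not self.pinned and now >= self.created + self.ttl

def environments_to_teardown(envs: list[PreviewEnv], now: datetime) -> list[str]:
    return [e.name for e in envs if e.expired(now)]

now = datetime(2024, 6, 3, 9, 0, tzinfo=timezone.utc)
envs = [
    PreviewEnv("pr-101", now - timedelta(hours=60)),
    PreviewEnv("pr-102", now - timedelta(hours=5)),
    PreviewEnv("pr-099", now - timedelta(days=7), pinned=True),
]
print(environments_to_teardown(envs, now))  # ['pr-101']
```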

Containerised Build Runners

Containerised build runners ensure consistent and isolated build environments. Docker-based runners can be pre-configured with specific tool versions, dependencies, and security configurations. Kubernetes-based systems can dynamically scale build pods to meet demand, balancing performance and cost.

Configuration Management Integration

Integrating configuration management tools like Ansible, Chef, or Puppet ensures that applications are deployed with the correct settings for each environment. This eliminates manual configuration steps and reduces errors. Advanced setups may use Helm for Kubernetes or AWS Systems Manager Parameter Store to manage environment-specific configurations separately from application code, enabling seamless deployment across environments.

Automated Security and Compliance

Security processes can be integrated directly into the pipeline. Tools like SonarQube perform static code analysis, while container scanning tools identify vulnerabilities in base images and dependencies. Compliance automation includes creating audit trails, enforcing approval workflows for production deployments, and applying security policies automatically. Tools like Open Policy Agent enforce organisational policies as code, ensuring consistent application across deployments.
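Open Policy Agent policies are normally written in its Rego language. To keep this article to one language, the sketch below expresses the same idea in Python instead: each policy is a plain function over a deployment request, and every violation is collected so the pipeline can block the deployment. The request fields, the approved registry and the rules themselves are hypothetical examples.

```python
import re

DIGEST_PINNED = re.compile(r"@sha256:[0-9a-f]{64}$")
ALLOWED_REGISTRIES = ("registry.example.com/",)  # hypothetical approved registry

def check_image_pinned(req: dict) -> list[str]:
    return [] if DIGEST_PINNED.search(req["image"]) else ["image must be pinned by sha256 digest"]

def check_registry(req: dict) -> list[str]:
    return [] if req["image"].startswith(ALLOWED_REGISTRIES) else ["image must come from an approved registry"]

def check_prod_approval(req: dict) -> list[str]:
    if req["environment"] == "production" and not req.get("approved_by"):
        return ["production deployments need a recorded approver"]
    return []

POLICIES = [check_image_pinned, check_registry, check_prod_approval]

def evaluate(req: dict) -> list[str]:
    """Run every policy and collect all violations; an empty list means allow."""
    return [msg for policy in POLICIES for msg in policy(req)]

request = {"image": "docker.io/library/nginx:latest", "environment": "production"}
for violation in evaluate(request):
    print("DENY:", violation)
```

Reporting every violation at once, rather than stopping at the first, saves teams several round-trips when a request breaks more than one rule.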

Tool Comparison for Scalability and Cost

Selecting tools that balance scalability and cost is crucial for enterprise pipelines.

| Tool | Scalability | Integration Options | Cost Model | Best For |
|---|---|---|---|---|
| Jenkins | Very High | Extensive (1,800+ plugins) | Self-hosted (infrastructure costs) | Complex workflows, legacy integration |
| GitLab CI/CD | High | Built-in + marketplace | Tiered SaaS or self-hosted | Integrated development lifecycle |
| GitHub Actions | High | 10,000+ marketplace actions | Pay-per-use (minutes) | GitHub-centric workflows |
| Azure DevOps | High | Microsoft ecosystem focus | Tiered pricing | Microsoft technology stack |
| Tekton | Very High | Kubernetes-native | Infrastructure costs only | Cloud-native, container-first |

Cost Considerations

The total cost of ownership goes beyond licensing fees. Self-hosted tools like Jenkins require ongoing management of infrastructure, security updates, and backups. Cloud-based solutions offer predictable pricing but can become costly for compute-intensive builds.

A hybrid approach often works best, using cloud services for development and testing while maintaining on-premises infrastructure for production. This is particularly useful for organisations with data sovereignty or strict compliance requirements. Build caching can also significantly reduce costs and build times by reusing dependencies, compiled artefacts, and test results.

For enterprises undergoing large-scale DevOps transformations, expert advice on tool selection and implementation can save time and resources. Hokstad Consulting specialises in helping organisations identify and implement the right tools to meet their current needs while planning for future growth, ensuring both functionality and cost-efficiency.

Governance, Cost Control, and Continuous Improvement

Managing enterprise CI/CD pipelines effectively requires a balance of governance, cost management, and adaptability. Without proper oversight, these pipelines can quickly become sources of inefficiency, security vulnerabilities, and unnecessary expenses.

Governance and Compliance in Pipelines

Audit Trails and Automated Compliance

For enterprises, keeping a detailed record of every deployment, configuration change, and access event is non-negotiable. These audit trails are essential for automated compliance, ensuring processes meet regulatory standards like GDPR for personal data or PCI DSS for payment card data. Tools such as Open Policy Agent allow organisations to enforce these requirements through code, maintaining consistency across all projects.
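An audit trail is only useful if it can be trusted. One way to make an append-only log tamper-evident, shown here as an illustration rather than something the article or any particular tool prescribes, is to chain entries by hash: editing an earlier record breaks verification of the chain. The event fields are hypothetical, and a real log would also record timestamps.

```python
import hashlib
import json

def _entry_hash(body: dict, prev_hash: str) -> str:
    payload = json.dumps(body, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode()).hexdigest()

class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, target: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "genesis"
        body = {"actor": actor, "action": action, "target": target}
        self.entries.append({**body, "prev": prev, "hash": _entry_hash(body, prev)})

    def verify(self) -> bool:
        """Recompute every hash; any edited entry breaks the chain."""
        prev = "genesis"
        for e in self.entries:
            body = {k: e[k] for k in ("actor", "action", "target")}
            if e["prev"] != prev or e["hash"] != _entry_hash(body, prev):
                return False
            prev = e["hash"]
        return True

log = AuditLog()
log.append("alice", "deploy", "payments:1.4.2 -> production")
log.append("bob", "config-change", "payments/timeout=30s")
print(log.verify())                  # True
log.entries[0]["actor"] = "mallory"  # simulate tampering with history
print(log.verify())                  # False
```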

Role-Based Access and Approval Processes

Good governance starts with clear access controls. Role-based access ensures developers can manage their builds but prevents unauthorised production deployments. Senior engineers might have broader permissions, while infrastructure changes remain under the operations team’s control.

Critical deployments benefit from multi-stage approval workflows. For example, production releases might need sign-offs from both technical and business leaders, while emergency fixes can follow expedited paths that still maintain audit trails. Automating these workflows based on risk - allowing low-risk changes to proceed automatically while flagging high-impact ones for review - adds another layer of safety.
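Risk-based routing can start as a few explicit rules. In this hypothetical sketch, a change is scored from a handful of attributes and mapped to an approval path; the attributes, weights and thresholds are invented for illustration and would need to reflect an organisation's own risk model.

```python
from dataclasses import dataclass

@dataclass
class Change:
    environment: str
    lines_changed: int
    touches_infra: bool = False
    emergency: bool = False

def approval_path(change: Change) -> str:
    if change.environment != "production":
        return "auto"  # non-production: no human gate
    if change.emergency:
        return "expedited: on-call lead, post-hoc review"  # fast path, still audited
    risk = (2 if change.touches_infra else 0) + (1 if change.lines_changed > 500 else 0)
    if risk == 0:
        return "auto"  # low-risk production change proceeds automatically
    if risk == 1:
        return "one technical approver"
    return "technical and business sign-off"

print(approval_path(Change("staging", 2_000)))                       # auto
print(approval_path(Change("production", 40)))                       # auto
print(approval_path(Change("production", 800)))                      # one technical approver
print(approval_path(Change("production", 800, touches_infra=True)))  # technical and business sign-off
```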

Secrets Management and Security Standards

Centralising secrets management strengthens pipeline security. Security measures should be integrated at every stage, from dependency vulnerability scans to static code analysis and container image checks. Any failed security tests should block deployments until resolved. Regular audits of pipeline configurations can also catch potential issues before they escalate into major risks.
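"Failed security tests should block deployments" usually translates into a severity threshold applied to scanner output. The sketch below assumes a simplified, hypothetical findings format (real scanners each have their own report schemas) and returns a process exit code; a CI step could pass that value to `sys.exit` so the job fails. The finding IDs are placeholders.

```python
SEVERITY_ORDER = ["low", "medium", "high", "critical"]

def gate(findings: list[dict], fail_at: str = "high") -> int:
    """Return 0 (pass) or 1 (block) for a list of scanner findings."""
    threshold = SEVERITY_ORDER.index(fail_at)
    blocking = [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= threshold]
    for f in blocking:
        print(f"BLOCKED by {f['id']} ({f['severity']}) in {f['package']}")
    return 1 if blocking else 0

findings = [
    {"id": "EXAMPLE-001", "severity": "medium", "package": "libfoo"},
    {"id": "EXAMPLE-002", "severity": "critical", "package": "libbar"},
]
print(gate(findings))      # 1: the critical finding blocks the deployment
print(gate(findings[:1]))  # 0: a medium finding is below the default threshold
```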

Cost Control Strategies

Governance and cost control go hand-in-hand to ensure pipelines remain efficient and affordable.

Optimising Resources and Build Processes

Pipeline expenses can escalate rapidly without careful resource management. Build agents consume compute power, artefacts take up storage, and cloud services charge for usage time. Fine-tuning these elements can significantly cut costs.

Using build caching to reuse previously compiled dependencies and test results saves both time and money. Parallel execution can speed up builds but requires careful planning to avoid overloading resources. Selecting the right size for build agents ensures performance without overspending on unnecessary capacity.

Cloud Cost Management

Understanding where money is spent is key to controlling cloud-related costs. Analysing usage patterns, identifying inefficiencies, and applying automated controls can lead to significant savings. For instance, shutting down development environments outside business hours, using spot instances for non-critical tasks, or setting resource quotas can all help manage expenses.

Scheduling non-urgent tasks, like test builds, during off-peak hours is another cost-saving measure. Unlike production deployments, these workloads often have the flexibility to run at less expensive times.
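Both ideas above, pausing development environments outside business hours and deferring non-urgent builds, reduce to time-window checks. The sketch below assumes hypothetical business hours of 08:00 to 19:00 on weekdays in a single time zone; production deployments are deliberately never deferred.

```python
from datetime import datetime

BUSINESS_START, BUSINESS_END = 8, 19  # hypothetical working hours (local time)

def in_business_hours(t: datetime) -> bool:
    return t.weekday() < 5 and BUSINESS_START <= t.hour < BUSINESS_END

def dev_env_should_run(t: datetime) -> bool:
    return in_business_hours(t)  # otherwise scale development environments to zero

def next_run_time(job_kind: str, requested: datetime) -> datetime:
    """Run urgent work now; push non-urgent jobs to the next off-peak time."""
    if job_kind == "production-deploy" or not in_business_hours(requested):
        return requested
    # Inside business hours on a weekday: defer to today's close of business.
    return requested.replace(hour=BUSINESS_END, minute=0, second=0, microsecond=0)

tuesday = datetime(2024, 6, 4, 10, 30)
print(next_run_time("nightly-test-suite", tuesday))    # 2024-06-04 19:00:00
print(next_run_time("production-deploy", tuesday))     # 2024-06-04 10:30:00
print(dev_env_should_run(datetime(2024, 6, 8, 12, 0)))  # False (Saturday)
```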

For enterprises aiming to cut their CI/CD costs while maintaining performance, Hokstad Consulting's cloud cost engineering services can identify savings of up to 30-50%. Their expertise in DevOps and cost management helps businesses design scalable, cost-efficient pipelines.

Tracking and Allocating Costs

Monitoring costs by project, team, or environment provides valuable insights into resource consumption. This data supports better budget planning and highlights areas for optimisation.

Introducing chargeback models can also encourage responsible resource usage. By linking costs directly to the teams generating them, organisations can promote efficiency. However, this approach needs careful implementation to avoid unintended consequences, such as teams cutting corners on quality or security to save money.
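Tracking costs by team depends on consistent tagging. The sketch below aggregates hypothetical billing line items by a `team` tag and reports untagged spend as its own bucket, since untagged resources are what most often undermine a chargeback model. Real billing exports (for example, AWS Cost and Usage Reports) have far larger schemas; the field names here are invented.

```python
from collections import defaultdict

def cost_by_team(line_items: list[dict]) -> dict[str, float]:
    """Sum costs per 'team' tag, largest first; missing tags land in 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        team = item.get("tags", {}).get("team", "untagged")
        totals[team] += item["cost_gbp"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))

items = [
    {"service": "build-agents", "cost_gbp": 120.0, "tags": {"team": "payments"}},
    {"service": "artifact-storage", "cost_gbp": 30.5, "tags": {"team": "search"}},
    {"service": "build-agents", "cost_gbp": 45.25, "tags": {"team": "payments"}},
    {"service": "test-db", "cost_gbp": 12.0},  # no tags at all
]
print(cost_by_team(items))  # {'payments': 165.25, 'search': 30.5, 'untagged': 12.0}
```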

Continuous Improvement for Evolving Needs

Cost control is just one piece of the puzzle - continuous improvement ensures pipelines stay relevant as business needs shift.

Using Metrics to Drive Optimisation

Improving pipelines starts with tracking metrics like build times, deployment frequency, failure rates, and recovery times. These indicators reveal bottlenecks and guide optimisation efforts.
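These metrics are straightforward to compute once deployments are recorded consistently. The sketch below assumes a hypothetical record per production deployment (when it happened, whether it caused a failure, and when service was restored) and derives deployment frequency, change failure rate and mean time to recovery, three of the measures popularised by the DORA research.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class Deployment:
    at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # when service recovered, if the deploy failed

def pipeline_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    failures = [d for d in deploys if d.failed]
    recoveries = [d.restored_at - d.at for d in failures if d.restored_at]
    mttr = sum(recoveries, timedelta()) / len(recoveries) if recoveries else timedelta()
    return {
        "deploys_per_day": len(deploys) / period_days,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "mttr_minutes": mttr.total_seconds() / 60,
    }

start = datetime(2024, 6, 3, 9, 0)
deploys = [
    Deployment(start),
    Deployment(start + timedelta(days=1)),
    Deployment(start + timedelta(days=2), failed=True,
               restored_at=start + timedelta(days=2, minutes=45)),
    Deployment(start + timedelta(days=4)),
]
print(pipeline_metrics(deploys, period_days=7))
```

Tracked over time, a rising change failure rate or recovery time points to where optimisation effort should go next.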

Advanced analytics can uncover patterns, such as resource contention during specific times or higher failure rates for certain changes. Acting on these insights allows teams to address issues proactively instead of reacting to problems after they occur.

Feedback and Collaboration

Regular team retrospectives provide a platform for discussing challenges and brainstorming solutions. Collaboration between development, operations, and security teams ensures pipeline updates meet the needs of all stakeholders. Periodic reviews of pipeline architecture and tools help identify when changes are necessary due to new technologies or business requirements.

Scaling with Organisational Growth

As organisations expand, their CI/CD pipelines must adapt. New compliance rules, geographic regions, and tech stacks all influence pipeline design. Regular architecture reviews help ensure pipelines remain effective rather than becoming outdated constraints.

Pipeline updates should follow an incremental approach, focusing on small, manageable changes rather than disruptive overhauls. This method aligns with earlier modular pipeline principles, allowing systems to evolve smoothly alongside organisational growth.

Emerging technologies like AI and machine learning are also reshaping pipeline strategies. AI-driven testing can catch issues earlier, while machine learning models optimise resource allocation and predict potential failures. Enterprises exploring these tools are positioning themselves for more efficient and reliable pipelines.

Ultimately, successful enterprise CI/CD pipelines integrate governance, cost control, and continuous improvement into a unified strategy. This approach keeps pipelines secure, efficient, and scalable, even as organisations grow and change.

Building Scalable CI/CD Pipelines for Enterprise Success

Creating scalable CI/CD pipelines in enterprise environments is all about striking the right balance - combining technical expertise with practical business considerations. This involves using well-established design patterns, choosing the right tools, automating effectively, and embracing continuous improvement.

Design patterns are the backbone of any scalable pipeline. Enterprises that lean into modular, microservices-based designs avoid the headaches that come with monolithic systems. For instance, the fan-out pattern is a game-changer for organisations juggling multiple product lines, while pipeline-as-code ensures consistency across teams and environments. These strategies don’t just simplify technical workflows - they also create room for growth without adding unnecessary complexity.

Tool choice and automation play a critical role in determining how effective and scalable a pipeline can be. Many enterprise pipelines rely on infrastructure as code, not just for deployments but for configuring the entire pipeline itself. This approach reduces configuration drift and allows successful setups to be reused across projects. Platforms like Kubernetes add resource isolation and dynamic scaling, which statically provisioned build agents lack. When paired with tactics like build caching, parallel execution, and resource optimisation, these tools deliver both efficiency and scalability. This focus on tooling also extends naturally to governance and cost management, helping to keep budgets in check.

Cost management and governance are key for any enterprise-grade pipeline. By establishing cost tracking and chargeback models early on, organisations encourage teams to use resources wisely. This not only keeps expenses under control but also promotes accountability across the board.

Integrating security and compliance into the pipeline is non-negotiable for enterprises. By embedding practices like security scanning, secrets management, and audit trails into every stage, businesses can build pipelines that are not only scalable but also secure and compliant. These measures also set the stage for ongoing improvements in performance and reliability.

Continuous improvement is what keeps pipelines future-ready. Enterprises that regularly assess pipeline metrics, collect feedback from development teams, and adapt to changing needs tend to outperform those that treat their pipelines as static systems. Emerging technologies like AI-driven testing and machine learning-based resource optimisation are already shaping the future of pipeline management, helping enterprises stay ahead of the curve.

The goal isn’t to adopt every tool or best practice - it’s about creating a system that grows with your organisation while staying reliable, secure, and cost-efficient. For enterprises looking to refine their DevOps strategies and manage cloud costs more effectively, Hokstad Consulting offers expertise in pipeline design and cost optimisation.

At the end of the day, scalable CI/CD pipelines should enable the business, not hold it back. They empower teams to deliver faster and with greater confidence. This blend of technical know-how and business practicality is what separates pipelines that genuinely scale from those that simply add complexity. By aligning with trends like AI-driven testing, enterprises can ensure their pipelines keep pace with evolving business needs.

FAQs

How can I choose the right CI/CD pipeline design pattern for my organisation?

Choosing the best CI/CD pipeline design pattern for your organisation hinges on factors like deployment complexity, scalability needs, and team dynamics. The decision usually spans several layers: pipeline structure (such as multi-stage pipelines), branching workflow (such as feature branches), and release strategy (such as blue-green deployments).

To decide effectively, evaluate your organisation's specific needs, such as how often you deploy, the level of automation you require, and your approach to managing risks. For instance, if your team handles frequent releases, a blue-green deployment strategy can minimise downtime. On the other hand, if isolating code changes is a priority, feature branch workflows might be a better fit. The goal is to ensure your pipeline supports speed, reliability, and maintainability.

Don’t forget to assess whether your infrastructure can handle secure, automated processes across various environments. Aligning your pipeline with these considerations will streamline deployment and better support large-scale operations.

What are the key factors to consider for effective governance and cost management in CI/CD pipelines?

To keep your CI/CD pipelines running smoothly and cost-effectively, adopting policies as code is a smart move. This method helps automate compliance, enforce standards, and cut down on manual oversight. By embedding these policies directly into your pipelines, you ensure consistency and reduce the risk of errors creeping in.

Beyond that, focusing on strategic resource allocation, continuous monitoring, and automated policy checks is key. These steps not only help optimise how infrastructure is used but also keep your pipelines secure and compliant, all while avoiding unnecessary expenses. Regularly reviewing and updating your pipeline configurations can further improve efficiency and scalability, making sure they align with your organisation's specific needs.

How can enterprises design CI/CD pipelines that scale and adapt to evolving business needs?

To build CI/CD pipelines that can handle growth and adapt to shifting business needs, enterprises should prioritise a modular design. This approach enables individual components to be scaled or updated independently, which adds flexibility and minimises downtime.

Integrating automation and rigorous testing at every stage is key to keeping processes efficient and reliable. Tools like Jenkins and Azure DevOps are excellent for providing centralised management and ensuring scalability. At the same time, encouraging a culture of continuous learning and collaboration helps teams to refine workflows and drive innovation.

By blending these practices, enterprises can create pipelines that not only address current requirements but are also ready to handle future challenges and growth.